Researchers at the University of California have developed a robotic perception framework that allows robots to recognize objects by touch, VentureBeat reports.

The team built on work published by researchers at Carnegie Mellon University, aiming to design an artificial intelligence (AI) system that could recognize whether a set of physical observations corresponded to a specific object.

People naturally associate the appearance and material properties of objects across multiple modalities. For example, someone who sees a soft toy can imagine how its surface would feel under their fingers. Conversely, someone who touches the edge of a pair of scissors can picture the scissors: not only their identity, but also their shape, size and proportions.

Research

The scientists wanted to teach these kinds of associations to a robot manipulator, but this did not prove easy. Tactile sensors lack the “global view” that image sensors have: they only measure local surface properties. Their measurements are also more difficult to interpret.

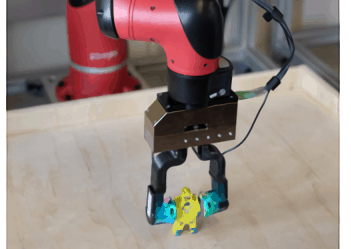

The team addressed this by combining a high-resolution GelSight touch sensor, which generates measurements with a camera that detects deformations in a gel caused by contact with an object, with a convolutional neural network.

Two GelSight sensors were mounted on the fingers of a parallel-jaw gripper. The gripper was used to compile a dataset pairing camera observations with tactile sensor measurements, recorded whenever it successfully closed its fingers around an object.
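The idea of matching a touch reading to a visual observation can be illustrated with a minimal sketch. This is not the researchers' model: the hand-made feature vectors below stand in for whatever a trained convolutional neural network would produce, and cosine similarity stands in for the learned correspondence score. The names `cosine_similarity`, `identify_by_touch` and the example objects are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero vector matches nothing
    return dot / (norm_a * norm_b)


def identify_by_touch(touch_features, candidate_image_features):
    """Return the candidate whose image features best match the touch features.

    Assumption: both modalities have already been mapped into the same
    feature space; in a real system a trained network would do that.
    """
    return max(
        candidate_image_features,
        key=lambda name: cosine_similarity(
            touch_features, candidate_image_features[name]
        ),
    )


# Toy, hand-made features (hypothetical values, not real sensor data).
candidates = {
    "scissors": [0.9, 0.1, 0.0],
    "soft_toy": [0.1, 0.8, 0.3],
    "mug": [0.2, 0.2, 0.9],
}
touch = [0.85, 0.2, 0.05]

best_match = identify_by_touch(touch, candidates)
print(best_match)
```

Identification then amounts to scoring the touch reading against each candidate image and picking the highest-scoring one.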

Outcomes

In total, the researchers collected samples for 98 different objects. Eighty were used to train the neural network and the other 18 were kept as a test set. In the tests, the system correctly identified objects in 64.3 percent of cases, even though these objects had not been encountered during training.
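The evaluation setup described above, where whole objects rather than individual samples are held out, can be sketched as follows. This is an illustrative sketch only: the object names, the random seed and the helper functions `split_by_object` and `accuracy` are assumptions, not the researchers' code.

```python
import random


def split_by_object(object_ids, n_train, seed=0):
    """Split unique object IDs into disjoint train and test sets.

    Splitting by object (not by sample) guarantees that every test
    object is unseen during training.
    """
    ids = sorted(set(object_ids))
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(ids)
    return set(ids[:n_train]), set(ids[n_train:])


def accuracy(predictions, labels):
    """Fraction of predictions that equal the true labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)


# 98 placeholder object names, matching the counts reported in the article.
objects = [f"object_{i:02d}" for i in range(98)]
train_objects, test_objects = split_by_object(objects, n_train=80)

print(len(train_objects), len(test_objects))
```

The reported 64.3 percent corresponds to `accuracy` computed over predictions made for the held-out objects only.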

According to the researchers, there is still room for improvement: all the images came from the same environment, and the study was based on individual grasps rather than multiple tactile interactions. Nevertheless, they consider it a promising first step towards perception systems that, like humans, can identify objects by touch alone.

This news article was automatically translated from Dutch to give Techzine.eu a head start.