During Advancing AI 2025 in San Jose, AMD was adamant: Nvidia can be beaten and has no moat. Faster Instinct GPUs, scalable networking, a fully-fledged AI rack solution, and a streamlined roadmap inspire confidence.

AMD’s upward trajectory since 2016 is now well known. After teetering on the brink of financial ruin, it managed to beat Intel on price, performance, and execution in the CPU arena. Ryzen chips are now commonly found in desktops and laptops, and Epyc processors have taken a huge share in professional IT environments. On the GPU side, the challenge is far steeper. Nvidia, the “world champion of AI,” to put it somewhat bluntly and purely looking at stock prices, is not nearly as vulnerable as Intel was and continues to be.

In this article, we’ll discuss both AMD’s AI-focused hardware and software. Thanks to a particularly comprehensive explanation, we already know a great deal about what AMD will offer in 2026-27, with little need for creative imagination to figure out what comes after that. If all goes well, AMD will be as predictable in its execution as the metronomic TSMC, where its chips are manufactured.

Instinct MI350 now, MI400 later

We already knew in October last year that Instinct MI350 would become the main AMD GPU for enterprise AI in 2025. Production actually started at the beginning of June, with the first shipments expected in Q3. The biggest buyers are hyperscalers, AI specialists, and “neo clouds,” i.e., GPU-focused cloud players such as Crusoe. Andrew Dieckmann, CVP and GM Data Center GPU Business, confirms that adoption is going well. Seven out of ten of the largest AI companies now use AMD Instinct “at scale.”

The current go-to-market strategy for AMD Instinct rests on four pillars. First and foremost, performance must match or exceed that of Nvidia. The TCO must also be lower, which is essential for attracting customers when they have a free choice between Nvidia and AMD. They need to feel the financial gain to go for the challenger in the market. Migration must also be easy, run on an open (source) basis as much as possible, and be customer-centric.

The last of these sounds like typical marketing talk, but customers’ recent wishes are definitely being fulfilled. During her presentation, CEO Lisa Su showed that sovereign AI initiatives based on AMD are being developed from Austria to Singapore, numbering in the dozens.

In the near future, MI350 will be central as an alternative to Nvidia’s Blackwell offering, competing with it through lower costs and a focus on easy implementation. This includes options for both air cooling and liquid cooling, even though Dieckmann says that the move to liquid has reached an inflection point this year. AMD will continue to offer air-cooled options, but in the future, customers will likely opt to revamp their data centers or build new locations with a liquid-first philosophy.

MI400, which will follow in 2026, has bizarrely already been ‘benchmarked’ against Nvidia’s fictional Vera Rubin GPU. AMD promises performance somewhere between “1x and 1.3x.” We’ll see. Both chips are merely figments of each company’s imagination right now. MI500 is set for 2027. We’ll let you fill in what 2028, 2029 and beyond have in store.

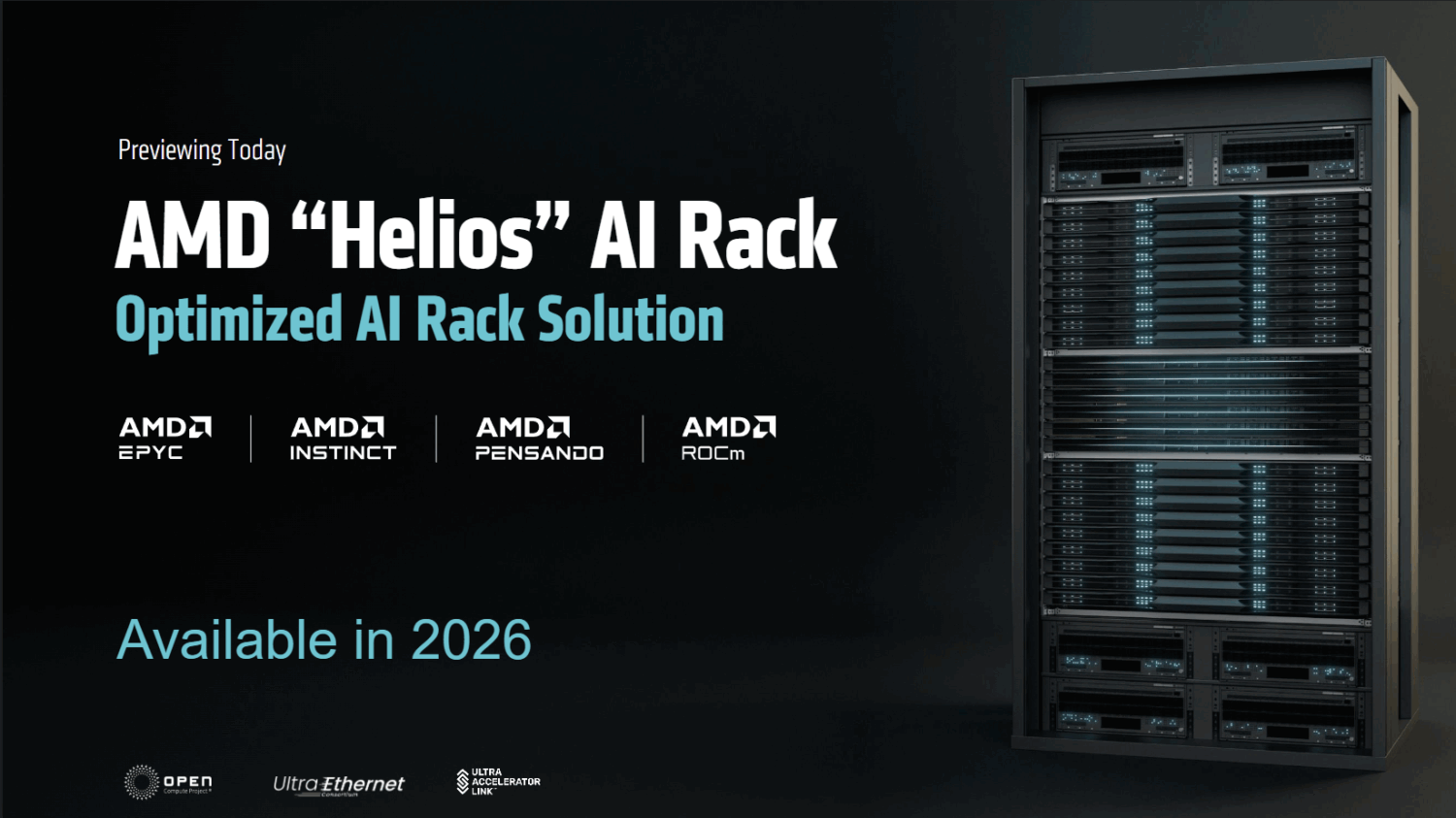

Truly Nvidia-esque: the Helios AI Rack

Last year, it became clear that AMD had its sights set on the data center network. For enterprise AI workloads, the network is crucial for providing each GPU with the data it needs, something that can cause delays of up to 10 percent when it fails regularly (as Meta discovered). In 2025, packets can no longer be lost occasionally.

The reason this matters so much to AMD is that it actually wants to offer more than “just” Epyc CPUs and Instinct GPUs. Of course, it also builds NICs and DPUs, but we need to think a step beyond the core components. Nvidia, for example, delivers what it sometimes calls “AI boxes” or “plug-and-play AI.” These are extremely expensive DGX GB200 systems, often referred to as end-to-end solutions, that are connected by hyperscalers and the largest AI players for massive training and inferencing. Nvidia does this with InfiniBand, a proprietary connectivity standard, while AMD, together with its Ultra Ethernet Consortium colleagues, is building on the well-known Ethernet.

Despite the open standards, AMD prefers to deliver a rack-scale solution to its most lucrative customers. To fit within the company’s philosophy, it does need to be OCP compliant. This will finally happen in 2026 in the form of the AMD “Helios” AI Rack. It combines Epyc, Instinct, Pensando, and the ROCm software stack for the simplest and largest-scale AI deployment possible. This, to make it clear, is where later GPT, Gemini, Claude and Llama models may be born.

The specifications are nothing short of astronomical. 72 Instinct MI400 GPUs (each with 432GB of HBM4 memory, much more than MI350’s 288GB) deliver 31 TB of VRAM and 1.4 PB/s of memory bandwidth. That’s 1.5x both the capacity and the bandwidth of Vera Rubin. It represents a faster evolution of the Instinct line than previous releases suggested, resulting in a hockey stick on the AI performance graph. The catch-up has well and truly happened, if the raw compute converts into real-world performance.
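The capacity figure can be sanity-checked with simple arithmetic. The sketch below uses only the numbers quoted above (72 GPUs, 432GB each, 1.4 PB/s in aggregate, 288GB for MI350). The per-GPU bandwidth is derived here by division and is not a figure quoted in AMD's presentation, and decimal units (1 TB = 1,000 GB) are an assumption.

```python
# Back-of-the-envelope check of the Helios rack figures quoted in the article.
GPUS_PER_RACK = 72
MI400_HBM_GB = 432          # HBM4 per MI400, as quoted
MI350_HBM_GB = 288          # HBM per MI350, as quoted
RACK_BANDWIDTH_PBS = 1.4    # aggregate memory bandwidth, as quoted

# Assumption: decimal units, 1 TB = 1,000 GB and 1 PB = 1,000 TB.
total_hbm_tb = GPUS_PER_RACK * MI400_HBM_GB / 1000

# Derived value, not an AMD-quoted spec: aggregate bandwidth split evenly per GPU.
per_gpu_bandwidth_tbs = RACK_BANDWIDTH_PBS * 1000 / GPUS_PER_RACK

# Generation-over-generation growth in memory per GPU.
capacity_growth = MI400_HBM_GB / MI350_HBM_GB

print(f"Rack HBM capacity:    {total_hbm_tb:.1f} TB")
print(f"Bandwidth per GPU:    {per_gpu_bandwidth_tbs:.1f} TB/s (derived)")
print(f"MI400 vs MI350 HBM:   {capacity_growth:.2f}x")
```

The product comes out at just over 31 TB, matching the rounded figure AMD quotes.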

We risk forgetting about Epyc here, but the CPU also remains a critical component for Helios. Epyc “Venice” is the codename for the Zen 6-based processor in this AI Rack. AMD promises double the bandwidth between CPU and GPU, 70 percent higher CPU performance and memory bandwidth of 1.6 TB/s.

It’s the software

As impressive as the hardware story is, AMD’s Achilles heel is currently its software. While Nvidia defends its 90 percent enterprise AI market share with the proprietary CUDA software stack, AMD is challenging it with the open-source ROCm project. More partnerships, such as with PyTorch, Triton, and Hugging Face, should provide day-0 support for all new AI models.

ROCm 7 and in particular the newly minted ROCm Enterprise AI take it a step further. Cluster management aims to eliminate headaches for massive AMD expansion. Ramine Roane, CVP AI Solutions Group, says that built-in observability and deployment options eliminate weeks of work and prevent downtime.

In addition, ROCm can now run locally on fully AMD-powered desktops and laptops. This was previously a sore point for AMD, Roane admits; Nvidia users could use CUDA on their less impressive notebooks, for example. Another addition is support for Windows, which has also long been a problem for ROCm adoption.

A somewhat delicate point is the fact that ROCm greatly improves the performance of existing AI hardware. For example, DeepSeek-R1 runs almost four times faster on ROCm 7 than on ROCm 6 on the same Instinct MI300. Perfect, you might say, but this indicates that a lot of headroom was (and perhaps still is) left untapped, with the hardware falling well short of its potential. Given that AMD’s largest customers upgrade every year in this “abrupt” GPU refresh cycle, one has to wonder how much performance is lost each year. Nevertheless, the improvement is there, and ROCm receives new updates every two weeks. Perhaps it’s the inevitable end result of the AI boom occurring in such an explosive way: gains are to be made everywhere.

In addition, an AMD Developer Cloud is being launched, giving developers access to a day’s worth of free AI hardware use. Only a login via GitHub is required. AMD says it wants to bring ROCm everywhere, with this charm offensive marking an important step.

Nvidia has no moat

By far the most striking statement from AMD’s Advancing AI event comes from Ramine Roane. He argues that Nvidia has no moat whatsoever, i.e., no built-in advantage that challengers to CUDA cannot easily overcome. His reasoning: completely new GPU kernels have to be written every two years, which levels the playing field again. In other words, the championship is re-run with every refresh cycle, allowing AMD to bite into Nvidia’s market share as customers upgrade to the best option they have each time.

This reset is not about the functional support of a GPU winding down or anything like that, but about the fundamental architecture of the chip. For those unfamiliar with this kind of hardware: plainly put, the reason GPUs run AI workloads so much faster than CPUs is that the required calculations are much better suited to the designs in a GPU chip than to what’s found in a complex CPU core. Matrix multiplications, the foundation of LLM calculations, are simply more similar in structure to the physical designs of GPU acceleration. The physical transistors are organized in such a way as to make them the best instrument to date for the jobs handed out inside AI workloads.
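As a rough illustration of why matrix multiplication maps so well onto parallel hardware, here is a minimal pure-Python sketch (not how any GPU library actually implements it). Each output element is its own dot product and depends on no other output element, so in principle all of them can be computed at the same time.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    # out[i][j] needs only row i of `a` and column j of `b`.
    # No output element depends on another, so all rows * cols
    # dot products are independent units of work.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b))
```

A CPU core works through these dot products a handful at a time, while a GPU spreads them across thousands of simple arithmetic units.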

However, these designs need to be tweaked slightly each time. This is because different data formats are used to improve performance, with the move to ever-lower precision (from FP32 to FP16 to FP8, FP6 and FP4, for instance). The emphasis is on different types of calculations that, although similar to the previous ones, require a different architecture for optimal performance.
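To illustrate why lower precision is attractive, the sketch below estimates the memory needed just to hold a model's weights at each format's nominal bit width. The 70-billion-parameter model is a hypothetical example, not something from AMD's presentation, and the estimate deliberately ignores activations, caches, and any scaling metadata that low-precision formats may carry.

```python
# Nominal bits per stored value for the formats named in the article.
BITS_PER_VALUE = {"FP32": 32, "FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}

def weight_footprint_gb(num_params: int, bits: int) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return num_params * bits / 8 / 1e9

# Hypothetical model size, chosen only for illustration.
HYPOTHETICAL_PARAMS = 70_000_000_000

for fmt, bits in BITS_PER_VALUE.items():
    size_gb = weight_footprint_gb(HYPOTHETICAL_PARAMS, bits)
    print(f"{fmt}: {size_gb:.1f} GB")
```

Halving the bit width halves the weight footprint, so the same memory and bandwidth go twice as far, provided the chip has hardware that can compute in the narrower format.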

In other words, new GPU generations for AI are only partly needed for their efficiency gains and generic speed boosts. Much of the speed-up comes from building chips that better reflect the required calculations. On top of that, AMD, like Nvidia, needs to expand and optimize the software. It appears they’ve now got that sorted consistently for the first time.

Conclusion: a strong story, but what does it mean in practice?

We believe that AMD’s strategy of offering performance close to Nvidia at a lower price makes total sense. However, that’s just to get a foot in the door. The real key to success long-term is the open nature of its proposed AI stack, with partners such as Cisco, Juniper, and HPE contributing to innovation without forcing lock-in or expensive upgrades.

However, Nvidia’s dominance is undeniable. When we reminded AMD that current estimates put Nvidia at 90 percent market share in this sector, they nodded in agreement. Only if that number changes will Nvidia’s stranglehold clearly begin to weaken. Plus: the market isn’t expanding like it used to, with demand for AI training tapering off and AI inferencing taking centre stage. The total addressable market may end up just as large, sure, but there’s no guarantee an inference-first world needs the kind of architecture AMD and Nvidia are proposing in quite the same way. In any case, AMD has by far the best credentials to take on Nvidia. Intel, Cerebras, Groq, you name it, they don’t even feature in the story.