Snowflake makes AI Data Cloud the brain of any business

After over a decade of building, Snowflake faces a new era. The company shook up the traditional data warehou...

IBM claims that watsonx provides the building blocks for successfully deploying Generative AI initiatives in ...

The startup Cognition AI has developed a chatbot that uses generative AI for software development. That in it...

As organizations deploy generative AI at an ever-increasing rate, its shortcomings are becoming all too appar...

Meta has announced Llama 3, the successor to the highly successful open-source model Llama 2. Along with the ...

Visma’s AI team is quietly redefining document processing across Europe. With a background spanning nearly ...

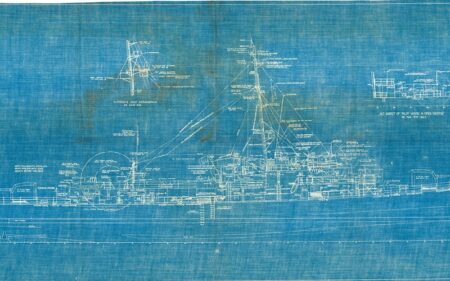

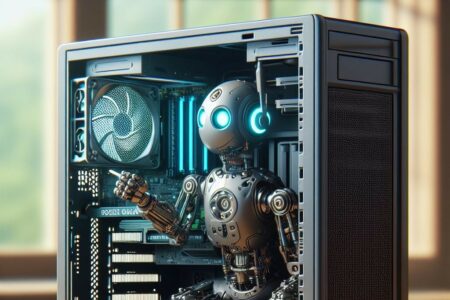

Open source object storage company MinIO has popped open DataPod, a reference architecture for building data ...

Canadian AI startup Cohere has raised US$500 million from investors, or just under 460 million euro...

Technology infrastructure provisioning, optimisation controls and a wider capability for governance across en...

Ilya Sutskever has once again founded his own AI company. His previous venture involved the successful OpenAI...

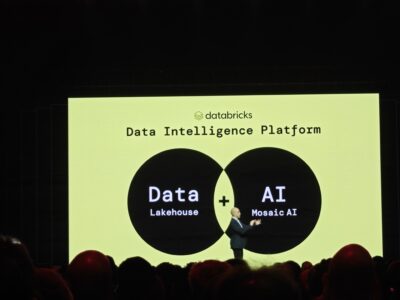

Databricks' Mosaic AI will focus on stronger model quality, new AI governance tools, and compound AI systems....

Through a partnership with builder Cognition, Microsoft is bringing Devin within reach. This chatbot aroused ...

We need AI to ‘broaden’ in depth, scope, accuracy and validity and, at the same time, we need AI to ‘na...

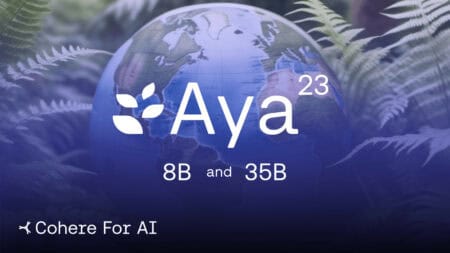

The new open-source LLM Aya 23 will appear in two versions: one with 8 billion parameters and one with 35 bil...

Generative Artificial Intelligence (gen-AI) is never out of the news headlines, equally full of hype and prom...

With generative Artificial Intelligence (gen-AI) rarely out of the technology newswires for a moment, current...

With the new Large Language Model Arctic, Snowflake can help companies generate code and SQL. In this way,...

OpenAI announced new features for enterprise customers, focusing on improved security and expanding the Assis...

French AI startup Mistral appears to have an unquenchable appetite for investor capital. The company is repor...

Google researchers claim they've cracked the code to give large language models (LLMs) the literary equivalen...

In response to (certainly not unwarranted) concerns about job loss due to the rise of AI, major technology co...

Microsoft has once again shaken up its management. Pavan Davuluri, who has been heading the Surface division ...

The new general purpose large language model should help organizations realize generative AI apps that perfor...

Last week, Amsterdam-based tech company Bird laid off 90 employees within the space of just seven minutes. By...

Both the European Union and its individual member states hope to become more digitally autonomous. However, f...

The new breed of generative AI of course makes extensive use of Large Language Models (LLMs) to draw its powe...

Predictions about the "AI PC" are littered with vagaries. PC vendors need a new unique selling point to regai...

Generative AI must be viewed as a feature of industry solutions – not a solution itself. Generative AI t...

ChatGPT has been in operation for one year today. But the celebration is overshadowed by the recent crisis ...

Generative Artificial Intelligence (gen-AI) has had a busy year, obviously. The technology wires have been li...

'Grok' is the first AI chatbot from xAI. This company is led by Elon Musk, who was involved with the founding...

Google CEO Sundar Pichai opens the Google Cloud Next event. He reflects on the Cloud AI platform, specificall...