The startup Cognition AI has developed Devin, a chatbot that uses generative AI for software development. That in itself is not very interesting. What is interesting is that Devin can do this work entirely independently. Based on initial benchmarks, its performance is also pretty good. Should developers start worrying?

Devin aims to go further than is possible with GPT-4, Llama and Claude 2: it is capable of performing software development tasks independently. For example, a developer might ask Devin to build a website that maps all the supermarkets in London. Devin then searches for the addresses and contact information of the supermarkets and builds a website on which they are shown. The developer can follow what is happening step by step, including the code and the data research tasks.

Below is a video put online by Cognition AI surrounding the launch of Devin:

The chatbot has the tools that developers normally use for their development tasks: a code editor, a browser and a shell. “Everything a human would need to do their work” is there, according to Cognition AI CEO Scott Wu. The tools run in a sandbox environment. Within this environment, Devin collaborates with the developer by reporting on the project in real time and by processing feedback and design choices.

On its website, Cognition AI has also published demos of the various development tasks Devin handles. These show how the chatbot helps solve common code problems. For example, Devin can automatically find bugs in code and fix them. Such bugs can creep into software projects when, for example, code from open-source repositories is used.

Devin beats GPT-4, Llama and Claude 2

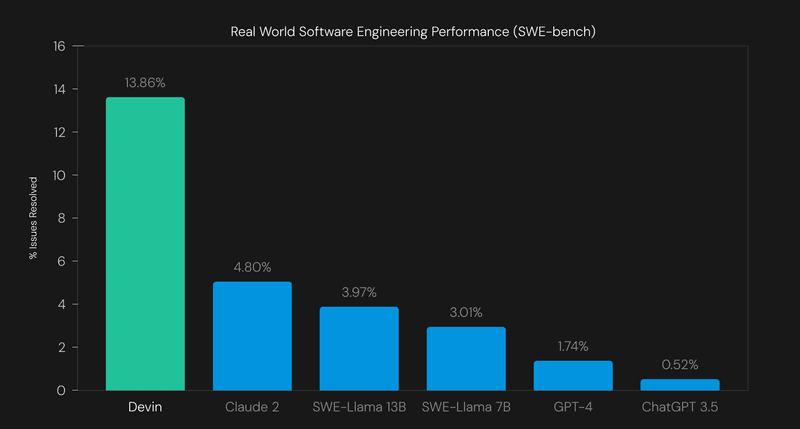

This makes Devin, in theory, an interesting option for software development. When Devin was introduced, Cognition AI also published benchmark results that say a bit more about its software engineering performance. The company relied on SWE-bench, a benchmark that asks AI models to resolve GitHub issues from open-source projects such as Django and scikit-learn. These are problems that developers regularly encounter in their work.

Devin’s performance was evaluated on a random 25% subset of the dataset and then compared with that of the other models. From this, the following statistics emerged.

An important caveat for the graph above is that Devin worked independently, while the other models were assisted. When Claude 2 works without assistance, it resolves 1.96% of the issues. In that case, both Llama models score 0.7% on the benchmark, while ChatGPT 3.5 comes out at 0.2%. GPT-4 did not manage to resolve a single issue. However, it is worth noting that the competing models’ results all date from Oct. 10, 2023, which means the models may have improved since then and may now solve more problems.
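To make these percentages concrete, here is a minimal Python sketch of how a resolve rate on a random 25% subset could be computed. It is not Cognition AI's evaluation code: the helper names `sample_subset` and `resolve_rate`, the issue identifiers and the pass/fail results are all made up for illustration.

```python
import random


def sample_subset(task_ids, fraction=0.25, seed=0):
    """Pick a random subset of benchmark tasks (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    k = round(len(task_ids) * fraction)
    return rng.sample(task_ids, k)


def resolve_rate(results):
    """Percentage of tasks resolved; `results` maps task id -> bool."""
    if not results:
        return 0.0
    return 100.0 * sum(results.values()) / len(results)


# Made-up data: 400 issues, of which a quarter is sampled for evaluation.
task_ids = [f"issue-{i}" for i in range(400)]
subset = sample_subset(task_ids)

# Pretend every tenth sampled issue was resolved (illustrative only).
results = {tid: (i % 10 == 0) for i, tid in enumerate(subset)}

print(len(subset), resolve_rate(results))
```

A score such as 1.96% is therefore simply the number of resolved issues divided by the number of issues attempted, times 100.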

Promise has yet to prove itself

The benchmark provides insight into Devin’s promise, but additional testing must show how far along the chatbot is. Cognition AI says it will soon develop additional technical studies that will shed more light on how Devin performs against competitors in other tests.

Finally, it is worth noting that Cognition AI has only been around for two months and still has plenty to prove. Even so, given its promise, investors have already put millions into the company.

For now, Devin can only be used through an early access program. Developers can request access through a Google Docs form.
