With its new large language model (LLM), Arctic, Snowflake aims to help companies generate code and SQL.

With Arctic, Snowflake aims to compete with existing models. The introduction of OpenAI’s GPT-3.5 and GPT-4 created a large market, but a growing number of alternatives are emerging, each citing benchmarks that claim it performs better. Snowflake likewise claims strong performance for Arctic. Before we dive into the benchmarks that show what the LLM can do, let’s look at how Arctic is put together.

Architecture

Arctic uses a Mixture-of-Experts (MoE) architecture. Many new LLMs are built this way, and it may be an advance over the dense approach that earlier models relied on. In a MoE model, the network is split into multiple components called experts, and a gating network (router) decides which experts handle each piece of input. Because each expert specializes, the idea is that its output is more accurate for the inputs it receives. The outputs of the selected experts are then combined to produce Arctic’s response.
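To make the routing idea concrete, here is a minimal sketch of a top-k gated MoE layer in plain Python. It is a toy illustration under stated assumptions, not Arctic’s implementation: the linear gate, the simple scaling “experts” and the choice of k=2 are all hypothetical. The gate scores every expert for the input, only the k highest-scoring experts run, and their outputs are mixed using softmax weights computed over the selected experts.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_scaling_expert(scale: float) -> Expert:
    """Hypothetical toy expert: multiplies its input by a fixed factor."""
    return lambda v: [scale * vi for vi in v]


def moe_forward(x: Vector, gate_weights: List[Vector],
                experts: List[Expert], k: int = 2) -> Tuple[Vector, List[int]]:
    """Route x to the top-k experts and combine their weighted outputs."""
    # Linear gate (an assumption): one score per expert, dot(w, x).
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Keep only the k highest-scoring experts.
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the chosen experts' scores, so the mixing weights sum to 1.
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


# Four hypothetical experts and a hand-picked 2-D gate, for illustration only.
EXPERTS = [make_scaling_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
GATE = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, -1.0]]

if __name__ == "__main__":
    output, chosen = moe_forward([1.0, 2.0], GATE, EXPERTS, k=2)
    print("chosen experts:", chosen)  # experts 1 and 2 score highest here
    print("combined output:", output)
```

Because only k experts are evaluated per input, the compute per input grows with k rather than with the total number of experts. That is the usual argument for MoE over a dense model of the same total size, where every parameter is used for every input.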

When presenting the new model, Snowflake compared this approach to a hospital, which helps clarify how the architecture works. When you’re ill, you can see a general practitioner who knows a little about everything, but the diagnosis isn’t always accurate. For the best possible diagnosis and treatment plan, it is better to consult a specialist, such as a cardiologist, psychiatrist or rheumatologist. A MoE architecture works the same way, and goes a step further: each expert is comparable to a medical professional who is even more specialized. To stay with the hospital comparison, a cardiologist might specialize in a single type of heart condition.

Performance compared to DBRX, Llama and Mixtral

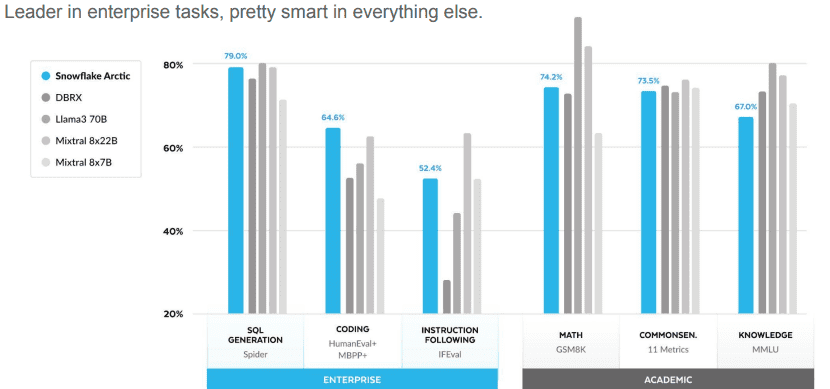

By choosing the MoE architecture, Snowflake aims to make Arctic suitable for complex enterprise workloads. Snowflake calls the first differentiator it claims for Arctic Enterprise Intelligence: the model is said to be especially good at the tasks of large enterprise organizations. During the presentation, Snowflake split the benchmarks into two categories: enterprise and academic. Arctic performs well particularly in the enterprise category (the Spider benchmark for SQL, and the HumanEval+ and MBPP+ benchmarks for coding). In the academic category (such as mathematical calculations and general knowledge), the results often favour competing models. Snowflake shared these comparisons during the introduction.

It is important to note that language comprehension, programming and math are generally considered the three most important benchmark categories for LLMs. Arctic performs adequately in language comprehension and math, but competitors score slightly better there. Arctic excels in programming, on the other hand, which is clearly Snowflake’s focus.


What else makes Arctic interesting?

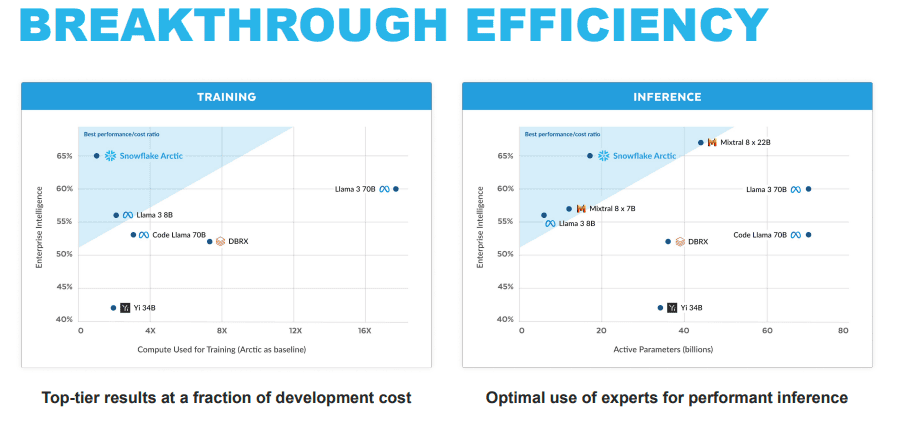

The other two differentiators Snowflake highlights are Breakthrough Efficiency and Truly Open. By efficiency, Snowflake means training and inference performance. The research team reportedly developed Arctic in less than three months, at roughly one-eighth of the training cost of comparable models. The LLM was trained on Amazon Elastic Compute Cloud (Amazon EC2) P5 instances. “In doing so, Snowflake sets a new baseline for how to quickly train state-of-the-art open, enterprise-grade models, ultimately allowing users to create cost-efficient custom models at scale,” the data company said. Snowflake also shared further comparisons on training and inference.

The last differentiator, Truly Open, refers to the release of Arctic’s model weights under an Apache 2.0 license, along with details of the research behind how the model was trained. More information about the data used in the training process appears set to follow later.

All in all, Snowflake is introducing an interesting model to the world today. Although the company is entering the LLM race later than its competitors, it can point in particular to strong results on programming benchmarks. It will be interesting to see how the market reacts to this latest LLM.