Watermarking images from AI image generators has suddenly gained momentum. OpenAI is tackling the issue on the generation side, while Meta will add a label after checking images shared on its platforms. The updates do not prevent the creation of malicious content, but OpenAI hopes the watermark will already increase trust in online images.

OpenAI announced that it will implement the C2PA standard. This standard was not designed specifically for AI images; because AI is still a fairly new technology, official laws and standards for it are largely still pending.

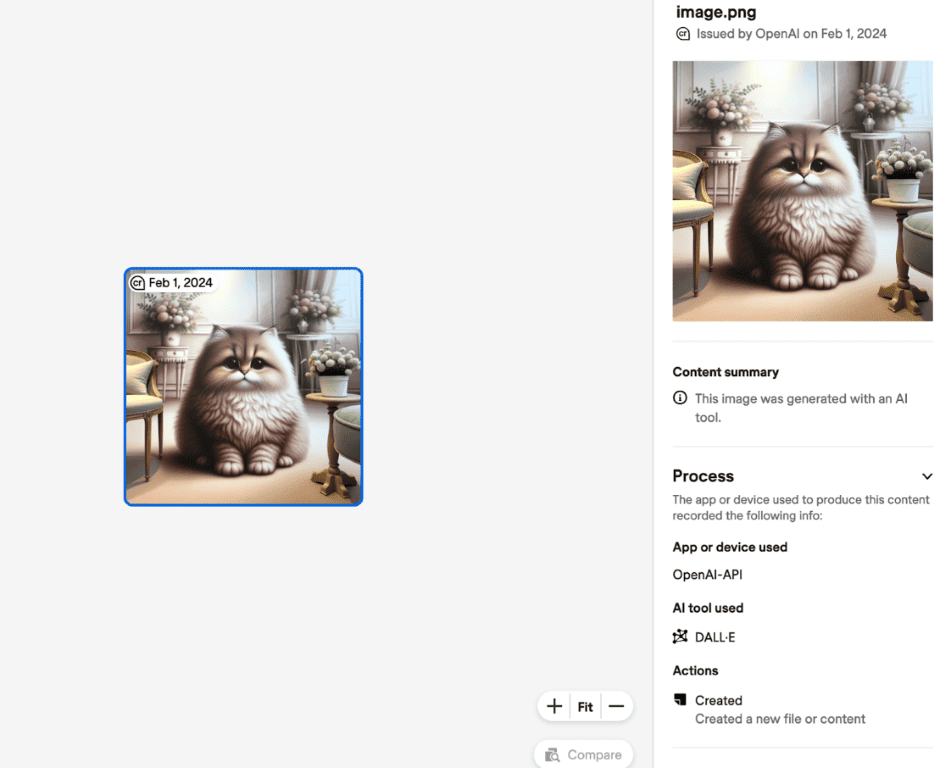

Using an existing standard does have an advantage: news media, among others, benefit from the implementation right away. C2PA adds metadata to the image file, so information that is not visible in the image itself identifies it as AI-generated. As a result, media outlets can check images for authenticity more quickly.

Images created with DALL-E 3 on the ChatGPT website and through the API receive the metadata immediately. Images generated in OpenAI's mobile apps will get it starting Feb. 12.

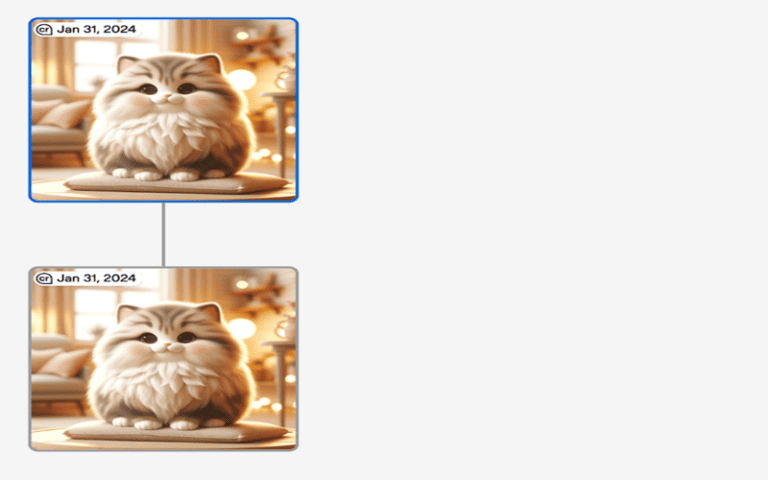

CR symbol

OpenAI is also adding a visible element to its implementation of the standard: a CR symbol that will appear in the upper-left corner of each image.

According to OpenAI, combining the two methods will help make images circulating online more trustworthy. The visible symbol compensates for a weakness of metadata: “It can easily be removed accidentally or intentionally. For example, most social media platforms today remove metadata from uploaded images, and actions such as taking a screenshot can also remove it.”

Source: OpenAI

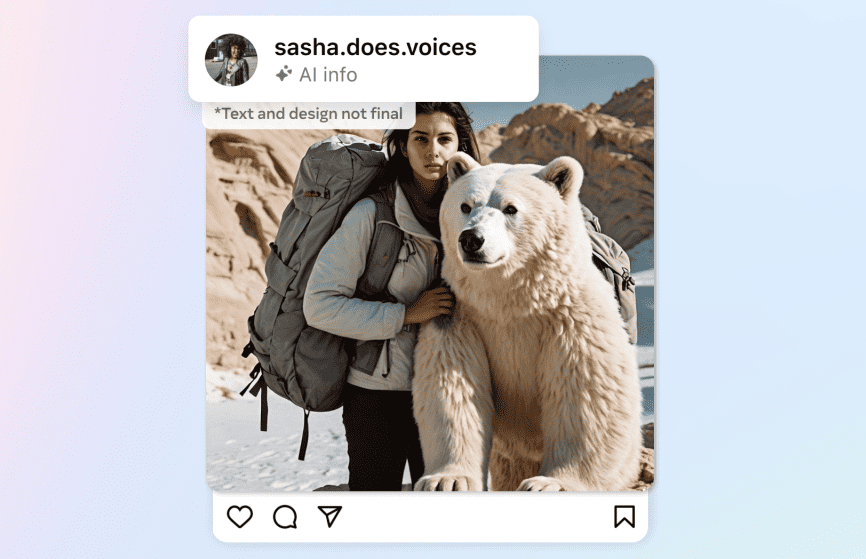

Meta checks every image

Meta, for its part, announced plans for its own AI label on Facebook, Instagram and Threads. Every image uploaded to these platforms will be checked to determine whether it should carry the label.

OpenAI's adoption of C2PA comes at just the right time for Meta's verification effort. Meta plans to build automated software that detects AI-generated images by checking for C2PA and IPTC metadata. According to Meta, Google, Microsoft, Adobe, Midjourney and Shutterstock will also implement these standards. Meta will also verify images in other ways to work around the limitations of C2PA.

Meta will introduce the labels on Facebook, Instagram and Threads in the coming months. The initiative will later expand to video and audio.

Source: Meta

More controls needed

For now, the label tells users that the image they are looking at is not real. However, harmful content must also be dealt with more quickly. In recent weeks, examples have surfaced of AI-generated content damaging the reputations of well-known people, such as pornographic images of Taylor Swift and fake audio clips in which U.S. President Joe Biden appeared to urge voters not to vote.

A label does not curb the creation of such harmful content. That requires AI tools to adopt stricter security measures that are harder to circumvent. With the Digital Services Act, the EU already places part of the responsibility on social media platforms, which must intervene faster and slow the spread of such content.