Christine Yen is CEO and co-founder of Honeycomb, a data store and query engine company. She has strong opinions on system observability in the age of cloud computing, distributed services and virtualisation. Keen to get us all thinking about shift-left techniques (i.e. those brought into play early) in application performance monitoring and wider software development lifecycle practices, Yen advocates something of a reality check on Application Performance Management (APM) and its promise of a magical quick-fix solution for all challenges and ailments.

One of the defining characteristics of today’s systems is the complexity that arises from embracing cloud-native technologies: containers, orchestration layers and serverless all contribute to key business logic being spread out in completely custom ways. So what should we do to gain control?

“Most traditional APM products or platforms have promised a magical out-of-the-box experience, wherein telemetry was automatically captured by agents and automatically reflected back in canned dashboards – but often, that magic is based on an expectation of some common architectural patterns. When the systems buck those expectations, the magic is lost,” said Yen. “Instead, we take a more considered approach which accepts and embraces the reality of this new complexity and leans into customization – auto instrumentation provides a baseline, sure, but engineering teams are empowered to add any custom metadata that allows them to ask and answer questions in the language of the business, shared across the engineering team.”

Honeycomb’s support for answering questions about high-cardinality, high-dimensionality data offers engineering teams the chance to not simply ask what is happening or where, but whom it’s impacting and how. The suggestion here is that as forward-looking engineering leaders become ever more customer-centric, the ability to answer questions about business impact differentiates the value that engineers are able to extract.
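To make the "what and where" versus "whom" distinction concrete, here is a minimal sketch in plain Python. It does not use Honeycomb's API or query language; the event fields (`endpoint`, `customer_id`, `duration_ms`, `error`) and the `count_by` helper are hypothetical names chosen for illustration. The point is only that when each request is recorded as one wide event carrying business fields, the same data can be grouped by any of them on demand.

```python
from collections import Counter

# Hypothetical wide events: one dict per request, carrying both technical
# fields and custom business fields (all names here are assumptions).
EVENTS = [
    {"endpoint": "/checkout", "customer_id": "merchant-17", "duration_ms": 120, "error": False},
    {"endpoint": "/checkout", "customer_id": "merchant-42", "duration_ms": 2300, "error": True},
    {"endpoint": "/checkout", "customer_id": "merchant-42", "duration_ms": 1900, "error": True},
    {"endpoint": "/search", "customer_id": "merchant-17", "duration_ms": 80, "error": False},
    {"endpoint": "/checkout", "customer_id": "merchant-03", "duration_ms": 150, "error": False},
]


def count_by(events, field, where=lambda e: True):
    """Count the events that satisfy `where`, grouped by an arbitrary field."""
    return Counter(e[field] for e in events if where(e))


# What is failing, and where? Group errors by a low-cardinality field.
errors_by_endpoint = count_by(EVENTS, "endpoint", where=lambda e: e["error"])

# Whom is it impacting? Group the same errors by a high-cardinality field.
errors_by_customer = count_by(EVENTS, "customer_id", where=lambda e: e["error"])
```

In this toy data set, every error sits on `/checkout` and every one of them belongs to a single merchant, which is the kind of answer a pre-aggregated dashboard keyed only on endpoint could not give.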

A ‘traditional’ APM approach

“We think engineering teams recognize that practices are changing and are starting to demand more of their tools. The classic APM approach of ‘we’ll handle it all for you’ robs those teams of the ability to lean in and reach further with their vendor of choice, instead having to put their full faith in the product teams at those vendors to assess on their behalf what problems and questions are most worth answering,” asserted Yen.

She says that she and her team see this most in the conversations they have about the role of Service Level Objectives (SLOs) in organizations. Why? Because most engineering leaders want their SLOs to reflect the customer journey through their system and the experience those customers have. That means the SLOs must be expressed in the business-level language that impacts customers (distinguishing between different merchants on an e-commerce platform, or latency of checkout times, or the success rate of a specific sort of purchase).
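As a rough illustration of an SLO phrased in business terms, the sketch below computes compliance for "checkouts by a given merchant complete successfully in under one second". It is an assumption-laden toy, not Honeycomb's SLO implementation: the `Event` fields, the one-second threshold, the 50% target and the helper names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Event:
    merchant: str       # hypothetical business-level field
    action: str         # e.g. "checkout"
    duration_ms: float
    ok: bool


def slo_compliance(events, is_eligible, is_good):
    """Share of eligible events that count as good; None if nothing is eligible."""
    eligible = [e for e in events if is_eligible(e)]
    if not eligible:
        return None
    return sum(1 for e in eligible if is_good(e)) / len(eligible)


def budget_burned(compliance, target):
    """Fraction of the error budget consumed; 1.0 means the budget is exhausted."""
    return (1.0 - compliance) / (1.0 - target)


events = [
    Event("merchant-42", "checkout", 300.0, True),
    Event("merchant-42", "checkout", 450.0, True),
    Event("merchant-42", "checkout", 2500.0, True),   # too slow, so not "good"
    Event("merchant-42", "checkout", 200.0, True),
    Event("merchant-17", "checkout", 100.0, False),   # different merchant, not eligible
]

compliance = slo_compliance(
    events,
    is_eligible=lambda e: e.merchant == "merchant-42" and e.action == "checkout",
    is_good=lambda e: e.ok and e.duration_ms < 1000.0,
)
burned = budget_burned(compliance, target=0.5)  # deliberately loose target for the toy data
```

Because eligibility and "goodness" are ordinary predicates over event fields, the same fields that define the SLO are available for drilling in when it starts to burn.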

“This introduction of key business-level metadata is important both at the SLO definition level and to directly support any action an engineer may take as a result of an SLO’s burn alert triggering,” explained Yen. “I have an immense amount of respect for the traditional APM tools that came before – and yet, I see many hundreds or thousands of folks in those R&D departments coming up with novel solutions to allow their customers to keep things exactly the way they are, but only building up more technical and cognitive debt along the way. It’s certainly possible to build ever-more powerful data lakehouses to magically correlate logs, metrics and traces… or we could acknowledge that those logs, metrics and traces are simply derivations of the same base building blocks (typically, spans within a trace) and build query engines that are powerful enough to provide the appropriate on-demand visualisations.”

It’s worth remembering at this point that correlation problems are only big problems worth solving if we accept the premise that the discrete pieces must stay discrete and be forcibly correlated in the first place. Honeycomb says it questions that core premise.
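The claim that metrics and logs are derivations of spans can be sketched in a few lines. The example below is illustrative only: the span fields (`trace_id`, `name`, `duration_ms`, `status`) and both helper functions are hypothetical, and a real query engine would do this at query time over far more data.

```python
from collections import defaultdict

# Hypothetical spans; each one is a structured record of a unit of work.
SPANS = [
    {"trace_id": "t1", "name": "checkout", "duration_ms": 120.0, "status": "ok"},
    {"trace_id": "t1", "name": "charge_card", "duration_ms": 90.0, "status": "ok"},
    {"trace_id": "t2", "name": "checkout", "duration_ms": 300.0, "status": "error"},
]


def to_metrics(spans):
    """Derive per-operation metrics (count, errors, mean duration) from spans."""
    acc = defaultdict(lambda: {"count": 0, "errors": 0, "total_ms": 0.0})
    for s in spans:
        m = acc[s["name"]]
        m["count"] += 1
        m["errors"] += int(s["status"] == "error")
        m["total_ms"] += s["duration_ms"]
    return {
        name: {
            "count": m["count"],
            "errors": m["errors"],
            "mean_ms": m["total_ms"] / m["count"],
        }
        for name, m in acc.items()
    }


def to_log_lines(spans):
    """Derive log-style lines from the same spans, one line per span."""
    return [
        f'{s["trace_id"]} {s["name"]} status={s["status"]} duration_ms={s["duration_ms"]}'
        for s in spans
    ]


metrics = to_metrics(SPANS)
log_lines = to_log_lines(SPANS)
```

Nothing needs to be correlated after the fact here, because the metric and the log line both come from the same record and share its trace ID.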

Redefining observability

“We define observability as the ability to understand the behaviour of software systems based on their outputs (telemetry, in this case, that is descriptive enough – likely with many dimensions, high cardinality – to fully serve user needs) and enables questions to be answered in a quick, exploratory way. Users should be able to take advantage of this ability to iteratively narrow the set of possible explanations for a given behaviour,” asserted Yen. “Many [in this market] define observability as some state of having and handling types of telemetry – as if the simple state of capturing and aggregating against logs, metrics, and traces provides some ability beyond what any individual type of telemetry could accomplish on its own.”

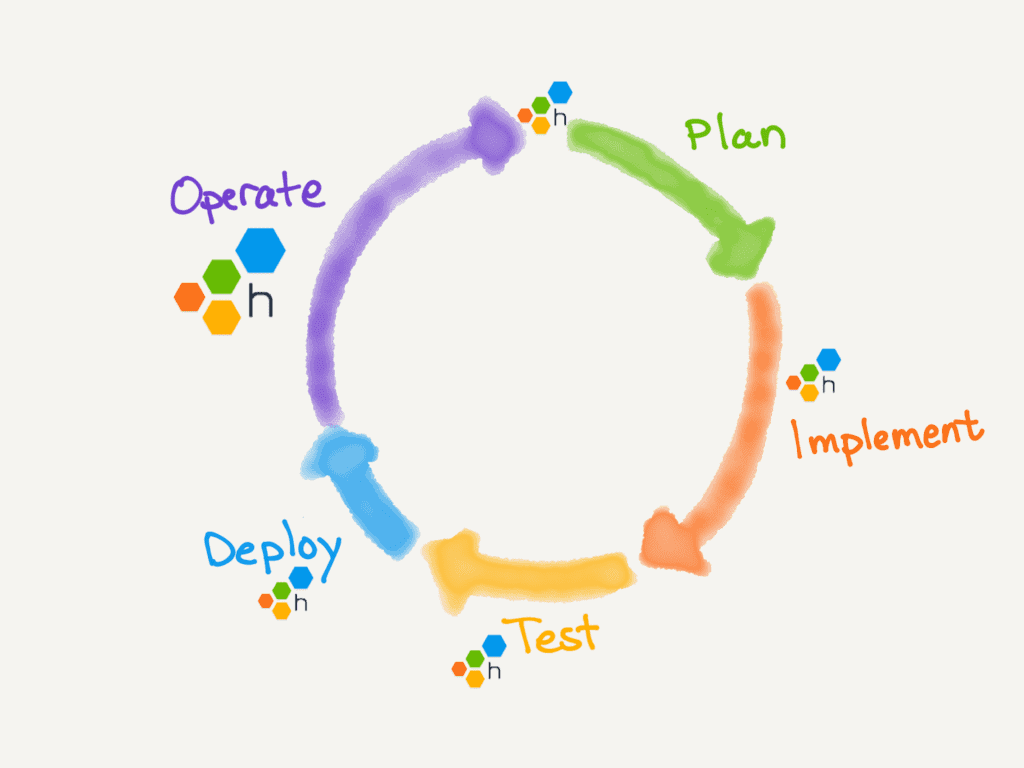

The Honeycomb chief insists her company’s approach has been different. It prefers to focus on what users are able to do with the appropriate tools: the feedback loops that fuel their workflows (‘iteratively narrow’ a search space, validate hypotheses in an ‘exploratory’ way) and their ability to connect technical signals to business impact (‘high cardinality’ fields, across many dimensions, uniquely defined by the customer).

For a low-level technology practice so focused on the inner guts of system health and operations, observability seems to garner stronger opinions than most other sub-disciplines of cloud computing. But then, perhaps that is to be expected, given that cloud is essentially virtualised and abstracted away from us so that it is out of sight for the most part. Perhaps observability is no sub-discipline either (Yen certainly wouldn’t agree that it is) and we should all think about how we monitor and control software systems a whole lot more anyway.