There is a strong urge to apply AI. Both managers and technical teams feel motivated to make the technology a success. But is that always the right attitude? According to Bryan Harris, we need to return to pragmatic thinking about AI deployment. During his recent visit to the Netherlands, we spoke with the Chief Technology Officer of SAS about why organizations should start with the problem, not the technology.

A conversation with SAS is almost always about AI. In this case, that’s a good sign, because the company was already focusing on software for building and managing models long before the generative AI hype. With its 50th anniversary coming up in July, SAS has seen many forms and trends in analytics and artificial intelligence come and go. Even so, interest in the technology in all its forms has grown at a record pace in recent years.

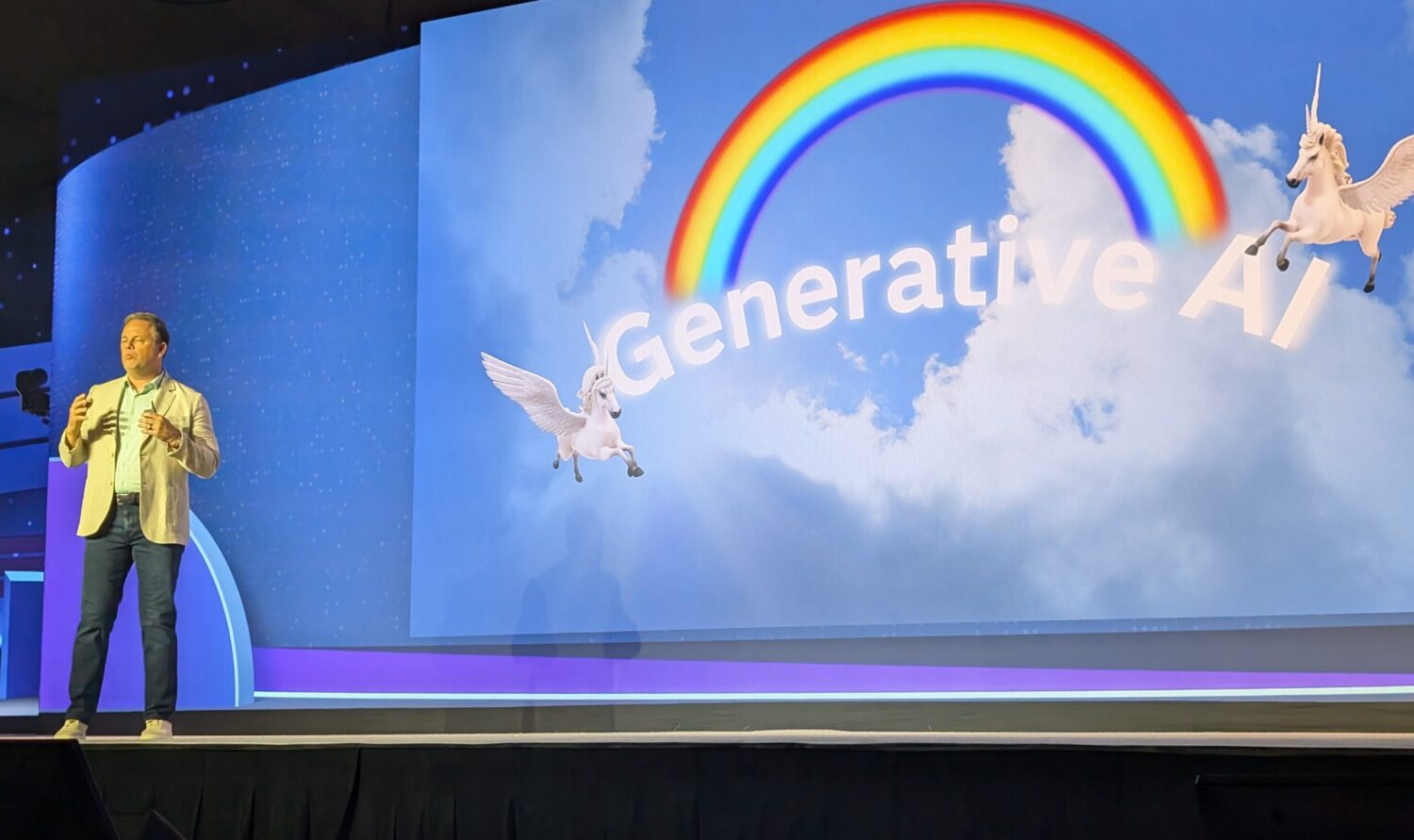

Many organizations have enthusiastically set up AI projects, often without a clear objective. According to Harris, this leads to problems. “People are obsessed with AI and not with the problem they are trying to solve,” he says. In his view, companies must first identify the problems they have, which ones they want to tackle, and which metrics they want to influence. Only then should they consider whether AI can play a role. The outcome could be, for example, that an organization uses an AI solution for “only” one of its problems.

Although that sounds logical, in practice it works very differently. Harris sees that almost every employee in every company, from the C-level to data scientists, is encouraged to tell AI success stories. An employee who successfully rolls out an AI project sees their career benefit. But that incentive has also led to distraction. According to Harris, the most powerful skill is knowing when to reject AI because it simply adds no value to certain steps.

Deterministic systems versus generative AI

According to Harris, a key challenge in AI implementation lies in the difference between deterministic and non-deterministic systems. Traditional software delivers the same output for the same input, and the same holds for traditional machine learning models once they are trained. Large language models (LLMs), however, are not guaranteed to deliver the same output for the same input. This non-deterministic nature can lead to small errors that accumulate into major problems in workflows.
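To make the accumulation point concrete, here is a minimal sketch. It assumes, purely for illustration, that each step in a workflow is correct with a fixed probability and that errors are independent; the function names, the threshold, and the error rate are hypothetical and not taken from SAS.

```python
import random


def deterministic_label(amount: float) -> str:
    """Deterministic rule: the same input always yields the same label."""
    return "review" if amount > 10_000 else "approve"


def sampled_label(amount: float, rng: random.Random, error_rate: float = 0.01) -> str:
    """Hypothetical stand-in for a sampled model that occasionally flips the label."""
    label = deterministic_label(amount)
    if rng.random() < error_rate:
        return "approve" if label == "review" else "review"
    return label


def chain_success_probability(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain is correct, assuming independent errors."""
    return per_step_accuracy ** steps
```

Under these assumptions, a step that is right 99 percent of the time gives a 20-step workflow only about an 82 percent chance of being right end to end (`chain_success_probability(0.99, 20)`).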

“When you introduce a non-deterministic system, you need to have verification and validation frameworks in place,” Harris explains. Without these controls, you are assuming that the system will always work correctly. It will not, so there is always a risk of incorrect output. SAS tries to provide as much support as possible in building the right AI systems. For example, the Viya platform offers Model Manager, which includes governance functions for validation, approvals, and monitoring. A tool such as Model Risk Management can also help here, particularly in regulated sectors, where high-risk decisions based on AI must be 100 percent correct.
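What such a control can look like in its simplest form is sketched below. This is an illustrative wrapper, not the API of Model Manager or any other SAS product; the label set, the function names, and the retry count are assumptions.

```python
from dataclasses import dataclass
from typing import Callable

ALLOWED_LABELS = {"approve", "review", "reject"}  # hypothetical label set


@dataclass
class ValidationResult:
    ok: bool
    value: str
    reason: str = ""


def validate_label(raw: str) -> ValidationResult:
    """Accept only output that matches the closed set of allowed labels."""
    cleaned = raw.strip().lower()
    if cleaned in ALLOWED_LABELS:
        return ValidationResult(True, cleaned)
    return ValidationResult(False, cleaned, f"unexpected label: {cleaned!r}")


def call_with_validation(generate: Callable[[], str], max_attempts: int = 3) -> ValidationResult:
    """Call a non-deterministic generator and return the first output that validates.

    If no attempt validates, the last failed result is returned so the caller
    can escalate, for example to a human reviewer.
    """
    last = ValidationResult(False, "", "no attempts made")
    for _ in range(max_attempts):
        last = validate_label(generate())
        if last.ok:
            return last
    return last
```

The point of the design is that the generator's output is never trusted directly: it either passes an explicit check or is surfaced as a failure.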

Harris also indicates that SAS itself is constantly looking at how it can use LLMs internally to achieve results with non-deterministic systems. A good example is code generation, something many organizations struggle with because developers’ workloads are simply too great to write everything manually. At SAS, code generation is therefore running at full speed. The company expects to have generated between 11 and 15 million lines of code using AI by the end of February 2026. This is mainly done with Anthropic Claude, which, according to Harris, excels at context integration for code generation. Microsoft GitHub Copilot is also used, but for certain tasks, Claude appears to deliver more accurate code.


Agentic AI with human control

The point about deterministic versus non-deterministic also touches on the current trend of agentic AI. In agentic AI, LLMs are essentially used to choose actions. Harris distinguishes between applications for “low-risk personal productivity” and “high-risk decisions” in this type of artificial intelligence. SAS focuses solely on the latter category for agentic AI, because that is where its customers traditionally operate, in areas such as fraud, life sciences, banking, and insurance.

In precisely these industries, it is important to use agentic AI only when it is completely watertight. The LLMs used for agentic AI must explicitly avoid making decisions themselves. They should function as an interface to reliable, deterministic systems. “We don’t ask large language models to infer. We ask them to summarize the results of other systems that actually make determinations.”

From that perspective, SAS cannot afford to use agentic AI that occasionally makes mistakes on its own. “You can’t be right 90 percent of the time. You have to be 100 percent right,” Harris emphasizes. Many trusted AI systems, often based on machine learning, are already running in customer environments. By adding a generative layer on top of that for natural interaction, you build a reliable agentic workflow.
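The division of labor described here can be sketched as follows. This is an assumption-laden illustration rather than SAS's architecture: `fraud_rule` stands in for a trusted deterministic system, and `summarize` is a placeholder where a real LLM call would go. All names and thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    transaction_id: str
    flagged: bool
    rule: str


def fraud_rule(transaction_id: str, amount: float, country_mismatch: bool) -> Decision:
    """Deterministic system of record: it alone makes the determination."""
    if amount > 10_000 and country_mismatch:
        return Decision(transaction_id, True, "high amount with country mismatch")
    return Decision(transaction_id, False, "no rule triggered")


def summarize(decision: Decision) -> str:
    """Placeholder for the generative layer: it only rephrases an existing decision."""
    status = "flagged" if decision.flagged else "not flagged"
    return f"Transaction {decision.transaction_id} was {status} ({decision.rule})."


def answer_user(transaction_id: str, amount: float, country_mismatch: bool) -> tuple[Decision, str]:
    """Return the authoritative decision together with its natural-language summary."""
    decision = fraud_rule(transaction_id, amount, country_mismatch)
    return decision, summarize(decision)
```

Returning the `Decision` object alongside the text keeps the deterministic result available for auditing, regardless of how the summary is worded.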

Software development is a blueprint

If we go back to why SAS itself uses AI in software development, we see that choice as closely related to Harris’ deterministic narrative. The software development process consists of clear steps: design, build, verify, and validate. AI can generate test suites for verification and validation, after which the code is iteratively improved until all tests pass.
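The generate, verify, and iterate loop described above can be sketched in a few lines. This is a simplified illustration, assuming candidates arrive as ready-made functions; in practice each candidate would be a new AI-generated revision. The names `passes_all` and `iterate_until_green` are hypothetical.

```python
from typing import Callable, Iterable, Optional

Candidate = Callable[[int, int], int]
Case = tuple[tuple[int, int], int]  # (arguments, expected result)


def passes_all(candidate: Candidate, cases: list[Case]) -> bool:
    """Verification step: run every case and treat exceptions as failures."""
    for args, expected in cases:
        try:
            if candidate(*args) != expected:
                return False
        except Exception:
            return False
    return True


def iterate_until_green(candidates: Iterable[Candidate], cases: list[Case]) -> Optional[Candidate]:
    """Try candidates in order and return the first one that passes every case.

    Returns None when no candidate passes, which signals that a human
    needs to step in.
    """
    for candidate in candidates:
        if passes_all(candidate, cases):
            return candidate
    return None
```

The test suite, not the generator, decides when the work is done, which is what makes the loop verifiable.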

SAS wants to apply the same approach to other domains. “We are going to understand why software development shows such a strong ROI and translate that principle to sectors such as life sciences, banking, and insurance,” Harris predicts. For a life sciences organization, this could mean describing a clinical protocol (design), using AI to check the statistics (build), verifying against protocols and standards, and finally validating the complete report.

The challenge is that these types of verification and validation frameworks often do not exist in business domains. “In software, you have unit tests and functional tests. We don’t have those in business,” Harris acknowledges. But as agentic AI advances, those testing frameworks will have to be developed in order to iterate code and content in a way that is verifiable and validatable.

Domain-specific AI and synthetic data

SAS is also strongly committed to domain-specific AI. The company previously introduced new Viya innovations, including Intelligent Decisioning, which allows users to build controlled agentic workflows. This tool is already being used in banking and healthcare, where customers need strict guardrails.

Another important pillar is synthetic data. Following the acquisition of Hazy in 2024, SAS has significantly expanded its synthetic data capabilities. The company is working with a major tech player on battery detection scenarios. “Our synthetic data is world-class. We show customers how correlated the synthetic data is to real-world data,” says Harris.
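One common way to compare synthetic data with real data is to check whether the relationships between columns are preserved. The sketch below is not SAS's method. It assumes Pearson correlation as the measure, and the function names and column names are hypothetical.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation of two equally long columns (assumes non-zero variance)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def correlation_gap(real: dict[str, list[float]], synthetic: dict[str, list[float]]) -> float:
    """Largest absolute difference in pairwise column correlation between two datasets.

    Both datasets are assumed to share the same column names.
    """
    columns = sorted(real)
    gap = 0.0
    for i, a in enumerate(columns):
        for b in columns[i + 1:]:
            diff = abs(pearson(real[a], real[b]) - pearson(synthetic[a], synthetic[b]))
            gap = max(gap, diff)
    return gap
```

A gap close to zero suggests the synthetic data reproduces the pairwise structure of the real data. It says nothing about privacy or about higher-order relationships, which would need separate checks.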

That confidence goes further: models built on synthetic data sometimes perform even better than those built on real data, because synthetic data can include more scenarios and representations. It also solves a major problem in regulated sectors, where sensitive data cannot be widely used for AI training. By generating synthetic data without personal information, more people can work with it and achieve breakthroughs.

Digital twins as the next step

Finally, Harris mentions digital twins as an important focus for 2026. The work SAS did with Georgia-Pacific, Epic Games, and Unreal Engine has generated enormous interest. “Every customer who sees our digital twin strategy immediately starts fantasizing about a version for their own company,” says Harris.

In healthcare, for example, SAS is working on computer vision models for highly regulated environments, such as sterilization facilities. By scanning entire facilities and building digital twins, the company can create computer vision models that would otherwise not be feasible. These models are meant to ensure that nothing is overlooked in critical processes.

SAS’s message for 2026 is clear in that regard. AI is not an end in itself. It is a category of possibilities, of which generative AI and agentic AI are just two examples. Success requires the right balance: knowing when to use AI, when to opt for deterministic machine learning, and, above all, when to ignore AI altogether.