Is the future of AI development open or closed? Intel and Nvidia have both tackled that question and given opposite answers.

Many developers use TensorFlow, an AI platform originally developed by the Google Brain team. It covers the entire workflow, from data collection to training and inference. For peak performance, AI workloads should run on GPUs, which use thousands of cores to perform enormous numbers of calculations simultaneously. Ideally, TensorFlow would be a kind of “AI Switzerland,” where end users get optimal support regardless of the hardware they choose.

In practice, however, support for Nvidia GPUs is by far the best. TensorFlow, for example, works seamlessly with CUDA and cuDNN: respectively, Nvidia’s GPU programming API and its library of deep learning primitives, which together get the most out of Nvidia hardware. Both are closed source and rely on explicit integration. Intel GPUs are supported through the open-source Intel Extension for TensorFlow, while AMD users, who rely on the likewise open-source ROCm, tend to receive support only for a TensorFlow version that is months old.

This is one example of many: Nvidia almost always enjoys the best support for GPU acceleration. The same holds for AI workloads specifically, which currently require GPUs to run effectively. This is largely because Nvidia has been working on CUDA, its API for GPU acceleration, since 2006. CUDA is the de facto standard in the software industry for these workloads and runs exclusively on Nvidia hardware. Because other vendors, for the most part, paid little attention to this area over the past decade and a half, Nvidia attained its powerful position in data center GPUs largely unchallenged.

Nvidia AI: a closed platform for everyone

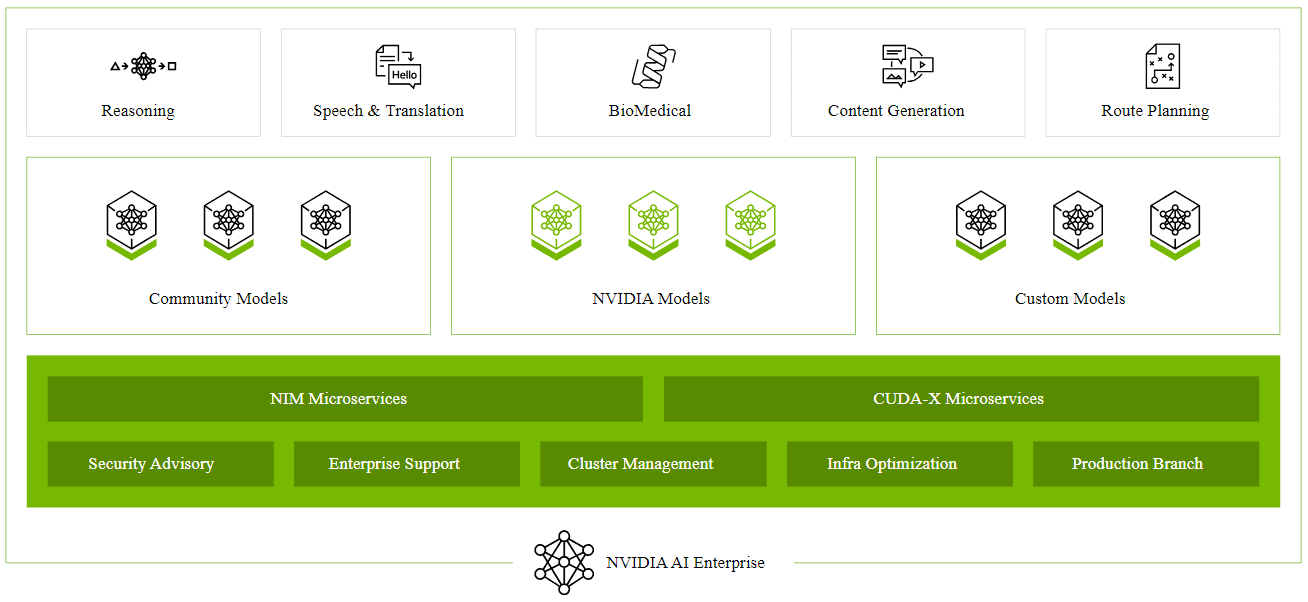

Nvidia has grander plans than integrating with others, however. The company describes its Nvidia AI Enterprise as the “operating system” for enterprise AI (for now, Nvidia itself still puts the term in quotation marks). It offers access to community models, custom variants and Nvidia’s own models. For generative AI, organizations can build, fine-tune and secure chatbots through a comprehensive set of microservices.

Although organizations get wide latitude to deploy partner services, Nvidia is increasingly pushing customers toward its own all-in-one solution. That should make it increasingly attractive to turn an entire server farm into an “AI factory,” as Nvidia CEO Jensen Huang envisions. Currently, only the very largest tech companies can consider such an investment; they in turn let customers access this infrastructure as a cloud service.

AI developers can sample Nvidia AI Enterprise for free, but according to AWS, the average price for regular customers is just over $100 per hour per instance. For that, organizations get access to a wide range of services. For example, foundation models can be trained for specific purposes with Nvidia NeMo, security is monitored continuously, and frameworks and tools are available for all kinds of AI applications.

The advantage for customers: a single party takes care of everything. In return, a company commits to the vision Nvidia is pursuing (or “perspective,” as CEO Huang would call it). Eventually, Nvidia’s AI platform should even let users program in plain English. The downsides: lock-in, guaranteed high prices and likely long lead times for the latest hardware.

The backlash from Intel and co.

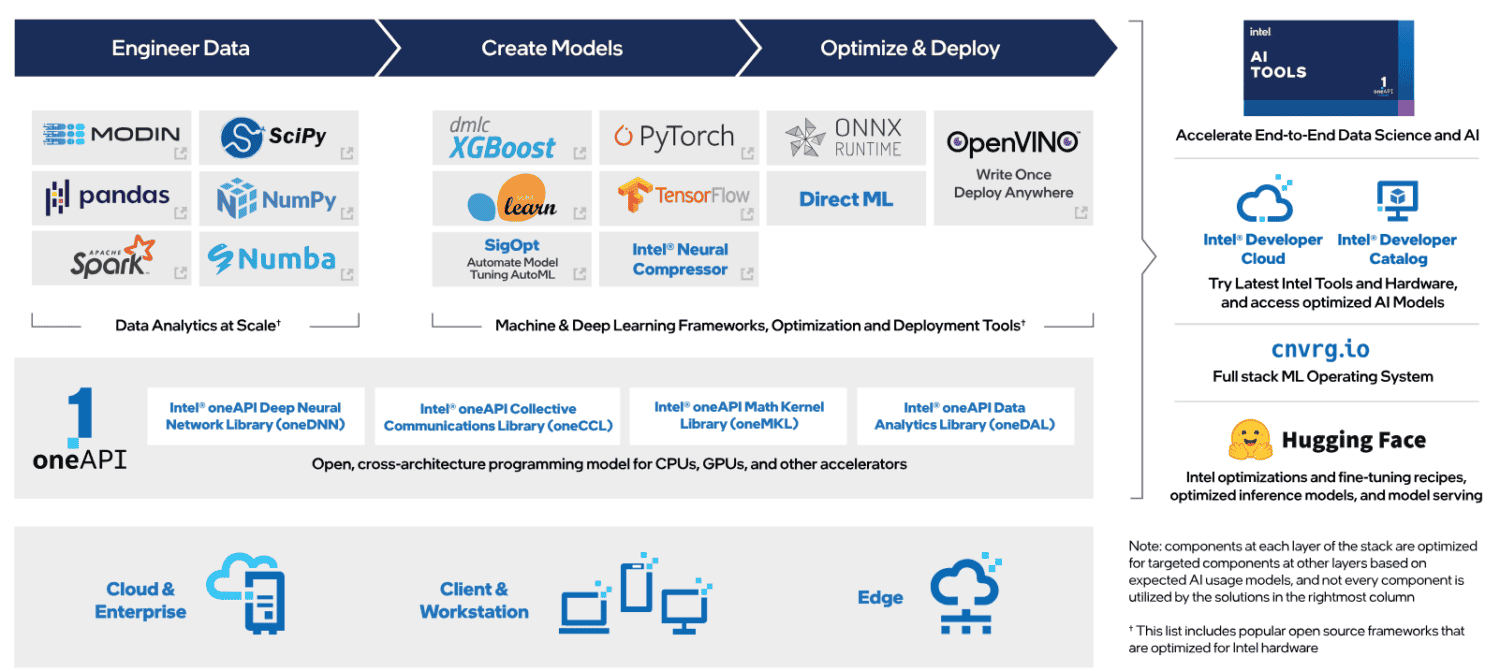

When Intel presented its new Gaudi 3 chip at Intel Vision this week, it called the chip the “leading AI alternative.” Clearly, the company is fully aware that any challenger plays the outsider against the incumbent Nvidia. Still, Intel believes it has found an asset, especially for the long term. In a conference call after his Vision keynote, CEO Pat Gelsinger emphasized that AI should develop on an open basis, which he believes is what customers are looking for. Using existing standards, free APIs and open-source toolkits, Intel and others aim to give customers the freedom to choose how they accelerate AI.

Central to this approach is the oneAPI toolkit, which Intel co-developed in 2019 but which is not exclusive to the company. Its originators explicitly tout it as a CUDA alternative. In an interview with VentureBeat, Sachin Katti, SVP of the Network & Edge Group at Intel, explained the advantage of this approach: “Unlike CUDA, oneAPI and oneDNN from Intel, which is the equivalent layer, is in UXL from the Linux Foundation. It’s completely open source. Multiple companies contribute to it. There’s no restriction on its use. It’s not tied to a particular hardware. It can be used with many pieces of hardware. There are multiple ecosystem players contributing to it.”

In short, oneAPI offers the freedom that Nvidia withholds from CUDA. Incidentally, oneAPI also supports Nvidia chips. On top of that, it can leverage the AI capabilities of CPUs, which, while much slower than GPUs, are quite suitable for AI workloads without high performance requirements.

Intel’s position is not purely altruistic: as an AI challenger, it is using every tool at its disposal to compete with Nvidia. As a result, servers with Intel Xeons are already useful for AI development, and an upgrade to Intel GPUs should then be frictionless, because Intel’s OpenVINO toolkit automatically chooses the most suitable hardware available in a computing environment.
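To make the idea of automatic hardware selection concrete, here is a minimal, hypothetical Python sketch. It does not use OpenVINO and does not reproduce its actual selection logic; the function `select_device`, the fixed priority order and the device names (modeled on names such as “CPU” and “GPU.0”) are assumptions for illustration only. In OpenVINO itself, this behavior is requested by compiling a model for the “AUTO” device rather than naming a specific one.

```python
# Hypothetical sketch of priority-based device selection.
# NOT OpenVINO's API or its real heuristics; names and ordering are assumed.
from typing import Iterable, Sequence

# Assumed ranking, best first. Real toolkits weigh many more factors.
DEFAULT_PRIORITY: Sequence[str] = ("GPU", "CPU")


def select_device(available: Iterable[str],
                  priority: Sequence[str] = DEFAULT_PRIORITY) -> str:
    """Return the highest-priority device found in `available`.

    Device names may carry an index suffix such as "GPU.0"; only the part
    before the first dot is compared against the priority list.
    """
    devices = list(available)
    for kind in priority:
        for device in devices:
            if device.split(".", 1)[0] == kind:
                return device
    raise RuntimeError(f"no supported device among {devices}")


if __name__ == "__main__":
    # A Xeon-only server today...
    print(select_device(["CPU"]))           # CPU
    # ...and the same application code after an Intel GPU is added.
    print(select_device(["CPU", "GPU.0"]))  # GPU.0
```

The point of the design is that application code names no device: adding a GPU changes what is available, and the selection step picks it up without code changes, which is the frictionless upgrade path the article describes.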

No one likes dependency

In the short term, little will change about Nvidia’s dominance. Developers know its software well, and the only real constraints are the limited availability of the hardware and the resulting sky-high prices. Just about every AI-related software package is optimized for Nvidia. The alternatives are still in their infancy, and parties like Intel and AMD have too little share of the data center GPU market to truly challenge Nvidia. Still, the tide may be turning.

First, oneAPI, OpenVINO and AMD’s ROCm also work on Nvidia chips. Second, the hyperscalers AWS, Microsoft and Google Cloud are all contributing to a generalized AI offering that supports all kinds of hardware; they too have no interest in leaving Nvidia’s dominance untouched. Parties like Databricks, which hope to preserve their own role in the AI development process, are now forging partnerships not only with Nvidia but also with Intel. The more companies drive this interoperability forward, the more freedom customers will have to choose the tools they want to use.
