After Nvidia presented its new Blackwell architecture for AI computing, the Techzine mailbox was flooded with press releases from companies announcing that they will use the chips or otherwise partner with Nvidia. Nvidia is increasingly positioning itself as the preferred provider of AI computing power, and everyone wants to make it clear that they, too, are friends with the most popular kid in class. But can Nvidia meet the huge demand for its GPUs?

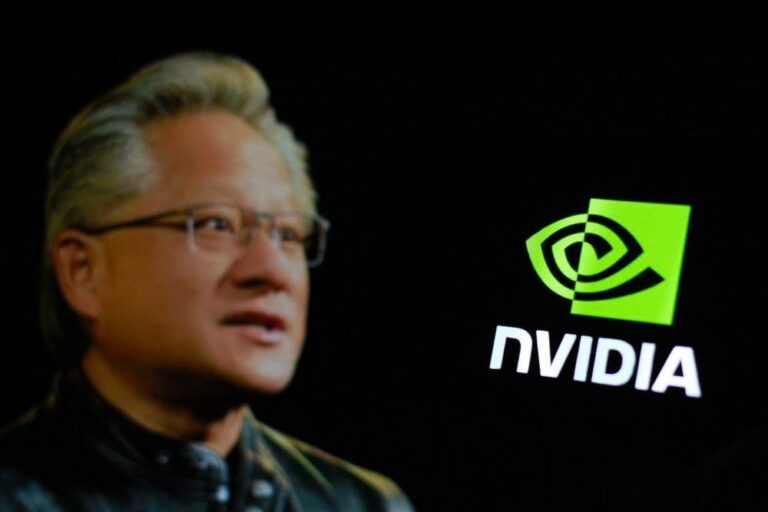

Nvidia CEO Jensen Huang presented the new Blackwell chip at the company's own GTC 2024 event. He promised up to 30 times better performance for inference workloads on LLMs (large language models), the stage in which trained generative AI models actually produce their output.

He also announced partnerships with companies in industries as diverse as data processing, automotive, robotics and healthcare. In doing so, Nvidia is claiming a key role for itself in the AI arms race, which led SiliconANGLE analyst Dave Vellante to describe GTC 2024 as the “most important event in the technology industry.”

It is important to remember that while Nvidia is a key player in this development, it is not the only one. Chipmaker TSMC and chipmaking equipment supplier ASML are indispensable to the manufacturing process. However, the PR spotlight is mostly on Nvidia at the moment, which is why so many companies are shouting their partnership with the Santa Clara-based GPU giant from the rooftops.

Announced partnerships and applications

For example, Amazon Web Services (AWS) announced that it is adopting the new Blackwell platform. This will allow its customers to run real-time inference on multi-billion-parameter language models faster and more cost-efficiently. AWS will also equip Project Ceiba, an AI supercomputer running entirely on AWS, with more than 20,000 Grace Blackwell (GB200) chips.

Google Cloud and Microsoft are taking a similar route, integrating Grace Blackwell platforms and Nvidia's NIM (Nvidia Inference Microservices), respectively, into their cloud infrastructure.

SAP plans to use Nvidia chips to integrate generative AI into its cloud solutions, as does Oracle. In healthcare, Microsoft Azure will leverage Nvidia’s Clara suite of microservices and DGX Cloud to accelerate innovation in clinical research and healthcare delivery. IBM Consulting announced it will use Nvidia’s AI Enterprise software stack to help customers solve complex business issues.

Then there is Snowflake, which is expanding its partnership with Nvidia to integrate NeMo Retriever. This allows its customers to improve the performance of chatbot applications by linking them to enterprise data. Other data cloud providers such as Box, Dataloop and Cloudera announced that they will use Nvidia microservices to optimize their customers' Retrieval-Augmented Generation (RAG) pipelines and to integrate those customers' own data into generative AI applications.


In Techzine's mailbox, we also found press releases from NetApp, Dell, Supermicro, Schneider Electric, HPE, Pure Storage and Cognizant, to name just a few. Applications range from “off-the-shelf” generative AI SuperClusters to reference designs for AI data centres and AI-assisted medical research with the help of Nvidia BioNeMo.

Nvidia takes full advantage of AI explosion

CEO Jensen Huang emphasized that, as far as Nvidia is concerned, “accelerated computing” is the future. Accelerated computing (as opposed to general-purpose computing) is Nvidia's term for running demanding workloads on specialized hardware because a powerful CPU alone is not enough. GPUs with thousands of cores can process AI calculations in parallel at very high speed. Nvidia, traditionally a manufacturer of such hardware, has benefited more than anyone from the explosion in AI applications.

With the new chip series, however, it is not only raw computing power that matters but also the scalability of the architecture. Companies can simply replace existing H100 chips with the B200, which should make the new chips easier to adopt and deploy.

Still, the availability of the B200 remains a question mark because of its manufacturing complexity. The press releases in which the companies mentioned above announced their cooperation with Nvidia may be an attempt to show enough enthusiasm to be among the first to get their hands on the new chips. Besides enthusiasm, a very old-fashioned willingness to open the wallet will be decisive for a lasting friendship.
