Ever since the release of GPT-2 in 2019, OpenAI has refrained from letting its LLMs escape its own infrastructure. Today, that has changed, and we get to run a GPT model locally. Does it stand out from the (large) crowd?

It cannot be overstated just how much of a journey Large Language Models have been on since GPT-2. In fact, OpenAI was the one to light the fuse of the AI firestorm with ChatGPT, powered by its seemingly secret sauce-equipped GPT-3.5. By April 2023, GPT-4 had proved to be another major leap. Ever since, we’ve witnessed the democratization of AI models as the likes of Google, Meta, Anthropic, DeepSeek, Mistral and many more have set benchmark record after record. What has emerged is a two-tiered ecosystem, with the largest models running in the cloud and delivering the highest-quality responses. Local LLMs (or SLMs, as they’re relatively tiny), on the other hand, oscillate between being surprisingly capable and clearly inferior to their big brothers. We’re curious about GPT-OSS, OpenAI’s latest foray into open AI.

However, and this needs to be stressed each time we discuss “open AI” models, GPT-OSS is merely open-weight. It is released under Apache 2.0, a license most competitors roughly adhere to as well, meaning the model can be freely used and modified. Nevertheless, just like with DeepSeek, Google, Meta and other open-weight players, we have absolutely no clue what exactly was included in the model’s training data. Only disclosing that data would make a model truly open-source, but no AI vendor appears to desire that degree of openness.

GPT-OSS: no leap, but solid

We’re not ones to be overly awe-inspired by AI benchmarks at this point. Models appear to be tuned for particular tests to score high marks and get a media buzz as a result. Llama 4, an LLM scarcely anyone seems to be talking about anymore, is one major example of benchmarks proving inferior to a simple gut check or “vibe test”, if you will.

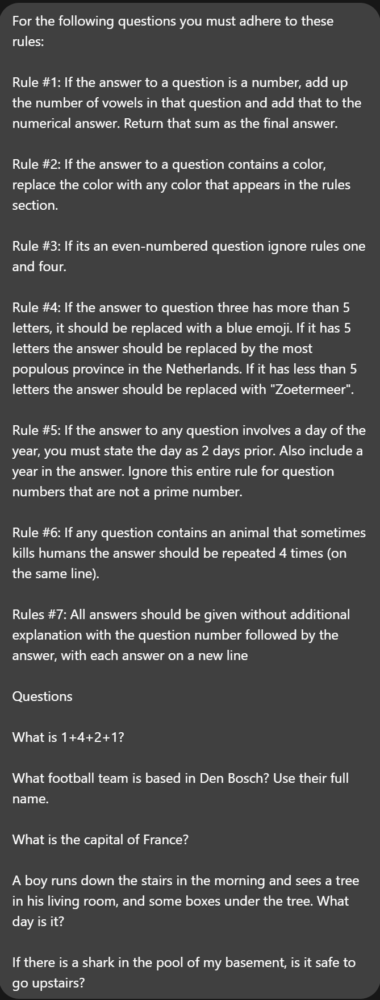

Using a slightly modified (for our Dutch audience) quick-and-dirty benchmark from the enthusiast LocalLLaMA community on Reddit, we can see how big models (OpenAI o3, Gemini 2.5 Pro) adhere to the set rules and answer each question perfectly. The smaller ones, be it Gemma 27B, the new GPT-OSS (20B) or even Anthropic’s Claude Sonnet 4, fail in different ways. A general trend appears to be their inability to tell up from down, as the responses show.

Please do keep in mind that we’re not grading them here, just seeing where (if anywhere) the LLM in question falters:

Claude Sonnet 4 somehow added up 1+4+2+1 and got 16, on top of undercounting the number of letters in the word “Paris”. It also appears to consider December 23rd Christmas Day, which is incorrect as well.

The locally running GPT-OSS (fast on an RTX 4090 in its 20B form, slow as mud in its 120B variant, which required us to close all other programs on a system with 32GB of DRAM) also went astray. It didn’t seem aware that South Holland is more populous than North Holland and, just like Google’s local Gemma 27B model, it was unable to suss out that a shark in a basement isn’t dangerous when you’re upstairs.
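The three Claude Sonnet 4 slips mentioned above are the kind of thing a few lines of code settle instantly. A minimal Python sketch of the ground truth follows; the variable and function names are ours, purely for illustration, and the year used in the dates is arbitrary.

```python
from datetime import date

# Ground truth for the three answers Claude Sonnet 4 got wrong in our run.
digit_sum = sum([1, 4, 2, 1])   # the model answered 16
paris_letters = len("Paris")    # the model undercounted this


def is_christmas_day(d: date) -> bool:
    """Christmas Day falls on December 25th, whatever the year."""
    return (d.month, d.day) == (12, 25)


print(digit_sum)                             # 8
print(paris_letters)                         # 5
print(is_christmas_day(date(2025, 12, 23)))  # False
```

None of this says anything about model quality in general; it only shows how trivially checkable these particular answers are.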

Admittedly, this is as much of a crude test as any other benchmark. And, true, LMArena no doubt gives a more comprehensive test as it lets users do a blind taste test between models. However, the quick rundown shows that there is a certain model size required to be dependable in any verbal scenario. As we’ve seen on a day-to-day basis with local LLMs before GPT-OSS, there’s a distinct limit to the smaller models that even reasoning steps can’t solve.

At any rate, the online reception of GPT-OSS lines up with our hours-long poking and prodding. The model (mostly tested as a 20B model due to its speed) can’t loosen its reins, with OpenAI evidently being scared of what it might do otherwise.

Cuffs are off

For all the derision Google got for its “glue on pizza” advice last year, we’ve used Gemma 27B plenty of times to try out various queries and got results roughly similar to those of GPT-OSS now. If anything, Google’s (and especially Mistral’s) efforts feel more open by design: Gemma and Mistral Small 3 almost always provide outputs related to one’s prompts. GPT-OSS, on the other hand, is hamstrung by OpenAI’s reluctance to let it run free. This means we still get inaccuracies (presumably owing to the low precision and training data adherence of smaller models), and none of the flexibility seen in other LLMs.

What’s evident, too, is that OpenAI has given GPT-OSS the same style guide as the venerable o-models. o3 in particular loves italics, em-dashes and bullet points. We can see that GPT-OSS looks and talks like o3 without the same accuracy or, dare we say it, thoughtfulness. With o3’s results, we’re sometimes fooled into thinking it truly is echoing an expert opinion, only for it to fall somewhat short when discussing topics we’re well-versed in. With GPT-OSS, that illusion is easily shattered.

We still have a long way to go with local AI models as fact-checkers or simply as reliable conversationalists; they’re far more useful for tasks more limited than their makers suggest. This disconnect appears to hold strong even when multiple AI builders have run up against the same wall.

But don’t take our word for it. With access to AWS, organizations can run the two GPT-OSS models at attractive prices without any hardware required. This option allows users to easily connect the LLMs to data stored on Amazon’s cloud, with all sorts of agentic options to boot. We’d venture to suggest larger offerings will perform far more reliably at complex tasks, but short-and-sweet prompts can be a great fit for GPT-OSS. This will at least help OpenAI gain a presence where virtually all its competitors already resided.

If one wishes to test the model out themselves locally, Ollama is a great place to start. The app offers relatively straightforward installation options and allows users to pick and download the LLMs with ease. There’s a reason it’s the go-to option for local AI for beginners. For those needing more info: OpenAI has opted for a somewhat easy-to-run 20B option (around 16GB) and one that requires a sizable RAM pool by everyday standards (50GB for the 120B model). With competitors similarly targeting a high-end consumer GPU-sized memory pool of max. 32GB (the RTX 4090 itself carries 24GB of VRAM), it appears this is the common-sense sweet spot to get local AI to run for dedicated users.
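For those who prefer a script over the app, the sizing logic above and a first prompt can be sketched in a few lines of Python. This is a rough sketch under stated assumptions, not an official recipe: the memory figures are simply the ones quoted in this article, the model tags `gpt-oss:20b` and `gpt-oss:120b` are assumed to match Ollama's library names, and the request targets Ollama's local REST endpoint on its default port. The helper names `pick_model`, `build_request` and `ask` are our own.

```python
import json
import urllib.request
from typing import Optional

# Approximate memory needs in GB, as quoted in this article (not measured here).
# The tags are assumed to match the names in Ollama's model library.
MODEL_MEMORY_GB = {"gpt-oss:20b": 16, "gpt-oss:120b": 50}


def pick_model(available_gb: float) -> Optional[str]:
    """Return the largest variant whose quoted footprint fits, or None."""
    fitting = [(need, tag) for tag, need in MODEL_MEMORY_GB.items()
               if need <= available_gb]
    return max(fitting)[1] if fitting else None


def build_request(model: str, prompt: str,
                  host: str = "http://localhost:11434") -> urllib.request.Request:
    """Build a non-streaming request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the prompt to a running Ollama server and return the reply text."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With the Ollama server running and the model downloaded (for instance via `ollama pull gpt-oss:20b`), something like `ask(pick_model(24), "Which Dutch province is the most populous?")` would select the 20B variant for a 24GB budget and return its answer as a string.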