8223 Router with a throughput of 51.2 Tbps makes it possible to run AI workloads across multiple data centers without any loss of performance.

Almost all readers will be familiar with the terms scale up and scale out. In short, scale up means adding extra capacity for workloads within a rack, while scale out means scaling horizontally by adding extra racks.

With the announcement of the 8223 Router and the Silicon One P200, however, Cisco is focusing on a relatively recent addition to the scaling vocabulary, namely scale across. The idea behind this is that AI models are becoming so large that they can no longer run within a single data center. As Martin Lund, EVP Common Hardware Group at Cisco, put it in conversation with us: “The demand for compute is greater than the capacity that can be delivered within a single data center. We can’t build data centers big and fast enough.”

Network challenges of scale across

However, scale across as a solution for running enormous AI workloads also presents its own challenges. How do you ensure that you make optimal use of all available compute when you connect multiple data centers? “That’s not an easy problem to solve,” says Lund. Certainly not if the goal is to do so as efficiently as possible.

“Of course, you can resend packets if they haven’t arrived, but AI training doesn’t stop there,” he continues. They use checkpointing to work optimally. A suboptimal connection ultimately also causes valuable GPU cycles to be lost and extremely expensive GPUs to sit idle from time to time. The idea is that this must be prevented at all times.

Deep buffering with Silicon One P200 in 8223 Router

Cisco believes that it has solved the above problem with the new Silicon One P200 chip. This chip offers a throughput of 51.2 Tbps (20 billion packets per second) and can scale up to a total bandwidth of up to 3 exabits per second. That should be more than enough for the time being.

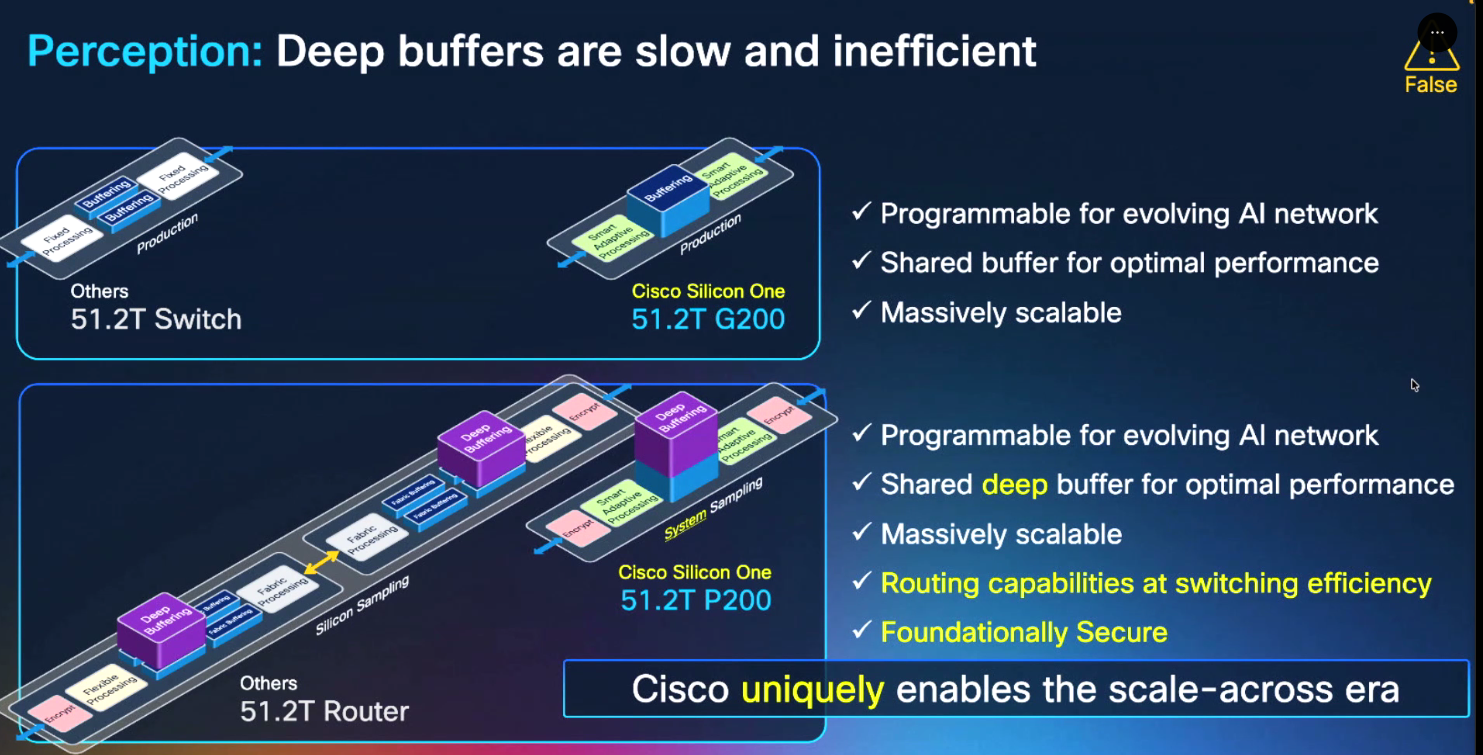

One of the distinguishing features of the Silicon One P200 chip in the new 8223 router (available in OSFP and QSFP variants) is deep buffering. It is not so much the fact that the chip uses it. Deep buffering itself is not new and is also offered in products from other suppliers. According to Lund, what makes the P200 unique is that it does this much more efficiently. “We have a density that is twice that of competing solutions,” he says. The idea is that the deep buffers do not cause the delays that other solutions do.

It is also worth mentioning that Silicon One is a unified chip. This means that routing and switching take place within a single chip. According to Lund, this results in enormous energy savings. He says that with Silicon One, you get routing and switching at the power consumption we usually associate with switching alone. The number of products needed to connect data centers is many times smaller with Silicon One than when building anon-unified stack .

Built-in security

The performance of the Silicon One P200 is certainly impressive on paper. When you consider that Cisco offers this performance with built-in security (MACsec and IPSec) and that the chip is ready for post-quantum cryptography (PQC), the performance is even more impressive. According to Lund, Quantum Key Distribution (QKD) will also be available in the near future.

Finally, during our conversation, Lund points out the telemetry that is available as standard on systems that use the new Silicon One P200. “All the telemetry you could possibly need is built in,” he says. As an example, he mentions IPM, which can be used to perform hardware-based latency measurements between endpoints.

Open platform

One of the things Cisco is emphasizing quite a bit with this launch is the fact that the new Silicon One P200 systems are open. “Customers can buy just the chip and build an entire product around it themselves, or buy the complete product and run SONiC on it, or buy the complete product with Cisco’s own IOS-XR on it,” says Lund. This offers various options for deployment in different locations.

Note that this openness is not so much because Cisco necessarily chooses it. Ultimately, of course, it does, but mainly because customers (in the case of the P200, these are mainly hyperscalers and similar parties) are asking for it. Hyperscalers develop a lot of software for their own data centers and run a lot on SONiC (although they all give it their own name). In other places, a complete turnkey rollout of a Silicon One P200-based system may be preferable.

When asked how SONiC and IOS-XR compare, Lund is clear. Cisco’s software offers many more options than SONiC. However, that does not necessarily make it the best option. Customers must decide for themselves based on each use case.

Silicon One P200: powerful addition to growing range

All in all, the 8223 router based on the Silicon One P200 is another new member of the Silicon One family that Cisco has built over the years. With the enormous throughput and inherent efficiency of the Silicon One design, the P200 is helping to enable the next big step in AI. Overall, this means that Cisco now has an answer to multiple scalability challenges. This makes Cisco (somewhat under the radar) a major and important player in the AI economy. The fact that Cisco is already supplying the new P200 chip to hyperscalers before its launch suggests a bright future.

Read also: Cisco throws next punch in battle for world’s smartest switches