Not only can GPT-4 be deployed as a content moderation tool, it is also said to perform certain tasks better than humans. GPT-4 developer OpenAI bases this conclusion on an internal test.

OpenAI conducted an internal study on the differences between GPT-4 and human content moderators. It found that learning new content moderation rules, implementing them and extracting feedback to tighten the rules is considerably faster using GPT-4. With human moderators, this cycle can take several months, while the AI model reduces it to just hours, OpenAI points out.

However, the AI model still has a limitation: it only moderates better than human moderators who received “light training”. Experienced and heavily trained human moderators are still better than GPT-4.

Multiple steps

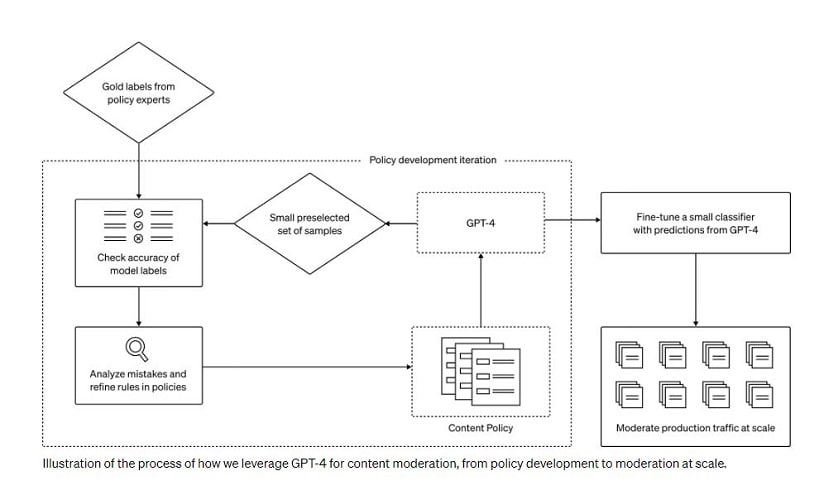

Training GPT-4 for content moderation involves several steps. First, a dataset must be created that contains examples of malicious content. After this, the AI model must be instructed to tag each malicious piece of content with a label describing why it violates the moderation rules. Furthermore, the labels created by GPT-4 should be checked for errors and problems.
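The steps above can be sketched in a few lines of Python. This is a minimal illustration, not OpenAI's implementation: the policy text, the function names and the `stub_classify` stand-in for a real GPT-4 call are all assumptions made for the example. Comparing the model's labels with labels from human reviewers is one possible way to do the final check.

```python
# Hypothetical policy prompt; a real moderation policy would be far more detailed.
POLICY = "Label the text as 'violation' or 'ok' and give a one-line reason."

def label_examples(examples, classify):
    """Ask the model (any callable) for a (label, reason) pair per example."""
    return [classify(POLICY, text) for text in examples]

def find_disagreements(examples, model_labels, human_labels):
    """Return the examples where the model's label differs from the human label."""
    return [
        (text, model, human)
        for text, model, human in zip(examples, model_labels, human_labels)
        if model[0] != human
    ]

def stub_classify(policy, text):
    # Stand-in for a GPT-4 call (assumption): flags any text containing "attack".
    if "attack" in text.lower():
        return ("violation", "mentions an attack")
    return ("ok", "no rule matched")

# Step 1: a small dataset of examples, with labels from human reviewers.
examples = ["How do I attack this math problem?", "Have a nice day"]
human_labels = ["ok", "ok"]

# Step 2: let the model label each example and explain why.
model_labels = label_examples(examples, stub_classify)

# Step 3: check the model's labels for errors against the human labels.
disagreements = find_disagreements(examples, model_labels, human_labels)
for text, model, human in disagreements:
    print(f"{text!r}: model={model[0]} ({model[1]}), human={human}")
```

In this sketch the stub flags a harmless sentence, so the disagreement list points the reviewer to the case where either the model or the rule wording needs another look.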

Other benefits

In addition to performing moderation faster, GPT-4 also provides the ability to further define and tighten the rules. When the model makes a moderation error, it can reveal deficiencies in the moderation rules, for example that they are too vague.

Anyone with access to the GPT-4 API can develop a content moderation system based on the information from the study.
