Nvidia has unveiled a proof of concept for designing chips with generative AI. It developed a chatbot, a code generator and an analysis tool built on the technology. Nvidia research director Mark Ren says LLMs could eventually contribute to every step of the design process.

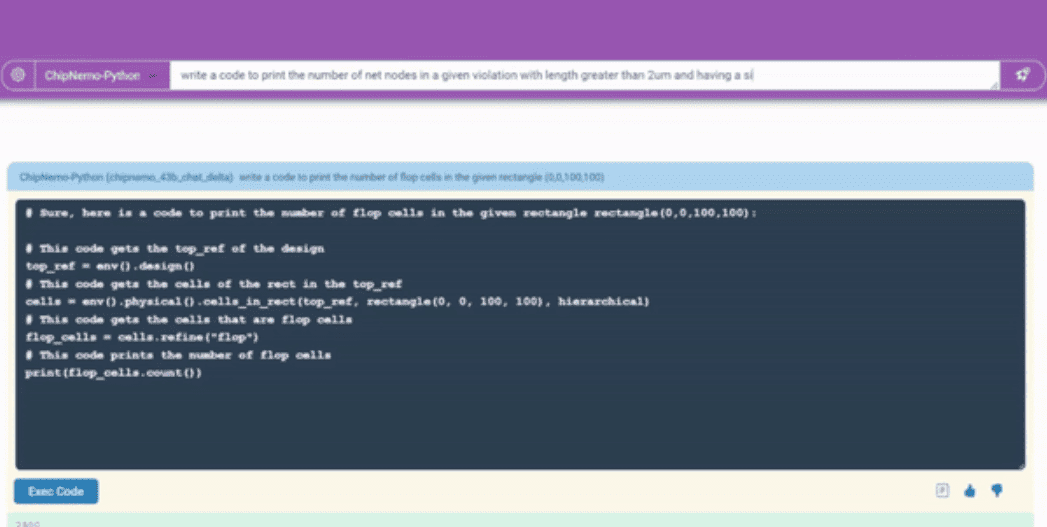

Nvidia polled its own staff to determine the ways in which LLMs could contribute to chip design. The result so far consists of three components, all under the name ChipNeMo: a chatbot that answers questions about GPU architecture and points to useful documentation, an AI tool that generates 10 to 20 lines of code, and an analysis tool that automates bug descriptions.

“This effort marks an important first step in applying LLMs to the complex work of designing semiconductors,” said Nvidia Chief Scientist Bill Dally. “It shows how even highly specialized fields can use their internal data to train useful generative AI models.”

Software and hardware

The company will be hoping that other organizations follow suit: not only does it make the GPUs that power AI, it also produces software for adapting AI models to specific purposes.

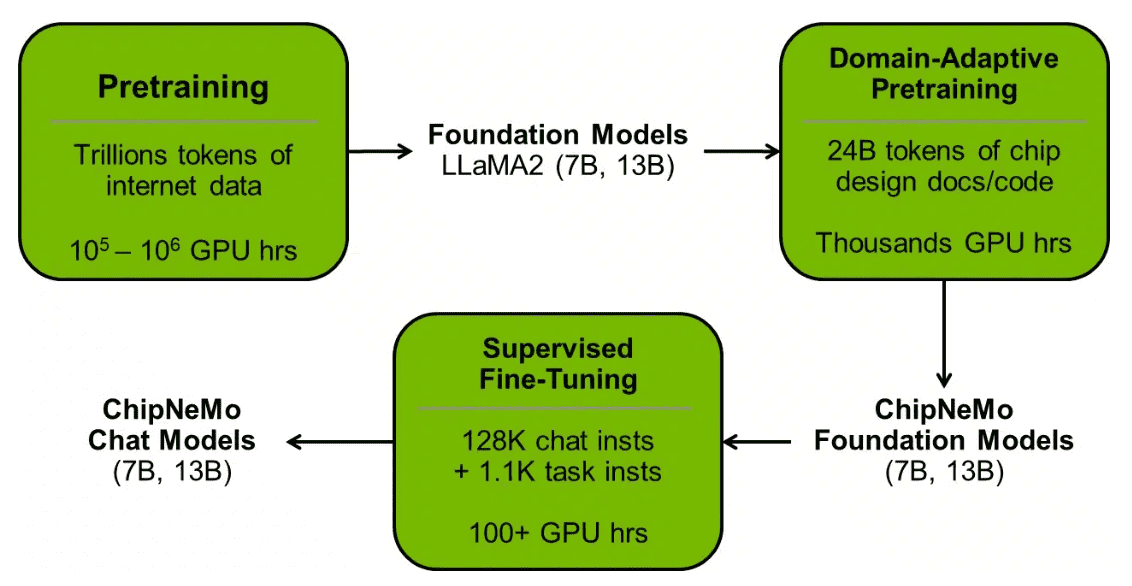

For example, the research used Meta’s LLaMA2 foundation models in two sizes, with 7 billion and 13 billion parameters. These models had already been trained on trillions of tokens of Internet data; tokens are the “words and symbols in text and software,” as Nvidia puts it. The company then trained these models further on domain-specific data using its own Nvidia AI Enterprise platform to produce the ChipNeMo foundation models. These formed the basis for the final LLMs that users can converse with.
