Anthropic introduces Claude Opus 4.6, an AI model that is quite capable in coding tasks. This is thanks to improved planning, a context window of 1 million tokens, and the new adaptive thinking feature.

Anthropic can present several benchmarks showing that the model outperforms its competitors. Claude Opus 4.6 scores highest on Terminal-Bench 2.0. This benchmark assesses agents based on their capabilities in terminal environments. To do this, Terminal-Bench 2.0 subjects each agent fed into the benchmark to a number of standard tasks. Opus 4.6 scores 65.4. The previous Anthropic version, Opus 4.5, scored 59.8. GPT-5.2-codex, released in December, comes closest at 64.7.

It also performs better than its competitors on Humanity’s Last Exam for multidisciplinary reasoning, with a range of 40-53.1 versus 36.6 to 50 for GPT-5.2 Pro. GDPval-AA, which demonstrates a model’s capability for knowledge work in the finance and legal sectors, also favors Opus 4.6 (1606 compared to GPT-5.2’s 1462).

New features for developers

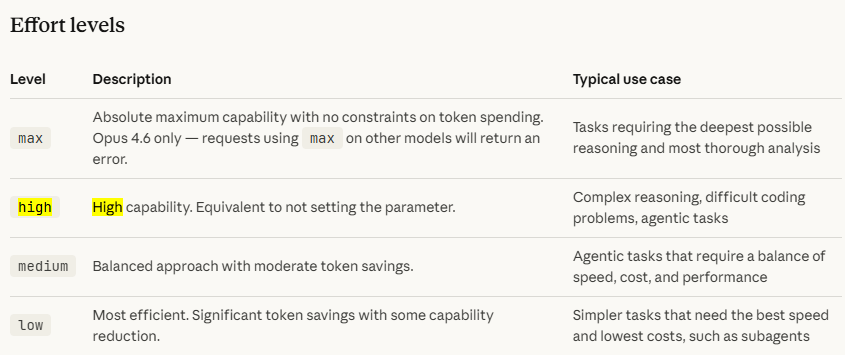

The new adaptive thinking feature gives developers more control over how deeply the model thinks. Whereas previously only extended thinking could be turned on or off, Claude can now determine for itself when more thorough reasoning is useful. Four effort levels (low, medium, high, and max) offer additional flexibility. These levels allow the user to determine how many tokens Claude uses for a response. High is the default setting, but this can be adjusted. The overview below gives an idea of what is useful in which situations.

Context compaction is another addition. For long-running tasks, Claude automatically summarizes older context when the context window approaches. This allows agents to work longer without reaching limits. The 1 million token context is a first for Opus models, although premium pricing applies from 200,000 tokens.

Claude in Excel has been upgraded for more complex tasks. The model now plans ahead, processes unstructured data, and implements changes in a single step. Claude in PowerPoint appears as a research preview for Max, Team, and Enterprise subscriptions. It reads layouts and slide masters to create presentations that match the corporate identity.

In Claude Code, users can now assemble agent teams that work in parallel. This is especially useful for tasks such as codebase reviews that break down into independent work. Pricing remains at $5 per million input tokens and $25 per million output tokens.

Safety and performance

Anthropic’s system card shows that Opus 4.6 scores at least as well as other frontier models in terms of safety. It exhibits little misleading behavior in safety evaluations. It also has the lowest over-refusal rate of recent Claude models, meaning it is less likely to unjustifiably refuse to answer innocent questions.

The model scores 76 percent on the 8-needle 1M variant of MRCR v2, a needle-in-a-haystack test. Sonnet 4.5 scored 18.5 percent on that test. This improvement is intended to counteract “context rot,” the phenomenon whereby performance declines during long conversations. Claude Opus 4.6 is available starting today via claude.ai, the API, and all major cloud platforms.

Tip: Anthropic launches Claude Opus 4.5 and promises an AI breakthrough