IBM wants to make artificial intelligence models more reliable by giving them the ability to say when they are unsure about something. The problem IBM is trying to solve is that AI systems are sometimes overconfident, which can lead them to make extremely unreliable predictions in some situations.

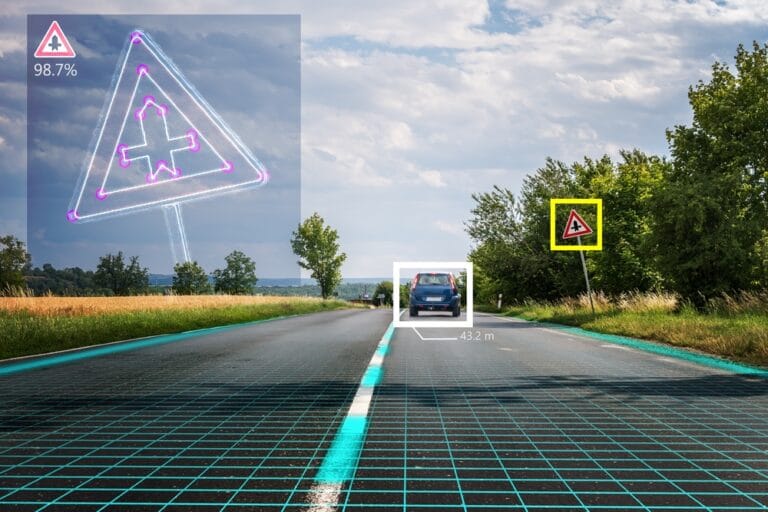

IBM researchers Prasanna Sattigeri and Q. Vera Liao wrote in a blog post that overconfidence can be extremely dangerous. For instance, if a self-driving car mistakes the side of a shiny building or bus for a brightly lit sky, it may fail to brake.

Be humble, AI

With an image like that in mind, it is easy to see how overconfidence can have fatal consequences. To guard against such failures, IBM created the Uncertainty Quantification 360 toolkit, which is available to the open-source community.

The UQ toolkit was announced on Tuesday at the IBM Digital Developer Conference. It is designed to make AI models safer by giving them the equivalent of humility, or “intellectual humility,” as IBM calls it. Its algorithms let a model signal when it is unsure of a prediction.

Improving models

UQ 360 can also be used to measure and improve uncertainty quantification and to streamline development. It comes with a taxonomy and guidance to help developers know which capabilities will work best for specific models. Sattigeri and Liao said that UQ 360 can be used to improve hundreds of types of AI models where safety comes first. A good example is AI that diagnoses medical issues. Catching those issues early matters, and attaching uncertainty estimates to the predictions is a good way to flag cases that a doctor needs to examine further.
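The idea of flagging uncertain cases for a doctor can be illustrated with a minimal sketch. It does not use the UQ 360 API; it is plain Python that assumes a classifier already outputs class probabilities, measures uncertainty with predictive entropy (one common choice among many), and defers to a human when that entropy is high. The function names, the two labels, and the 0.5 threshold are all hypothetical and chosen only for illustration.

```python
import math

def predictive_entropy(probs):
    """Shannon entropy (in nats) of a class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def triage(probs, labels, max_entropy_fraction=0.5):
    """Return (predicted_label, needs_review).

    A prediction is flagged for human review when its entropy exceeds
    a chosen fraction of the maximum possible entropy, log(num_classes).
    The 0.5 default is an illustrative assumption, not a recommendation.
    """
    entropy = predictive_entropy(probs)
    max_entropy = math.log(len(probs))
    needs_review = entropy > max_entropy_fraction * max_entropy
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], needs_review

# Hypothetical model outputs for two cases.
labels = ["benign", "malignant"]
confident = triage([0.97, 0.03], labels)  # low entropy: accepted as is
uncertain = triage([0.55, 0.45], labels)  # near coin flip: sent to a doctor

print(confident)
print(uncertain)
```

Both cases receive the same top label, but only the second is routed to a person, which is the behavior the researchers describe. In practice the threshold would need to be tuned on held-out data, and the probabilities themselves would need to be well calibrated for the flag to be meaningful.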

Given public concerns about the safety of AI and mistrust of the technology, the toolkit could not come at a better time.