Nvidia CEO Jensen Huang knows all too well how lucrative the AI hype is for his company. Still, there is no denying the innovations that artificial intelligence has brought about. According to Huang, these innovations are so impactful that from now on we should talk about “software 3.0.” What does that mean?

It’s not too crazy to think, “3.0? Where was 2.0? And what is software 1.0?” After all, you would expect the long history of software to contain some major turning points, each big enough to warrant an entirely new version name.

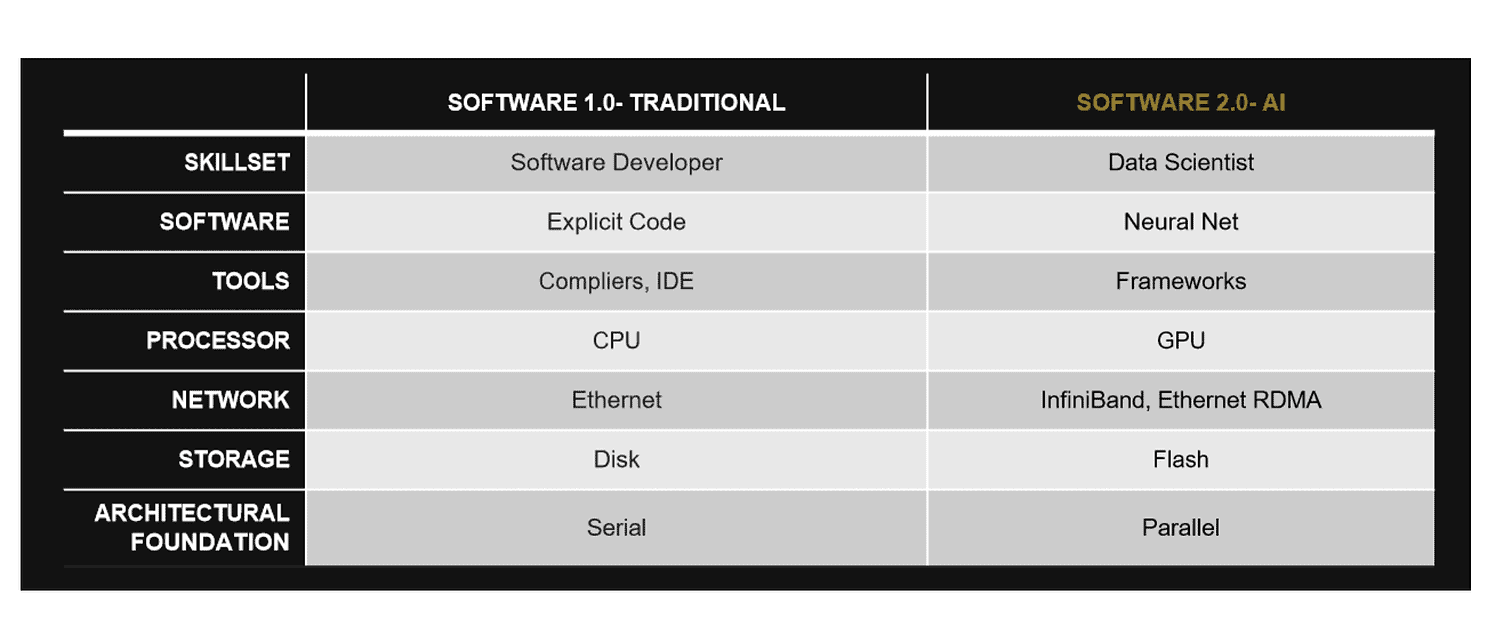

To clarify this, we must recognize that the terminology only makes sense from a historical perspective. We therefore have to start with software 2.0, a term that became popular several years ago. Pure Storage used it back in 2018. At the time, the company explained that the fundamental difference between 1.0 and 2.0 lies in the application of AI. Whereas conventional programming involved a lot of manual work and depended mainly on the capabilities of the CPU, Pure defined 2.0 as a development that puts the GPU at the center. The reasoning was that the data explosion of this century makes it necessary to make sense of all that data through an abstraction layer. With the help of AI, programmers can extract meaningful insights from data and solve problems without writing all those extra lines of code.

Software 3.0: 2.0 in a new guise?

Enter software 3.0, where AI once again takes centre stage. So what’s the difference? Huang thinks the term is necessary because creating and running applications will become as simple as entering a few words into a universal AI translator, with the only remaining hurdle being the choice of an appropriate AI model. “That’s the reinvention of the whole stack. The processor is different, the operating system is different, the large language model is different. The way you write AI applications is different…. Software 3.0, you don’t have to write it at all,” he stated during a fireside chat at Snowflake Summit.


Returning briefly to 2018, when the term software 2.0 was gaining ground, we see that it involved a specific shift in capabilities, hardware requirements and tools. Pure Storage provided us with this overview at the time:

What stands out about Huang’s explanation, then, is how closely it resembles the explanation of software 2.0. For example, data is now central to businesses and government agencies. Think of the customer data that drives the design of web stores, the traffic patterns that inform the construction of new roads, and so on. All of this can increasingly be monitored, yet it is also increasingly difficult to oversee. Hence, data science is taking precedence over software development, and parallel architectures are taking over from serial ones. Everything must be processed simultaneously, and preferably in the same way.

But that was already the case with software 2.0, which has increasingly become a reality. Software developers are deploying AI en masse. Nvidia GPUs are hard to come by because of demand. SSDs are now very affordable, and HDDs are slowly being phased out. So a great deal is already underway. The decisive factor that sets software 3.0 apart from 2.0, however, is that it will require less expertise. Huang speaks of a bright future just over the horizon, and he has a knack for boastful terminology when discussing the capabilities of his products. Yet it is certainly true that today’s generative AI solutions could eventually become a very capable replacement for software development as we know it. We just shouldn’t be too hasty about the time frame.

Expertise

Since the ChatGPT hype took off, we have seen a move toward very specific applications of generative AI that provide assistance within a given product. Examples abound, from security assistants at CrowdStrike to data analytics at Salesforce. These applications already demand less expertise from their users. That is not to say that the engineers at these companies will lose their jobs, but rather that the tools for their tasks will be democratized.

Nvidia benefits from this change because it makes the hardware that is essential to an AI-dependent approach to IT. That does not mean, however, that it will simply continue to supply components. Together with partner Snowflake, for example, it also wants to help secure LLMs, thereby shifting more of its focus to software. With the GPU at the heart of this new form of computing, Nvidia’s ambitions are considerable. After all, while Intel and AMD have also produced software, their business models have ultimately depended on their own CPU hardware. Nvidia wants to be at the centre of computing’s future and a major software player to boot.