When Scott Sherwood drew up the plans, architecture and initial codebase for the TestLodge test case management tool, his team were focused on, strangely enough, software testing procedures. What they now realise would have got them to an even more functional end product more quickly was (and still is) a sharper focus, from the start, on data engineering for analytics, reporting, auditing and assessment. What's more, in a software universe where AI is only as good as the data that is its lifeblood, test case data provisioning itself clearly needs some testing.

What is test case management?

As a core software engineering function that should feature in the development of any enterprise application of a reasonable size, test case management is all about tracking defects through the creation and running of test cases, with those procedures tightly aligned with version control so that all current code is covered by the analysis.

But why is test case management important? Primarily because it can improve software release quality and make more efficient use of a software team's resources (human work hours, compute processing cycles, data storage, ‘big’ data analytics calls and so on), so that the finished software achieves a higher level of user acceptance. And because poor documentation degrades test case management, the data attached to each test case is crucial for tracking it.

Basic data bread & butter

When Sherwood and his colleagues first developed their platform, they admit that they gave very little thought to how they would use data to grow, aside from the obvious obligations around security and compliance.

“Being a test case management tool, we were purely focused on the workflow of performing tests, so much so in fact that the initial beta did not have a single reporting function,” admits Sherwood. “This quickly changed as feedback from users started to grow and the desire for detailed reports from our data grew. The difficulty came from the varied requests with every company seemingly wanting a different approach to how its data is analysed and presented.”

Looking back, Sherwood says this is an issue that has often plagued data engineers: the desire to focus on a specific outcome or tool before understanding the data itself, how it moves through the pipeline, how it is stored and so on. Once the team started to look more closely at the data itself, they began to identify which features were going to have the greatest impact on (and for) users.

Developer dictatorship

“You have to be a bit of a dictator at times as a developer to make sure you don’t try to be all things to all people. We’d spend time creating a feature and monitoring its usage, but if this turned out to be less popular than others, we’d have to take the tough decision to remove it. However, rather than making a hard cut, we’d simply not give new users access to it, phasing it out. This keeps the product lean without upsetting existing users,” detailed Sherwood.
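The "phase it out for new users only" approach Sherwood describes amounts to gating a feature on account age. Below is a minimal sketch, assuming each account records its creation date; the names `FEATURE_RETIREMENT_DATES`, `Account` and `can_use_feature` are hypothetical and are not taken from TestLodge's code.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical retirement schedule: feature name -> date from which newly
# created accounts no longer get access. Illustrative only.
FEATURE_RETIREMENT_DATES: dict[str, date] = {
    "legacy_export": date(2023, 6, 1),
}


@dataclass(frozen=True)
class Account:
    account_id: int
    created_on: date


def can_use_feature(account: Account, feature: str) -> bool:
    """Return True if the account may use the feature.

    Features with no retirement date are available to everyone. A retired
    feature stays available to accounts created before its retirement date
    (grandfathered) and is hidden from accounts created on or after it.
    """
    retired_from = FEATURE_RETIREMENT_DATES.get(feature)
    if retired_from is None:
        return True
    return account.created_on < retired_from


if __name__ == "__main__":
    existing = Account(1, date(2022, 11, 3))
    newcomer = Account(2, date(2024, 2, 14))
    print(can_use_feature(existing, "legacy_export"))  # True
    print(can_use_feature(newcomer, "legacy_export"))  # False
    print(can_use_feature(newcomer, "dashboard"))      # True
```

Keying the cut-off on account creation date rather than on a per-user flag means existing users keep the feature without any data migration, while the feature quietly disappears for everyone who signs up afterwards.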

Another challenge for developers is data presentation and the resource requirements that go along with it.

Deluxe user experiences

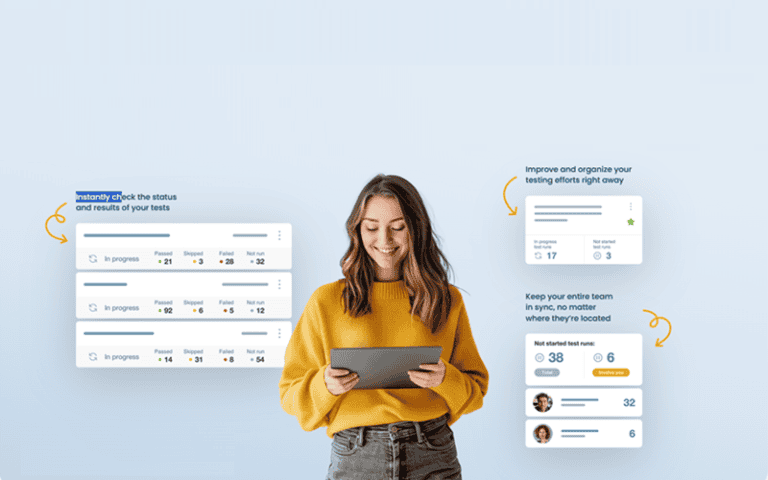

The UX of an interface can often be the determining factor in attracting and retaining new users, so it really needs to appeal at first sight. For TestLodge, the team introduced a user dashboard that gives an instant, at-a-glance view of testing activity, and it proved very popular. The difficulty with this presentation approach is that the dashboard must load instantly, so to maintain performance it can only report on current data and activities.

“When reporting, you are crunching through a lot of data, so you need to dedicate your resources to only analysing the data you need for the desired outcome – no one wants to be waiting hours for a report to be generated and delivered,” said Sherwood. “One solution is to move as much of this to the background as possible and allow users to get on with other tasks while the analysis is taking place. Again though, much of this relies on how well you use your resources – as adding additional [resources] can be costly without necessarily improving the user experience.”
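The two ideas in that quote, analysing only the data a report needs and running the work in the background, can be sketched together as follows. This is an illustration under stated assumptions, not TestLodge's implementation: the `CaseResult` record, `build_report` and `request_report` are hypothetical names, and the standard library's `ThreadPoolExecutor` stands in for whatever job queue a production system would use.

```python
from concurrent.futures import Executor, Future, ThreadPoolExecutor
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CaseResult:
    """One recorded test outcome (hypothetical schema for illustration)."""
    project: str
    run_date: date
    passed: bool


def build_report(results: list[CaseResult], project: str,
                 start: date, end: date) -> dict[str, object]:
    """Summarise pass/fail counts for one project over a date range.

    Rows outside the requested project and range are filtered out first,
    so the aggregation only touches the data this report actually needs.
    """
    relevant = [r for r in results
                if r.project == project and start <= r.run_date <= end]
    passed = sum(1 for r in relevant if r.passed)
    return {"project": project, "total": len(relevant),
            "passed": passed, "failed": len(relevant) - passed}


def request_report(pool: Executor, results: list[CaseResult], project: str,
                   start: date, end: date) -> Future:
    """Queue a report on a background worker and return at once.

    The caller gets a Future and can carry on with other work, collecting
    the report with .result() or via add_done_callback() when it is ready.
    """
    return pool.submit(build_report, results, project, start, end)


if __name__ == "__main__":
    data = [
        CaseResult("web", date(2024, 3, 1), True),
        CaseResult("web", date(2024, 3, 2), False),
        CaseResult("api", date(2024, 3, 2), True),
    ]
    # A small, fixed pool caps how much compute reporting can use at once.
    with ThreadPoolExecutor(max_workers=2) as pool:
        future = request_report(pool, data, "web",
                                date(2024, 3, 1), date(2024, 3, 31))
        # ... the user is free to do other things here ...
        print(future.result())
        # {'project': 'web', 'total': 2, 'passed': 1, 'failed': 1}
```

Capping the pool size reflects Sherwood's point about resources: throwing more workers at reporting costs money without necessarily making the experience better. For CPU-heavy reports in Python, a process pool or a separate worker service would usually be a better fit than threads; the thread pool simply keeps the sketch self-contained.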

Expanding on this, he said that third-party integrations are a great way to let users pick and choose the functions they want without the need for in-house development. In fact, third-party data analysis tools potentially offer a huge leap forward for developers wanting to provide different elements of reporting, as they can work through data and generate reports in a fraction of the time.

However, there are a number of things to consider before introducing these: what do the tools cost, will they integrate well, and will your users’ data be managed ethically and responsibly, in compliance with privacy regulations?

Data future outlook

So, he asks… what does the future look like for developers when it comes to using and reporting on their data?

“It’s hard to look forward at this, or any topic really, without mentioning AI. While its use is inevitable, there is a misunderstanding as to the role it will play. The focus perhaps should not be on ‘will it remove the need for data engineers’ and more be on how engineers will use AI,” said Sherwood. “This comes from a general industry bias to focus on tools rather than the fundamentals – it all starts with the type and quality of your data! Deciding what data sets are correct for specific analysis will give you far quicker and more accurate, effective and relevant reports that use less resources. It will make us more efficient, but it won’t be replacing the need for people anytime soon.”

Finally, as already touched upon, Sherwood says that the ethics and use of data is a concern that’s only going to grow. As more developers use third-party tools, how many will go through the fine print and see where that data is physically stored? How will it be used, shared or perhaps sold? How can you ensure that your users’ data is secure across multiple platforms, jurisdictions and compliance regimes?

In summary, the TestLodge crew remind us that it’s incumbent on developers to keep users’ data safe and to provide ample insight into how third-party integrations factor in. Trust is a commodity we can seldom afford to lose, and in a growing age of automation, being known for ethical engineering will help you stand out.

TestLodge

TestLodge is a web-based tool for managing test cases and test runs. It is designed to help Quality Assurance (QA) teams streamline their testing efforts and collaborate more effectively. It integrates with issue trackers: when a test fails, it can automatically create a ticket in a development team’s preferred issue tracker and then automatically update that issue’s status. Its collaboration features let users easily share information and work together on testing projects, and it provides QA testing reports and metrics and helps developers see which requirements have associated test cases.
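The issue-tracker behaviour described above (open a ticket when a test fails, update it when the status changes) can be pictured as a small synchronisation loop. What follows is a minimal sketch under stated assumptions: `IssueTracker`, `InMemoryTracker` and `FailureSync` are hypothetical names, and the in-memory tracker stands in for a real tracker's API, which this sketch does not model.

```python
from dataclasses import dataclass, field
from typing import Protocol


class IssueTracker(Protocol):
    """Hypothetical interface a tracker integration would implement."""
    def create_issue(self, title: str, body: str) -> str: ...
    def set_status(self, issue_id: str, status: str) -> None: ...


@dataclass
class InMemoryTracker:
    """Stand-in tracker for illustration; not a real tracker's API."""
    issues: dict[str, dict[str, str]] = field(default_factory=dict)

    def create_issue(self, title: str, body: str) -> str:
        issue_id = f"ISSUE-{len(self.issues) + 1}"
        self.issues[issue_id] = {"title": title, "body": body, "status": "open"}
        return issue_id

    def set_status(self, issue_id: str, status: str) -> None:
        self.issues[issue_id]["status"] = status


@dataclass
class FailureSync:
    """Keeps one open issue per failing test case."""
    tracker: IssueTracker
    open_issues: dict[str, str] = field(default_factory=dict)  # case id -> issue id

    def record_result(self, case_id: str, passed: bool, notes: str = "") -> None:
        issue_id = self.open_issues.get(case_id)
        if not passed and issue_id is None:
            # First failure: raise a ticket and remember the link.
            self.open_issues[case_id] = self.tracker.create_issue(
                title=f"Test case {case_id} failed",
                body=notes or "No notes recorded.")
        elif passed and issue_id is not None:
            # The test now passes: update the linked ticket and drop the link.
            self.tracker.set_status(issue_id, "resolved")
            del self.open_issues[case_id]
        # Repeated failures with an open ticket create no duplicates.


if __name__ == "__main__":
    tracker = InMemoryTracker()
    sync = FailureSync(tracker)
    sync.record_result("TC-7", passed=False, notes="Login button unresponsive")
    sync.record_result("TC-7", passed=False)    # still failing: no duplicate
    print(len(tracker.issues))                  # 1
    sync.record_result("TC-7", passed=True)     # fixed: ticket resolved
    print(tracker.issues["ISSUE-1"]["status"])  # resolved
```

Storing the test-case-to-issue link is the design choice that matters here: it is what lets the integration update an existing ticket instead of filing a new one every time the same test fails.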