During the Snapdragon Summit on Maui, Qualcomm CEO Cristiano Amon offered a glimpse of where the (mobile) ecosystem that Qualcomm supplies with chips is heading. Qualcomm envisions a future in which AI moves from the cloud to your devices and takes care of everything for you in every possible way.

Qualcomm invited us to attend the Snapdragon Summit, where two new chips were presented: a new smartphone chip and a new compute chip. The latter is primarily intended for laptops and mini PCs.

These new chips bring the usual CPU and GPU improvements, but the focus is mainly on the NPU (neural processing unit), the part of the chip dedicated to running AI models. NPU performance is expressed in TOPS (trillions of operations per second). The better the NPU, the higher the TOPS figure, and the better the chip can handle AI tasks such as inferencing.

AI currently comes mainly from the cloud

Currently, most AI models still run in the cloud. Many people use AI through ChatGPT, Claude, Copilot, Gemini, or AI functions built into software solutions. All of these rely on models that run in the cloud.

This will not remain the case. It is virtually impossible to provide the entire world with cloud-based AI features. Instead, more and more "simple" AI tasks will gradually move to the device. Editing photos, drafting emails, planning tasks, and scheduling appointments in your calendar are simple tasks that AI can handle locally.

Small AI models are getting better

A few years ago, the prevailing belief was that AI models only improved as they grew larger. That theory is now outdated: more and more smaller models are emerging that perform many times better than much larger models from only a few years ago.

Several of these models can run on laptops and high-end smartphones. OpenAI has the gpt-oss-20b model, Google has Gemini Nano, and Meta has smaller Llama models. Beyond these, there are dozens of other small models.

The most important thing is that these models are constantly improving and are capable of performing tasks locally, generating results that previously required cloud-based processing. This reduces costs and creates new opportunities.

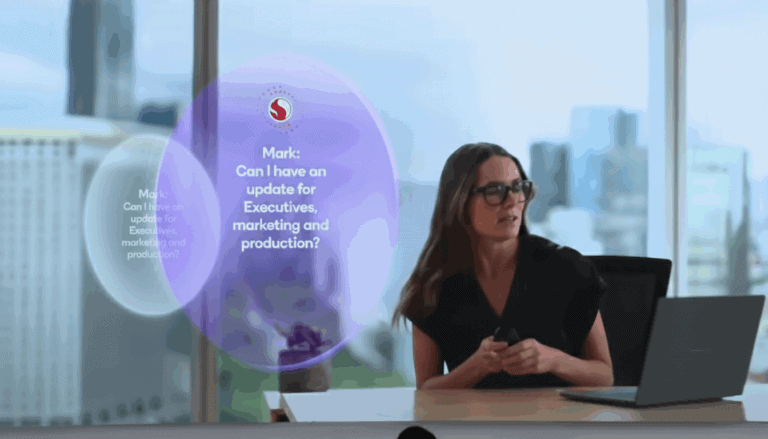

Qualcomm’s vision for the local AI assistant

Qualcomm’s vision is that all your devices, such as smartphones, smartwatches, AR glasses (also known as smart glasses), earbuds, and even your car, will work together as your personal AI assistant. Together with the application ecosystem, these devices would continuously work for you in the background and support you whenever it suits you.

Some examples:

- You receive an email from a colleague asking you to forward some documents. The AI assistant identifies the relevant documents and asks whether it should send them to your colleague.

- Your smart glasses continuously see what you see and can also recognize people, help you remember names, or simply detect that someone you know is nearby.

- The AI assistant detects problems in your calendar, such as when you are going to be late, or notices that you still need to make a restaurant reservation. It can then take care of that for you.

Context-aware 2.0

AI works best when the context is clear. This applies both to how tasks are carried out and to when the AI steps in. Because your devices will soon constantly monitor your location and activities, that context will become even clearer. This will also help the AI assistant judge better when to interrupt you with questions.

For example, if you are in your car on the way to your next appointment, the AI assistant may prompt you to review a few outstanding items, such as the examples mentioned earlier. Ideally, it will no longer interrupt you the moment a request comes in or just as an appointment is about to begin.

From using devices to devices that serve us

This will also change the way we use many devices. Instead of picking up a device to look something up or plan something, we will be supported by AI in such a way that this is no longer necessary. If someone wants to make an appointment with you, AI will propose a time that you only have to approve. In a sense, AI becomes the user interface of the future.

The future of AI is hybrid

Ultimately, the future will not be entirely AI on the device (edge) or entirely in the cloud, but rather a hybrid form. Simple tasks will be handled locally on the device, while more complex tasks will be handled in the cloud, where significantly more computing power is available.

Everything revolves around agents

Finally, an orchestration layer will be needed: something that receives all the information from the various AI agents and translates it into appropriate actions. This layer will undoubtedly come from an application ecosystem, whether at the operating system level or within a workplace solution.

Ultimately, you want the AI assistant to disturb you only when it is appropriate. Not in the middle of the night or during a meeting, but when you are sitting in the car on the way to an appointment, or when you are out getting coffee. You also don’t want your smartphone, smart glasses, smartwatch, and car all notifying you that you are going to be late for your 4:00 p.m. appointment. The orchestration layer will need to determine which device is best suited to each situation. According to Amon, it will be “the most personal technology ever.”

To achieve this, the data your devices collect at the edge is fundamental, because it determines your current personal context: in a meeting, in the car, on the way to the coffee machine, or perhaps in the bathroom.

Qualcomm supplies chips and shows vision; the AI comes from someone else

Ultimately, Qualcomm is “just” the chip supplier. It presents a vision of how AI can operate on the various smart devices it supplies chips for. However, it is up to companies like Google, Samsung, and Microsoft to make that AI vision a reality. It is difficult to predict how long that will take, but we will undoubtedly move closer to this vision in the coming years.