You may have heard about Snowflake recently. The software company had a very successful IPO under the leadership of CEO Frank Slootman. The company is doing well with its cloud data warehouse. Snowflake tells us the solution has become more extensive, so it positions itself more as a data cloud platform.

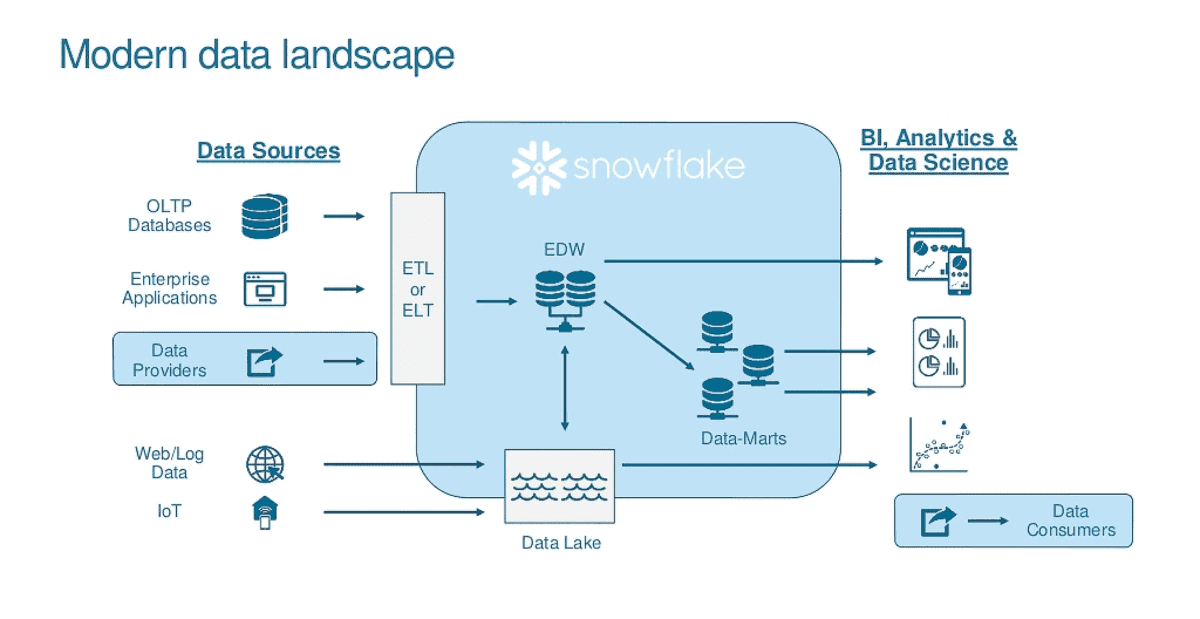

Snowflake was founded in 2012 and has managed to make a name for itself in just 9 years. The company operates in a traditional market with very large players, yet it has broken through by innovating. It has done so in a market in which Oracle is very dominant, alongside big cloud players like Amazon and Google. Snowflake started as a cloud data warehouse solution. The data warehouse is still the core of the business, but the company now presents itself as a complete data cloud platform. According to Snowflake, the platform eliminates architectural complexity so you can run multiple workloads on all three major clouds with the elasticity, performance and scale required by the modern enterprise.

Flexible data platform as a service

Snowflake’s product makes a strong impression. On the one hand, it takes the entire installation, configuration, management and maintenance off the customer's hands. On the other hand, it offers a simple platform on which enormous amounts of data can easily be brought together in one database. All kinds of operations can then be applied to the data in Snowflake, such as running data analysis or linking and restructuring data to feed another application.

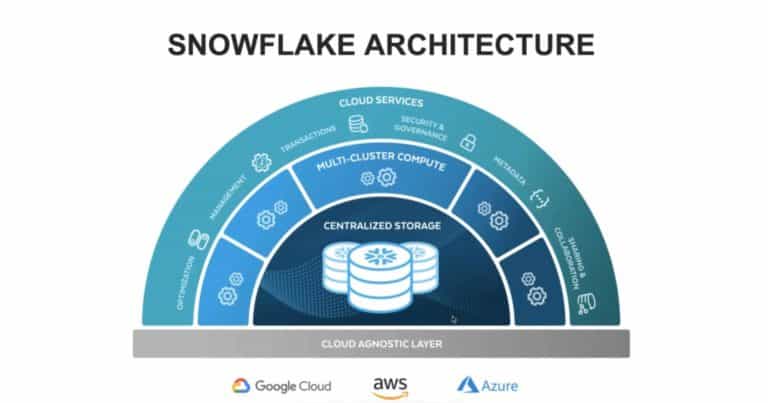

Snowflake’s platform is cloud-agnostic

Snowflake has built the platform from the ground up for the cloud. As a result, it is a cloud-native solution that can run on Amazon Web Services, Google Cloud and Microsoft Azure. Standard cloud principles, such as scalability and affordability, were taken into account in the design. Snowflake can also secure data down to row level and, according to the company, meet requirements around compliance and governance.

Working with Snowflake

For this article, we attended a demo and were able to try out a Snowflake test environment ourselves, so that we could experience how the product works. Snowflake can be used in two ways. Developers can access Snowflake through an API to load, retrieve and analyse data. Alternatively, you can use the web interface, where you can do all these things and see the results in your browser. For our demo, we used the web interface.

Snowflake relies heavily on SQL (Structured Query Language), which many developers should be familiar with and which many data analysts are trained in these days. SQL is one of the simplest languages for working with data and can be learned fairly quickly by anyone. In the web interface, you also have to use various SQL commands to work with Snowflake.

Developers working via the API can combine SQL with the programming language of their choice. Java Database Connectivity (JDBC) and Open Database Connectivity (ODBC) interfaces are available for Snowflake, so you can connect to a Snowflake database from any language that supports one of these interfaces.

Snowflake can handle structured data, such as CSV files or Excel sheets consisting of tables, rows and columns. However, Snowflake has adapted to the cloud era and can therefore also handle semi-structured XML and JSON datasets. This is highly desirable when data from SaaS solutions needs to be retrieved and processed in Snowflake.

Regardless of what the data looked like in the source, in Snowflake it can simply be retrieved using SQL and filtered for certain columns where necessary. It is also possible to quickly combine data from different tables, even if it concerns hundreds of thousands or millions of rows.
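The idea of querying differently shaped sources with plain SQL can be sketched as follows. This is a minimal illustration that uses Python's built-in SQLite module as a stand-in, not Snowflake itself. The sample data, table names and the `load_and_query` function are all hypothetical, and Snowflake's own loading mechanisms work differently.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sample data: customers arrive as CSV, orders as JSON
# (for example, exported from a SaaS application).
CUSTOMERS_CSV = """id,name,country
1,Acme,NL
2,Globex,US
"""

ORDERS_JSON = """[
  {"order_id": 10, "customer_id": 1, "amount": 250.0},
  {"order_id": 11, "customer_id": 2, "amount": 90.0},
  {"order_id": 12, "customer_id": 1, "amount": 40.0}
]"""


def load_and_query():
    """Load both sources into one database and combine them with SQL."""
    conn = sqlite3.connect(":memory:")  # SQLite stand-in, not Snowflake
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, country TEXT)")
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL)"
    )

    # Each CSV row becomes a dict keyed by the header line.
    customers = list(csv.DictReader(io.StringIO(CUSTOMERS_CSV)))
    conn.executemany(
        "INSERT INTO customers VALUES (:id, :name, :country)", customers
    )

    # The JSON array is already a list of dicts with matching keys.
    conn.executemany(
        "INSERT INTO orders VALUES (:order_id, :customer_id, :amount)",
        json.loads(ORDERS_JSON),
    )

    # Once loaded, the original format no longer matters: select only the
    # columns of interest and join the two tables.
    result = conn.execute(
        """
        SELECT c.name, SUM(o.amount) AS total
        FROM customers AS c
        JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    for name, total in load_and_query():
        print(name, total)
```

The point of the sketch is that after loading, the CSV and JSON sources are queried in exactly the same way.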

Furthermore, Snowflake has recently launched a new developer experience called Snowpark. It will allow data engineers, data scientists and developers to write code in their languages of choice, using familiar programming concepts, and then execute workloads such as ETL/ELT, data preparation and feature engineering on Snowflake.

Snowflake is champion in metadata

Snowflake has tried to embrace the cloud model. You pay for actual usage, and you can also determine which type of workload you deploy and when. As an organisation, this gives you more control over the number of workloads you deploy, how long they run and what costs are involved.

Suppose you have a huge database with millions of records in which you want to analyse data. Then you could choose to have your data analyst work with a smaller limited set first to get to the right queries and algorithms. If successful, they can then be applied to the entire data set to see if they lead to the right result.

This is very quick and easy to set up in Snowflake. It is equally important, however, to scale up at the right moment. Once you have developed such a query and algorithm and are about to apply them to a gigantic data set, it is wise to scale up the workload used for that.

In the demonstration we were given, this made a huge difference, and it can save a lot of money. For example, running a heavy query on a large database with a limited workload can take tens of minutes or even hours, while the same query on much faster hardware is completed within a minute. The heavier workload may be only three times as expensive per hour as the smaller workload. So by choosing small workloads, you do not necessarily save on costs. It is really a question of choosing the right workload for the right queries.
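A quick calculation makes the trade-off concrete. The sketch below assumes a hypothetical hourly rate and hypothetical runtimes (30 minutes on the small workload, 1 minute on the large one). Only the "three times as expensive per hour" ratio comes from the demo. Actual Snowflake pricing and billing granularity are not modelled here.

```python
def query_cost(rate_per_hour: float, runtime_minutes: float) -> float:
    """Cost of one query run: hourly rate multiplied by runtime in hours."""
    return rate_per_hour * runtime_minutes / 60.0


# Hypothetical figures, for illustration only.
small_rate = 2.0             # cost units per hour for the small workload
large_rate = 3 * small_rate  # the larger workload is three times as expensive

small_cost = query_cost(small_rate, runtime_minutes=30)  # slow run
large_cost = query_cost(large_rate, runtime_minutes=1)   # fast run

if __name__ == "__main__":
    print(f"small workload: {small_cost:.2f}")
    print(f"large workload: {large_cost:.2f}")
```

Under these assumptions the larger workload is the cheaper option for this query, because the shorter runtime more than offsets the higher hourly rate.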

If you are going to deploy Snowflake in a production environment where the database is used 24/7, you will have to choose a workload that can cope with the requests in most situations. Even then, it is possible to scale up and down based on usage.

Sharing data and collaborating

There is a high demand for good data analysts and the supply is very limited. Many companies therefore regularly hire external analysts to help them analyse crucial business data. Snowflake also enables users from outside the organisation to work with data. For this purpose, users and user rights can be customised, and you can determine which data a user has access to, down to row level. This makes it possible to let an external data engineer work with a more limited data set from which highly sensitive data is withheld. An internal employee can then apply the same analyses to the complete data set, including the sensitive data. In some cases, this makes it easier to meet certain compliance requirements.
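Conceptually, row-level access means that the same analysis sees a different set of rows depending on who runs it. The sketch below illustrates that idea in plain Python. The roles, the `sensitive` flag, the data and both functions are hypothetical, and it does not show how Snowflake implements access control.

```python
# Hypothetical data set; the "sensitive" flag marks rows that external
# users are not allowed to see.
ROWS = [
    {"customer": "Acme", "revenue": 120, "sensitive": False},
    {"customer": "Globex", "revenue": 80, "sensitive": True},
    {"customer": "Initech", "revenue": 50, "sensitive": False},
]


def visible_rows(rows, role):
    """Return the rows a given role may see (hypothetical two-role model)."""
    if role == "internal":
        return list(rows)
    # Any other role is treated as external and gets the restricted view.
    return [row for row in rows if not row["sensitive"]]


def total_revenue(rows):
    """The analysis itself is identical for every user."""
    return sum(row["revenue"] for row in rows)


external_total = total_revenue(visible_rows(ROWS, "external"))
internal_total = total_revenue(visible_rows(ROWS, "internal"))

if __name__ == "__main__":
    print("external analyst sees:", external_total)
    print("internal employee sees:", internal_total)
```

The external analyst can develop and test `total_revenue` on the restricted rows, after which an internal employee runs the unchanged function on the full data set.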

Data exchange

Finally, it is good to know that Snowflake facilitates data exchange. Anyone who has valuable data sets can share them with other Snowflake users, either for free or at a cost. For example, there are databases with information about IP addresses or weather forecasts per city worldwide. For application developers, the Snowflake data exchange can be a valuable source for developing applications faster, because data is more easily accessible.

Conclusion

Snowflake’s platform continues to innovate and is already a lot more than the data warehouse it started out as. According to Snowflake, the platform enables the Data Cloud, a global network where thousands of organizations mobilize data and eliminate data silos. A lot of big tech companies are already Snowflake customers and use it to accelerate application development and data science projects. Over the coming years, the solution will grow further and become more extensive as more companies join the data cloud. After our first meeting, we certainly see the advantages and opportunities for many companies. The cloud-native approach, the speed of innovation and the ease of use in particular will appeal to many companies.