Intel is striving for a world where AI compute is available everywhere. That starts with the datacenter, with the 5th generation Intel Xeon as its beating heart. Yet the new server chip offers something for everyone, with lower costs for all customers thanks to significant efficiency gains.

CPUs such as the Intel Xeon and AMD Epyc play a key role in the global IT infrastructure. Even when companies end up building their own accelerators, such as AWS with the Graviton and Trainium, they still rely on a processor driving a server cluster. “If there’s an accelerator, it needs a host CPU,” concludes Marc Sauter, EMEA Technology Communications Manager for Enterprise at Intel.

The CPU is also crucial for AI workloads, Sauter argues. He points out that Nvidia CEO Jensen Huang chose 4th-generation Xeons with the DGX H100, which Nvidia itself describes as the “gold standard for AI infrastructure.” Sauter attributes this choice by Huang to the unmatched single-threaded performance of the Intel CPU. Xeons are, in essence, an understated driving force behind the current AI revolution, even if it doesn’t get the same attention (and favourable share prices) as others. In addition, small and medium AI models are still suitable for fine-tuning and running on Xeons, while GPUs only need to be deployed as the big guns to tame a particularly large LLM like Meta’s Llama 70B or OpenAI’s GPT-4. For a timely AI training process on the dataset, you do still really have to turn to Nvidia (and even then, it may take weeks).

“The best CPU for AI”

When presenting the new 5th generation Xeons, Intel made no bones about it: it’s apparently “the best CPU for AI, hands down.” In this, the processor doesn’t just play second fiddle behind the coveted AI capable GPUs. The Sapphire Rapids architecture of the previous generation of Xeons was already largely focused on specific workloads, including AI. For that purpose, it uses special accelerators, embedded components in the CPU that are ideally suited for select tasks. It’s a markedly different approach from AMD’s, whose Genoa and Bergamo server chips contain astounding numbers of cores. According to Sauter, we’ve reached a point where throwing cores at the problem isn’t always beneficial: extra performance costs a disproportionate amount of energy, which quickly adds up on the balance sheet. So efficiency, as well as performance, is important. “We want the best of both worlds,” Sauter states. IDC research also shows that most deployed solutions use a “midrange” number of cores, i.e., 16 or 32 rather than 64, 96 or even 128 cores.

Emerald Rapids, the architecture behind the 5th generation Xeons, builds on this philosophy. However, the number of tiles, discrete pieces of silicon that make up a single processor, has been cut in half. Instead of four tiles, there are now two, which brings all kinds of technical advantages. The bottom line is that the new architecture delivers up to four additional cores (to 64) across the chip, with major efficiency gains to boot.

The biggest performance gains over Gen 4 are a massively faster networking and storage throughput (+70 percent) and significantly improved AI inferencing (+42 percent). A tripling of L3 cache is a major factor in this. On top of that, owners of existing 4th generation Xeon platforms can install the new processor with a relatively simple drop-in upgrade. However, they cannot do so if they want to fill 8 sockets in one system, a rare scenario.

Who is the customer?

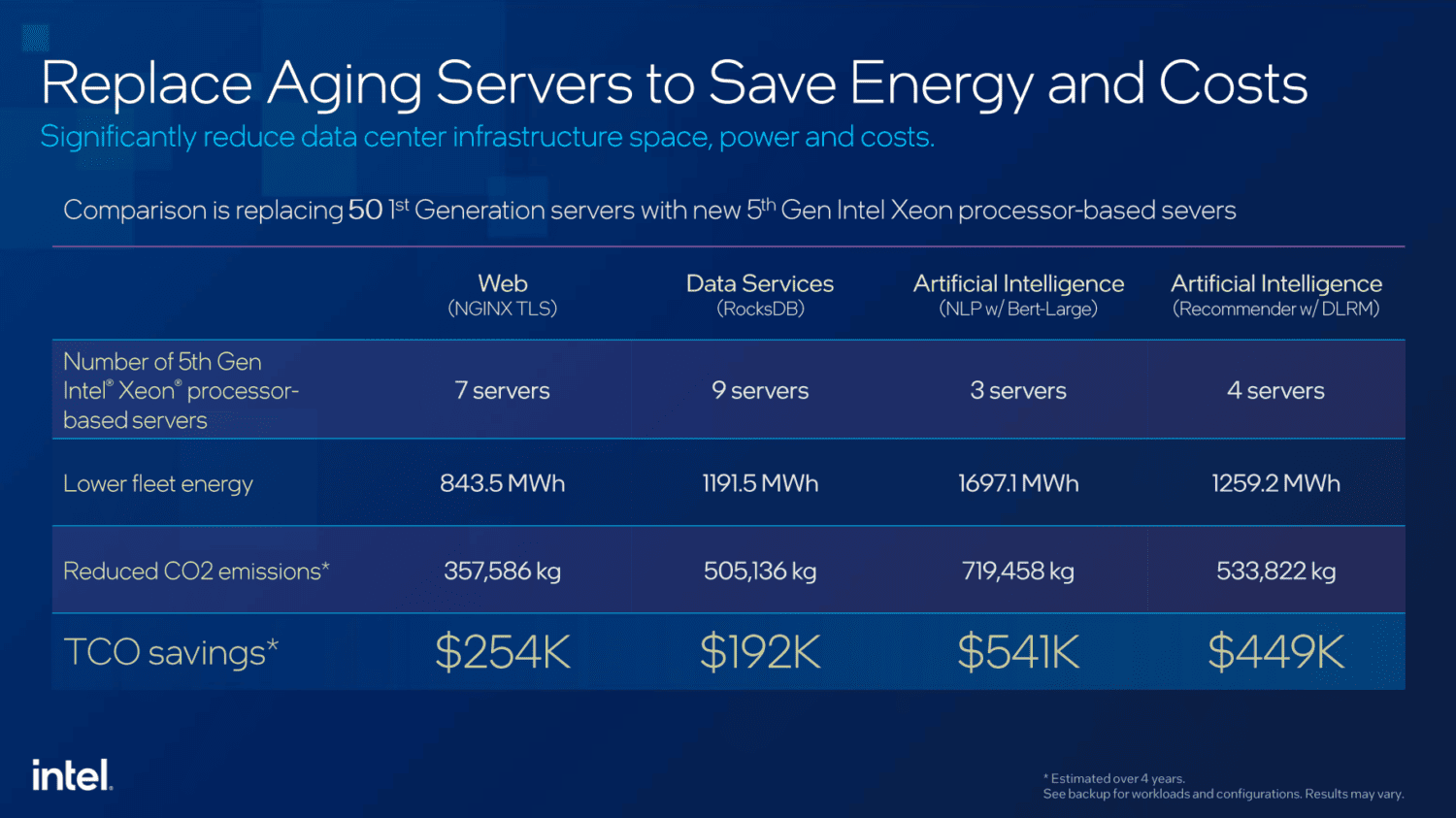

Sauter points out that the majority of Xeon owners are still using 1st and 2nd generation chips. Xeon generation 2, launched in April 2019, is the most common of them as it stands. Since the usual upgrade cycle for server chips is three to four years, according to Sauter, Gen 5 will be an entry point for many. That was always going to be the case for the biggest cloud players on the market, as they consistently upgrade every year. For the rest of the Xeon customer base, now might be the scheduled time to get into the new platform. A pretty appealing TCO visage awaits them, if Intel is to be believed:

Intel continues to insist on TCO (Total Cost of Ownership), also when comparing its latest chips to Xeon’s 3rd generation. Relative to AMD, the numbers don’t lie: roughly 30 Intel Xeon servers would be competitive with 50 AMD Epyc 9554 servers, while that battle becomes even more one-sided when AI workloads are involved. Indeed, those concentrating solely on AI would be able to beat 50 AMD-equipped servers with just 15 Intel counterparts. Such users could also benefit from a 62 percent cost savings in the most extreme case, viewed over a four-year time frame. When running Web or data services and HPC workloads, customers will save between 21 and 27 percent over four years with the latest Xeon, according to Intel.

Looking ahead already

We already know the Emerald Rapids architecture won’t stay the benchmark for long. Granite Rapids, the code name for the 6th generation Xeons, will feature three times as much memory bandwidth. At the same time, a tripling of AI performance awaits, Sauter informs us. He also reminds us that these chips have a development time of about three to four years. In other words, Intel didn’t simply anticipate the AI revolution of 2023, as they had already worked on accelerators for this now-hyped workload. Sauter refers to Knights Mill, a 2017 Xeon Phi product focused on deep learning, to indicate that Intel has been on the AI train for a long time. “For the last decade, Intel has been an AI company,” he said.

For now and the near future: continuous computing

One notable concept during Intel’s unveilings is that of “continuous computing”. This refers to the phenomenon that it has AI hardware in datacenters, at the edge and at the client, all within the Intel portfolio. A “hybrid approach” utilizing all of those compute offerings will become increasingly popular, Sauter believes. A developer can construct their AI application with the help of to open toolkits like OpenVINO, while Intel determines behind the scenes what available hardware is best to run it on. As many tasks as possible will eventually be moved to the most effective place possible, which in the long run means we’ll get more client AI. The reason for that is that ultimately, latency is obviously at its lowest for the end-user when calculations are made on the local device. In other words: running AI will be done mostly at home in some as-yet undefined number of years. This will be necessary, too, given the strict privacy requirements for personal data that the European Union in particular maintains.

At least, that’s the suggestion given by the simultaneous announcement of Intel’s Core Ultra laptop chips. The company has encouraged more than 100 software vendors to develop AI-powered applications that can be run (in part) locally on the brand new NPUs (Neural Processing Units) in the upcoming generation of laptops. However, it appears from our conversation with Sauter that the heavier AI tasks will still be handled by more powerful hardware outside of the reach of consumers for some time to come. After all, AI hardware on laptops is still in its infancy (indeed, it’s a newborn at the time of writing). He doesn’t say it explicitly, but Intel’s commitment really does seem to be to enable the AI revolution primarily through datacenters.

Also read: Intel Core Ultra: the promise of an AI PC personalized to you