As expected, Nvidia presented a fleet of new GPUs at CES 2025. But more important, and possibly more profitable in the long run, is a $3,000 Blackwell-based mini-PC. What is Nvidia planning?

The initial lineup of Blackwell GPUs for desktop PCs is as follows. At the top sits the RTX 5090 ($1,999), followed by the RTX 5080 ($999), the RTX 5070 Ti ($749) and the RTX 5070 ($549). Nvidia claims they’re significantly more powerful than their RTX 4000 series counterparts (not a surprise). Additionally, the prices are generally a little less scary than some had feared.

More importantly, however, Nvidia is not stopping here. Blackwell was introduced at Nvidia’s GTC conference last year, above all as heavy artillery aimed at the datacenter. Given that Nvidia’s datacenter revenues are roughly nine times those of its consumer business, that focus was not surprising. But Blackwell can also scale down and still be extremely useful for generative AI. Indeed, a little brother of the giant GB200 Grace Blackwell Superchip, which powers the GB200 NVL72 rack-scale system, suggests a new opportunity for Nvidia. In the process, the company may further solidify its already immense market position.

An AI supercomputer on your desk

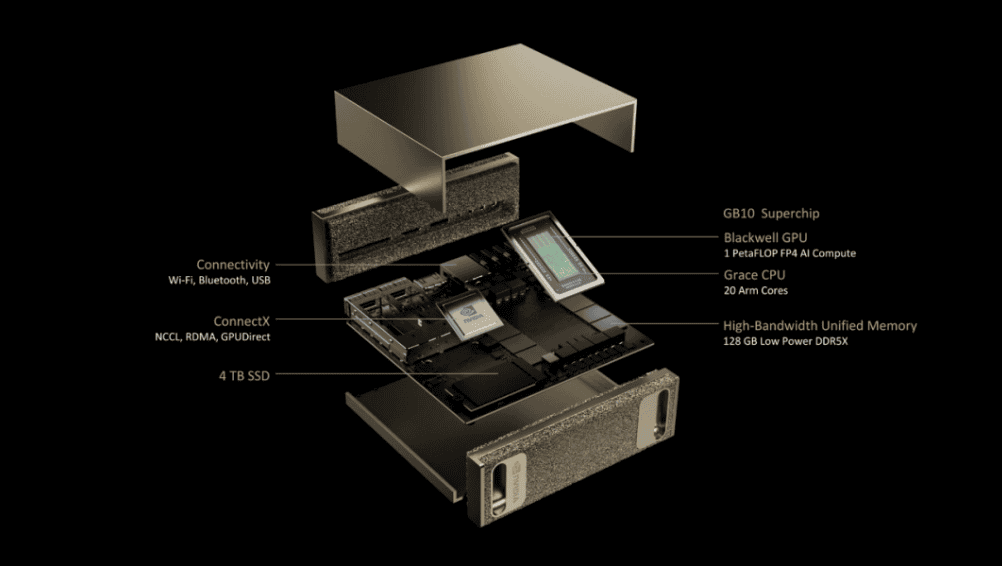

‘Project Digits’ is the code name for a new product based in part on Blackwell. It is a $3,000 mini-PC with 128 GB of LPDDR5X memory, built around an Nvidia GB10 Grace Blackwell Superchip. Like the immense GB200 for data centers, GB10 combines an Arm-based Grace CPU with Blackwell GPU cores for AI grunt. In short, when it comes to compute, it is Nvidia from head to tail. It is also a lot more impressive than Nvidia’s earlier Jetson Orin Nano, for example. As a reminder, that is a tiny, Raspberry Pi-like device (which, as it happens, potentially offers more TOPS than the NPUs inside AI PCs).

The bottom line is that Project Digits can handle LLMs of up to 200 billion parameters. In other words, Llama 3.1 405B is a step too far for a single unit, while the 70B variant fits with great ease. However, Nvidia makes it possible to link two Project Digits boxes via ConnectX networking, and that pairing does make it possible to run Meta’s 405B iteration of Llama 3.1 on your desktop. Similarly, had Anthropic’s LLMs been open-source, Claude 3.5 Sonnet, estimated to contain around 175 billion parameters, could have run locally on a single unit. A model tailored to fit Project Digits’ limits would likely be capable enough for whatever GenAI task you have in mind.
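The parameter limits above follow from simple memory arithmetic. The minimal Python sketch below assumes every weight is stored at 4 bits (half a byte) and ignores the KV cache, activations and operating-system overhead, so real headroom is smaller. Only the 128 GB figure comes from Nvidia’s announcement; the helper names are hypothetical.

```python
# Rough weight-memory estimate for one or two linked Project Digits units.
# Assumptions (not Nvidia specifications): 4 bits per weight; KV cache,
# activations and OS overhead are ignored entirely.

MEMORY_PER_UNIT_GB = 128  # LPDDR5X per box, per Nvidia's announcement
BITS_PER_PARAM = 4        # assumed 4-bit quantization


def weight_memory_gb(params_billion: float, bits: int = BITS_PER_PARAM) -> float:
    """Weights-only memory in GB (10**9 bytes): billions of params * bits / 8."""
    return params_billion * bits / 8


def fits(params_billion: float, units: int = 1) -> bool:
    """True if the quantized weights fit in the combined memory of `units` boxes."""
    return weight_memory_gb(params_billion) <= MEMORY_PER_UNIT_GB * units


if __name__ == "__main__":
    models = [
        ("Llama 3.1 70B", 70),
        ("Claude 3.5 Sonnet (estimated size)", 175),
        ("200B limit", 200),
        ("Llama 3.1 405B", 405),
    ]
    for name, size in models:
        print(f"{name}: {weight_memory_gb(size):.1f} GB, "
              f"one unit: {fits(size)}, two units: {fits(size, units=2)}")
```

Under these assumptions a 200B model needs about 100 GB, leaving roughly 28 GB of the 128 GB for everything else, while 405B needs about 202.5 GB and therefore only fits across two linked units.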

However, models must be reduced to 4-bit precision to fit, which reduces the accuracy of the output. Exactly how much quality is lost is unclear. In addition, Project Digits is currently aimed only at developers. It ships with a special variant of Ubuntu Linux to take full advantage of the underlying architecture. We’re not expecting this mini-PC to be all that user-friendly.
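To illustrate why 4-bit storage costs accuracy, here is a minimal sketch of generic symmetric integer quantization: every weight is snapped to one of at most 16 levels, and the rounding error is bounded by half a quantization step. This is a textbook scheme chosen for illustration; it likely differs from Nvidia’s own approach (Blackwell hardware supports a floating-point FP4 format) and from whatever toolchain ships with Project Digits. The function names are hypothetical.

```python
# Illustrative symmetric 4-bit integer quantization with one per-tensor scale.
# A generic textbook scheme, not Nvidia's FP4 format or any specific toolkit.
import random

QMIN, QMAX = -8, 7  # signed 4-bit integer range


def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to 4-bit integer codes using a single scale for the tensor."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = max_abs / QMAX
    # Round to the nearest level, then clamp into the 4-bit range.
    codes = [max(QMIN, min(QMAX, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the 4-bit codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    random.seed(0)
    w = [random.gauss(0.0, 0.02) for _ in range(1000)]
    codes, scale = quantize_int4(w)
    w_hat = dequantize(codes, scale)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print(f"distinct levels: {len(set(codes))}, max abs error: {max_err:.5f}, "
          f"half a step: {scale / 2:.5f}")
```

Practical quantizers usually use per-channel or per-block scales to shrink that error, which is one reason output quality can vary between implementations.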

But we do see something in Project Digits that goes beyond a devkit. What if the company comes up with an intermediate form between the minuscule Jetson Orin Nano and this new desktop AI solution? In that case, organizations, even small ones, could have their own local inferencing box with enough potential to produce meaningful outputs. At that point, there would be no need for expensive cloud API usage, and no risk of business data leaking through such a process.

What can an AI PC do?

The promise of organizations getting started with AI locally is already there. AI PCs are everywhere, some suggest. AMD, Intel and Qualcomm already provide Windows Copilot+ systems with their own NPU, often alongside a powerful CPU and regularly a GPU with additional AI horsepower if required.

There is much wrangling over each vendor’s exact TOPS (trillions of operations per second), a metric that supposedly shows how powerful its AI capabilities are. Typically, AI PC figures range from a few dozen TOPS for the NPU alone (Copilot+ PCs require at least 40) to around a hundred for the whole platform. However, if you were to put one of the latest Nvidia GPUs into a run-of-the-mill desktop, you’d trounce any such AI PC: according to Nvidia, the RTX 5090 delivers 3,352 TOPS, the 5080 1,801, the 5070 Ti 1,406 and the 5070 988. Although the exact specifications of Project Digits are not yet known, we have to assume that its TOPS sit closer to the discrete GPU range than to what AI PCs’ NPUs offer.
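The gap is easy to quantify. The sketch below divides Nvidia’s quoted figures by an assumed 40-TOPS NPU, the Copilot+ minimum. Vendors quote TOPS at different numeric precisions, so the resulting ratios are indicative only; the dictionary and function names are illustrative.

```python
# Compare Nvidia's quoted RTX 50-series TOPS with an assumed AI PC NPU.
# NPU_TOPS is an assumed baseline (the Copilot+ minimum of 40 TOPS). Vendors quote
# TOPS at different numeric precisions, so these ratios are only indicative.

RTX_50_TOPS = {"RTX 5090": 3352, "RTX 5080": 1801, "RTX 5070 Ti": 1406, "RTX 5070": 988}
NPU_TOPS = 40  # assumed baseline NPU


def ratio_to_npu(gpu_tops: int, npu_tops: int = NPU_TOPS) -> float:
    """How many times more TOPS the GPU claims than the NPU baseline."""
    return gpu_tops / npu_tops


if __name__ == "__main__":
    for name, tops in RTX_50_TOPS.items():
        # 1 TOPS = 10**12 operations per second
        print(f"{name}: {tops * 10**12:.2e} ops/s, "
              f"~{ratio_to_npu(tops):.0f}x a {NPU_TOPS}-TOPS NPU")
```

Even the RTX 5070 claims roughly 25 times the TOPS of such an NPU.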

But TOPS are not the ultimate measuring stick for GenAI. What you can actually run on a system is much more relevant, and for AI PCs there is still no clarity at all on their maximum potential today. Judging by AI PCs, local models are clearly worse than cloud-based ones. Nvidia is bringing about a change on this front with Project Digits: it has laid out what works on its new hardware and what doesn’t, something that is far more common with products for developers than with those targeting consumers.

Much is still unknown, though. For example, what is the memory bandwidth of Project Digits? How many TOPS does it offer (we’re curious anyway)? And are there drawbacks, such as a restrictive OS that is optimized for Nvidia tooling and tolerates nothing else? We need more clarity there. But as an impetus toward machines that put GenAI workloads at your fingertips on premises, Project Digits certainly succeeds.
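Memory bandwidth matters because of a common rule of thumb: when a single user generates text, each new token requires reading essentially all model weights from memory once, so bandwidth divided by the size of the weights caps tokens per second. The sketch below applies that rule to a 70B model at 4 bits per weight. The bandwidth values are hypothetical placeholders, since Nvidia has not disclosed the figure, and compute limits, KV-cache traffic and batching are ignored.

```python
# Back-of-the-envelope ceiling on single-user token generation speed.
# Assumption: decoding is memory-bandwidth bound, so each new token streams all
# weights from memory once. Compute, KV-cache reads and batching are ignored.
# Project Digits' bandwidth is undisclosed; the values below are hypothetical.


def max_tokens_per_second(bandwidth_gb_s: float, weights_gb: float) -> float:
    """Upper bound on tokens/s when every token reads all weights once."""
    return bandwidth_gb_s / weights_gb


if __name__ == "__main__":
    weights_70b_4bit = 70 * 4 / 8  # 35 GB for a 70B model at 4 bits per weight
    for bw in (250, 500, 1000):  # hypothetical bandwidths in GB/s
        limit = max_tokens_per_second(bw, weights_70b_4bit)
        print(f"{bw} GB/s -> at most {limit:.1f} tokens/s")
```

With a 35 GB model, every doubling of bandwidth doubles the ceiling, which is why the undisclosed figure matters as much as TOPS.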
