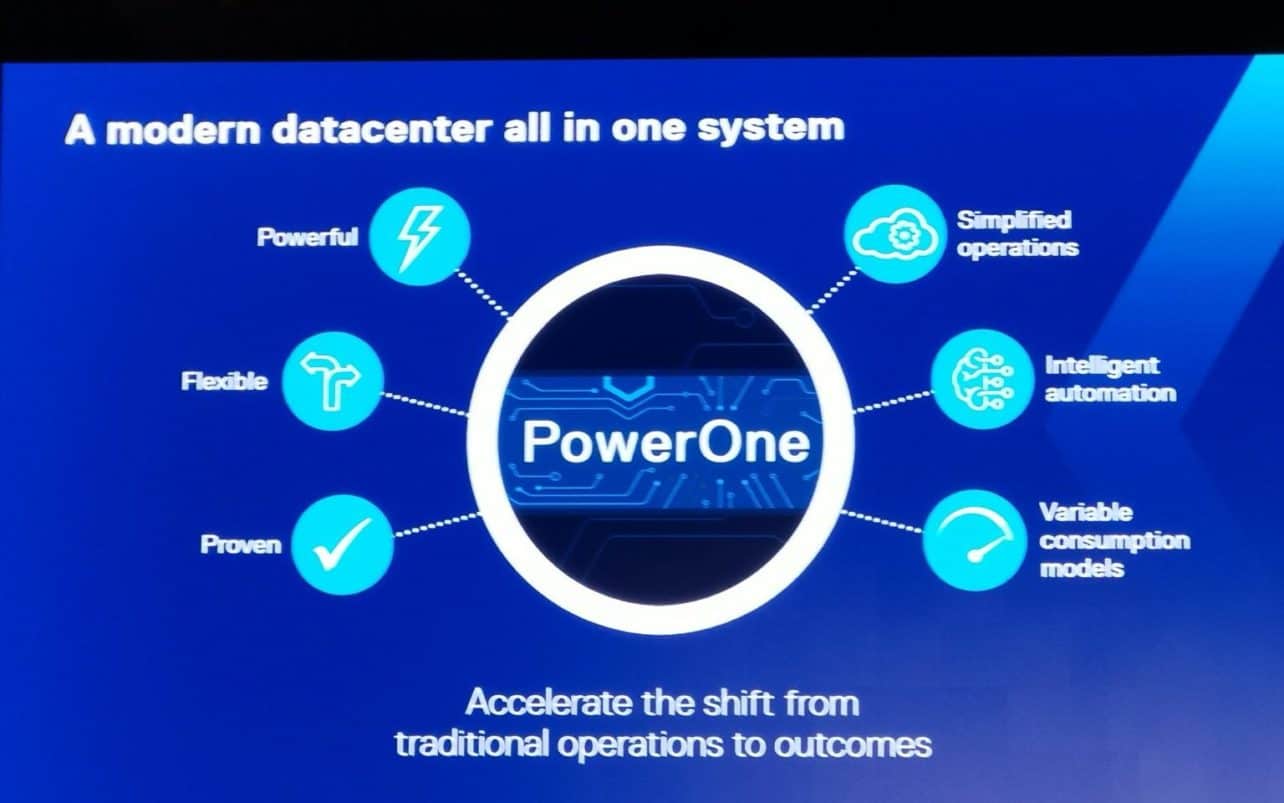

Dell Technologies presented a completely new product at its dedicated analyst and media event in Austin, Texas. With PowerOne, the company is bringing to market an autonomous data center that takes virtually all of the work out of IT operations. PowerOne is also available as a service or on a consumption-based model.

It was only a matter of time before the first autonomous data center arrived, and Dell Technologies is the first vendor to take that step. Others are likely to follow soon; we certainly expect a similar product from HPE, based on its HPE InfoSight database.

For now, though, it is Dell Technologies that is launching a completely autonomous data center, one that takes an enormous amount of work off your hands.

What is Dell PowerOne?

The Dell PowerOne is an autonomous data center that actually consists of existing Dell Technologies solutions tied together by a software layer: a software-defined data center. A company that chooses to buy PowerOne starts with a complete rack, after which there is plenty of room to scale up. As standard, a PowerOne rack contains at least one PowerMax to store data, a PowerSwitch 100GbE fabric to connect all components, and one compute pod with 8 nodes. That is the basic layout; the configuration of the PowerMax and the nodes can of course still be tailored to your requirements. Multiple PowerMax storage arrays can also be combined, and the number of nodes can be increased to as many as 240, which requires multiple racks.

Ultimately, Dell Technologies wants the PowerOne to be highly scalable, so that the autonomous data center can meet any need. Based on comments from several executives, we also don't rule out further expansions in the future.

How is a PowerOne composed?

The PowerOne is an autonomous data center, but customers' configurations and requirements vary. We wondered how Dell Technologies would deal with this, because it clearly presents challenges. If you need to scale up, you must be able to, and the system must know which resources are needed and which are actually available. Perhaps some storage arrays could be distributed differently to achieve higher IOPS. That may be obvious to a person, but machines do not think like people; that logic has to be built in.

Dell explained to us that when it puts together a customer's first PowerOne configuration, it looks very closely at what the customer actually wants to run on it. Whether that is a SharePoint environment, Windows servers, a large data warehouse or a few thousand VMware virtual machines, the initial configuration must fit the customer's needs. Getting that first match right is very important, because otherwise you will have to make all kinds of costly adjustments immediately after putting the PowerOne into production.

Dell Technologies has set up a dedicated program to map out the required number of processors, processor cores, memory and storage capacity. Its output is a concrete PowerOne configuration. And the configuration is not the only output: a complete cabling plan and the configurations of the nodes and PowerMax arrays are also determined immediately.
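To make the sizing step concrete, here is a minimal Python sketch, assuming that sizing boils down to covering the summed core and memory demand of the planned workloads. The `NodeSpec` and `Workload` types, the per-node capacities and the workload figures are hypothetical; only the 8-node basic layout and the 240-node ceiling come from the article. Dell's actual program presumably weighs many more factors, such as IOPS and storage layout.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical capacity of a single compute node."""
    cores: int
    memory_gb: int


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of one workload the customer plans to run."""
    name: str
    cores: int
    memory_gb: int
    storage_tb: float


MIN_NODES = 8    # basic layout: one compute pod with 8 nodes
MAX_NODES = 240  # stated upper limit, spread across multiple racks


def size_nodes(workloads, node):
    """Return the number of nodes needed to cover total core and memory demand."""
    total_cores = sum(w.cores for w in workloads)
    total_mem = sum(w.memory_gb for w in workloads)
    # Whichever resource runs out first determines the node count.
    needed = max(math.ceil(total_cores / node.cores),
                 math.ceil(total_mem / node.memory_gb))
    nodes = max(needed, MIN_NODES)  # never below the basic layout
    if nodes > MAX_NODES:
        raise ValueError(f"{nodes} nodes exceeds the {MAX_NODES}-node limit")
    return nodes


def total_storage_tb(workloads):
    """Total raw storage the PowerMax side must provide (no overhead modeled)."""
    return sum(w.storage_tb for w in workloads)
```

For example, a SharePoint workload of 64 cores and 512 GB plus a data warehouse of 512 cores and 4,096 GB, on hypothetical 48-core, 768 GB nodes, is core-bound and comes out at 12 nodes, while a tiny workload still gets the 8-node minimum.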

As soon as the customer finalizes the order, all configurations and details go to a Dell factory, where the plans are executed exactly as drawn up. The rack is built and cabled according to the cabling plan and then delivered to the customer ready to use. Finally, the configuration is loaded into the software-defined data center layer that runs across the hardware. This way, the environment knows exactly which resources are available and how they are classified and distributed across the various racks and nodes.

How is a PowerOne autonomous data center managed?

As the name suggests, an autonomous data center takes management and maintenance tasks off your hands, although not all of them. You still need to specify somewhere, for example, what kind of environment you need. Dell has developed both a control panel and a set of APIs for this purpose. Ultimately, Dell has the highest expectations of the APIs, because they also allow this last step to be automated from a central environment.

Within the control panel, three so-called assists are available: assistants that help you perform a task. They are launch assist, lifecycle assist and expansion assist.

Launch assist

Launch assist is designed to create a completely private environment with a specified amount of storage, compute and memory. It is also possible to define a target so that, for example, vSphere is rolled out and you can immediately start working in a VMware environment. This all sounds very simple, but under the hood it certainly is not.

Normally, you would have to do all of this by hand: decide which servers and storage to deploy, create the storage array, create the links between all components and roll out vSphere yourself. According to Dell, launch assist takes about 2,000 configuration steps off your hands. Work that normally takes weeks can be done within a few minutes. That is where the first real gain lies.
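Automating that many steps depends on knowing how they depend on one another: storage must exist before it can be linked, and links must exist before vSphere can use them. The sketch below illustrates the idea with Python's standard `graphlib` module (Python 3.9+), grouping steps into batches whose members could run in parallel. The step names and dependencies are hypothetical, and this is not a description of how launch assist works internally.

```python
from graphlib import TopologicalSorter

# Hypothetical subset of launch steps: each step maps to the steps it depends on.
LAUNCH_STEPS = {
    "select_nodes": set(),
    "select_storage": set(),
    "create_storage_array": {"select_storage"},
    "configure_fabric_links": {"select_nodes", "create_storage_array"},
    "install_vsphere": {"configure_fabric_links"},
    "register_datastores": {"install_vsphere", "create_storage_array"},
}


def launch_batches(steps):
    """Group steps into batches; every step in a batch has all its dependencies met.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    sorter = TopologicalSorter(steps)
    sorter.prepare()  # validates the graph and detects cycles
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for a stable, readable order
        batches.append(ready)
        sorter.done(*ready)  # mark the batch complete, unlocking its dependents
    return batches
```

For the hypothetical steps above, the two selection steps form the first batch and the remaining steps follow one at a time; in a real deployment with thousands of steps, the wider batches are where parallelism would save time.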

Lifecycle assist

The second is lifecycle assist, which manages all software automatically. Think of all the firmware on the servers, from the BIOS to the RAID controller, but also software such as vSphere. With lifecycle assist, you can automatically update all software and upgrade vSphere to a newer version, so you don't have to worry about it.
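At its core, lifecycle management starts with comparing what is installed against a target baseline. The following is a minimal sketch, assuming dotted numeric version strings; the component names and version numbers are hypothetical. Versions are compared as integer tuples rather than as strings, because a string comparison would wrongly rank `2.9.3` above `2.10.0`.

```python
def parse_version(version):
    """Turn a dotted numeric version such as '2.10.0' into (2, 10, 0)."""
    return tuple(int(part) for part in version.split("."))


def pending_updates(installed, target):
    """Return, sorted by name, the components older than the target baseline.

    Both arguments map a component name to its version string; components
    missing from the baseline are left alone.
    """
    return sorted(
        name
        for name, version in installed.items()
        if name in target and parse_version(version) < parse_version(target[name])
    )
```

With a hypothetical inventory where the BIOS is at `2.9.3` against a `2.10.0` baseline and vSphere is at `6.5.0` against `6.7.0`, both components are flagged, while an already up-to-date RAID controller is not.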

Expansion assist

Finally, there is expansion assist. An environment can always run into its limits, whether through a lack of storage or simply too few nodes to provide memory and compute. In that case, it can be expanded with extra racks that are connected to the existing environment. Once the new hardware is in place, expansion assist must be run manually so that the hardware configuration parameters are updated. This lets the AI and machine learning algorithms of the software-defined data center analyze more accurately how environments can be laid out more efficiently. One goal, for example, is preventing noisy neighbors: if some storage arrays generate very high IOPS and others very low IOPS, it is wiser to place high-IOPS and low-IOPS arrays together on the same physical hardware than to put several high-IOPS arrays side by side.

You also often want to keep storage arrays for VMs separate from those for bare-metal instances, which PowerOne also supports.
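The noisy-neighbor rule of thumb from expansion assist, combining high-IOPS arrays with low-IOPS ones, can be sketched as a simple greedy pairing: sort the arrays by load and pair the busiest with the quietest. This is only an illustration of that heuristic, assuming that two arrays share each piece of hardware and that load can be summarized as a single average IOPS figure. The array names and numbers are hypothetical, and Dell's machine-learning placement is presumably far more involved.

```python
def pair_high_with_low(arrays):
    """Pair the busiest array with the quietest, the next busiest with the next quietest.

    `arrays` maps array name -> average IOPS. Returns tuples of names; with an
    odd number of arrays, the median-load array ends up on its own.
    """
    ranked = sorted(arrays, key=arrays.get)  # names, quietest first
    groups = []
    lo, hi = 0, len(ranked) - 1
    while lo < hi:
        groups.append((ranked[hi], ranked[lo]))
        lo += 1
        hi -= 1
    if lo == hi:  # odd count: one array left in the middle
        groups.append((ranked[lo],))
    return groups


def peak_load(groups, arrays):
    """Highest combined IOPS on any single piece of shared hardware."""
    return max(sum(arrays[name] for name in group) for group in groups)
```

With hypothetical loads of 90,000, 70,000, 3,000 and 1,000 IOPS, this pairing yields a peak of 91,000 IOPS on any one piece of hardware, whereas putting the two busiest arrays together would produce 160,000.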

How did Dell build this software-defined data center?

As mentioned earlier, the PowerOne consists of a rack of existing Dell products, from a PowerMax storage array to PowerEdge servers in blade form in a compute pod. All of this is tied together by a PowerSwitch fabric, and the software-defined data center runs on top.

Naturally, we wanted to know more about that, because the hardware is ultimately the least innovative part of this autonomous data center; it is the software that makes it all possible. That software combines various open-source technologies. For example, Dell uses a large number of Kubernetes containers combined with Ansible playbooks to build and maintain the autonomous data center.

As soon as the machine learning engine detects that a task needs to be performed, containers are launched to carry it out. This can mean simply running an Ansible playbook, or actually moving and optimizing certain environments across the available hardware. The tasks that happen automatically vary widely. Dell expects, however, that developments around Kubernetes in particular can extend this considerably, so there is even more value to be gained from this autonomous data center. Dell does not rule out further news about PowerOne in the near future, in the form of new features, capabilities and products.
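To illustrate the general pattern of launching a container that runs an Ansible playbook, the sketch below turns a detected task into a Kubernetes `batch/v1` Job manifest whose container invokes `ansible-playbook`. The task types, playbook paths and container image are hypothetical placeholders; Dell has not said how its engine maps tasks to playbooks. The resulting dictionary could be serialized to JSON and submitted to a cluster, for example with `kubectl apply -f`.

```python
import json

# Hypothetical mapping from a detected task type to the playbook that handles it.
PLAYBOOKS = {
    "firmware_update": "lifecycle/firmware.yml",
    "rebalance_storage": "placement/rebalance.yml",
}

# Hypothetical image assumed to contain Ansible and the playbooks above.
DEFAULT_IMAGE = "registry.example.com/ansible-runner:latest"


def job_for_task(task_type, task_id, extra_vars=None, image=DEFAULT_IMAGE):
    """Build a Kubernetes Job manifest that runs the playbook for one task.

    Raises KeyError for an unknown task type. `task_id` should be lowercase
    alphanumeric so the Job name is a valid Kubernetes object name.
    """
    command = ["ansible-playbook", PLAYBOOKS[task_type]]
    if extra_vars:
        # ansible-playbook accepts extra variables as a JSON string.
        command += ["--extra-vars", json.dumps(extra_vars, sort_keys=True)]
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        # Underscores are not allowed in object names, so swap them for dashes.
        "metadata": {"name": f"{task_type.replace('_', '-')}-{task_id}"},
        "spec": {
            "backoffLimit": 0,  # do not retry automatically; let the engine decide
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {"name": "playbook", "image": image, "command": command}
                    ],
                }
            },
        },
    }
```

Running each task as a one-off Job keeps the runs isolated and leaves a record of success or failure per task, which fits the "launch a container per operation" model described above.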

PowerOne as an extension of the cloud

Much has already been said and written about the hybrid cloud, in which the on-premise environment can burst into the cloud or work together with a cloud environment. The on-premise environment usually serves as the foundation. In theory, PowerOne could mark a new step: a kind of next-generation hybrid cloud.

Before that happens, Dell still has to strike deals with the major hyperscalers to get PowerOne certified for their on-premise offerings, such as Microsoft's Azure Stack or AWS Outposts. If that happens, it could become possible to bring the fast pace of cloud innovation on-premise as well.

In this scenario, a company links its PowerOne autonomous data center to its public cloud account, and the hyperscaler then takes control. Using the available APIs, environments are set up and cloud products are rolled out on demand. Companies can then, as it were, run their own region or availability zone in their own data center. Enterprise organizations are demanding more control over their data, and the debate about what should run where seems endless. At the same time, they want to benefit from the rapid innovation the cloud brings. This could be the best of both worlds, especially since PowerOne offers much more scale.

We have already spoken with AWS and presented the idea behind PowerOne; AWS says the chances that it will embrace PowerOne are very small. AWS Outposts currently runs on hardware co-developed by AWS, which is what allows Outposts to work well as an extension of the cloud and deliver its benefits. Those benefits would be lost with PowerOne. In addition, according to AWS, Outposts is itself enormously scalable. So AWS is out, and Google will only do Kubernetes on-premise, which leaves Microsoft, with Azure Stack, as the only remaining candidate.

All in all, PowerOne is an interesting development; the only question is whether Dell has simply arrived too late. We expect other companies, such as HPE, Lenovo and Huawei, to respond in one way or another. Either way, running your own data center, without the burden of high management and staffing costs, may well remain an option.