Google Anthos was presented at the beginning of 2019 as the platform for modernising applications and, indirectly, the underlying infrastructure. At that time, Google was talking about unlimited possibilities. Now that a year has passed, it is time to do a deep dive into Anthos. For what purposes should you use Google Anthos, and what distinguishes Anthos from a standard Kubernetes container platform?

Google Anthos consists of several managed services that add value to the Kubernetes container platform. Before we go deeper into this, it is good to take a moment to look at the advantages of containers and Kubernetes.

Containers form the base of the modern application architecture

With a modern application architecture, large applications are broken down into smaller pieces, with a focus on functions and services that each have their own primary task and can perform it independently. These parts are all placed in separate containers. As a result, an application no longer consists of one large code base, but of dozens or perhaps hundreds of different containers. Together, these functions and services still form the complete application. When they need each other, they usually communicate through APIs over a network connection.

What is a container?

A container is an isolated environment with application code and libraries, in which the operating system is virtualized from the underlying platform. Containers are much more lightweight than, for example, VMs, which virtualize an entire underlying hardware stack. Containers share the resources of an underlying VM or appliance, boot up much faster and use only a fraction of the memory needed to boot an entire operating system. Technically, containers run on hardware, virtual or physical. Multiple instances of the same container can also be active at the same time. As a result, each service or application in a container can scale according to its needs. Scaling per container is very efficient and makes it possible to achieve a high level of availability for the complete application.

With containers, developers can create predictable environments that logically separate functions and services. Dependencies are handled for each application component, which makes a container safer, more stable and more efficient. A specific runtime version or software library is guaranteed to be consistent regardless of where the service and container are used. The advantage is that troubleshooting takes less time: when testing, it can be assumed that a container in a test environment behaves exactly the same as in production. Another advantage is that functions and services can be developed and improved separately from each other. Releasing a change is a matter of replacing a specific container with a container that contains the new version of a function or service. All this increases the productivity of a continuous development process.

Advantages of containers

- A container is an isolated environment. Specific software dependencies are handled per container. CPU, memory, storage and network resources are virtualized at the level of the operating system so that containers are logically separated from each other;

- Because the operating system is virtualized from the underlying platform, that platform can be patched and kept up to date independently of the application, which also makes it safer. The cloud provider or supplier often does this maintenance;

- Containers guarantee a consistent implementation, regardless of the target environment. A container in a development environment on a laptop behaves identically in production on a container platform;

- Containers start up faster and use a fraction of the resources compared to applications on traditional VMs. Resource requirements can be determined per container, as well as the number of containers per service.

Existing Applications

The easiest way to embrace this modern application architecture is to redesign and rebuild applications from scratch, so that the best way to divide an application into containers can be taken into account from the start. However, many organizations have a large application landscape and cannot rebuild all of it. Eventually, many existing (commercial) applications will also have to be migrated. It will then have to be examined whether they can be split up into smaller parts and packaged in containers.

Splitting up an application is quite complex, but there is another element that makes it even more complex. Containers only contain the application code and its libraries; data and files are stored outside the container, for example in an external (persistent) storage solution and on a database server. This can be a database or storage service in the cloud, or servers on-premises. All user data is stored there, for example, as well as the photo files and PDFs used within an application.

Google is one of the first to have developed a solution for this, called Anthos Migrate, which we will elaborate on later in this article.

Kubernetes

To run containers, you not only need hardware from a cloud provider or vendor, but also a software platform, known as a container orchestration platform. Today, the industry has overwhelmingly chosen Kubernetes as the standard for running and managing containers.

The most important thing about Kubernetes is that it is a container platform that can be used anywhere: on-premises, in the cloud or as a hybrid/multicloud solution. With Kubernetes, services can be automatically rolled out, rolled back and scaled up or down according to the needs of the moment. Containers are monitored continuously and restarted if necessary. Services are only offered once it is certain that the containers have booted up correctly. Kubernetes is also managed declaratively. This means that you describe the desired state of your applications and their containers. Kubernetes evaluates the current state and determines which steps are needed to get back to the desired state. In a hybrid or multicloud solution, this can also mean performing a failover.
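The declarative model described above can be illustrated with a small sketch. This is not how Kubernetes is implemented; it is a simplified illustration in Python, and the `ServiceState` type, the `plan_actions` function and the service names are all hypothetical. It only shows the idea of comparing a desired state with the current state and deriving the steps needed to converge.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceState:
    """Hypothetical, simplified state of one service."""
    image: str
    replicas: int


def plan_actions(desired: dict[str, ServiceState],
                 current: dict[str, ServiceState]) -> list[str]:
    """Compare desired and current state and list the steps needed to converge."""
    actions = []
    for name, want in sorted(desired.items()):
        have = current.get(name)
        if have is None:
            # The service is described but not running yet.
            actions.append(f"create {name} with {want.replicas} replicas of {want.image}")
            continue
        if have.image != want.image:
            actions.append(f"roll out {name}: {have.image} -> {want.image}")
        if have.replicas != want.replicas:
            verb = "scale up" if want.replicas > have.replicas else "scale down"
            actions.append(f"{verb} {name}: {have.replicas} -> {want.replicas}")
    # Anything running that is no longer described gets removed.
    for name in sorted(set(current) - set(desired)):
        actions.append(f"delete {name}")
    return actions


desired = {
    "web": ServiceState(image="web:2.0", replicas=3),
    "api": ServiceState(image="api:1.4", replicas=2),
}
current = {
    "web": ServiceState(image="web:1.9", replicas=3),
    "api": ServiceState(image="api:1.4", replicas=1),
    "legacy": ServiceState(image="legacy:0.1", replicas=1),
}

for step in plan_actions(desired, current):
    print(step)
```

The point of the sketch is that the operator never issues the individual steps. Only the desired state is written down, and the platform works out the difference on every evaluation.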

All major cloud providers and manufacturers now support Kubernetes, either through a Kubernetes platform within their cloud environment or by supporting it within their existing software stack.

Kubernetes was built by Google, but Google donated the project to the open-source community, so everyone can contribute to Kubernetes. These contributions from all over the world have made it a mature platform and a standard in the world of containerization. Kubernetes is therefore unlikely to disappear any time soon, and its development will continue.

We took a moment to find out who the biggest contributors are:

- Independent developers

- Red Hat

- Huawei

- ZTE

- Microsoft

- VMware

- IBM

The list is much longer, but these companies are currently ensuring that Kubernetes is developed further.

Anthos as a multicloud platform for your modern applications

Although Google has donated Kubernetes to the open-source community, development at Google has not stopped. It still actively contributes to the open-source version of Kubernetes, but it also develops additional commercial managed services, which in turn use Kubernetes and add value to the container platform. Google is not alone in this; many cloud providers and manufacturers see the open-source version of Kubernetes as a starting point for adding additional services.

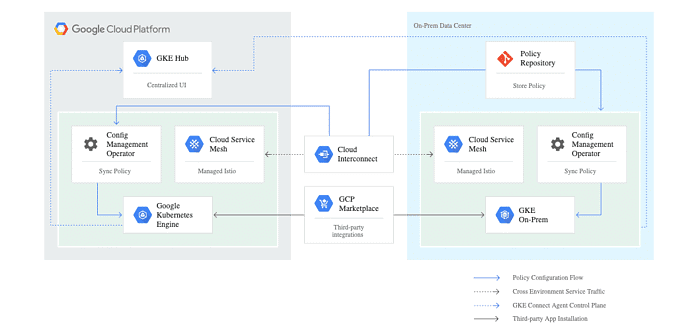

Google Anthos is a set of managed services that take the container service to a higher level. These managed services help customers configure and manage Kubernetes clusters, and improve security, compliance and monitoring. Anthos is suitable for both hybrid and multicloud environments.

You can roll out Google Anthos anywhere you prefer, on-premises in your data center or in the public cloud. By anywhere we mean anywhere, even in competing clouds of Microsoft Azure and Amazon Web Services, or one of the many others.

Why Google Anthos?

Now that we have explained the advantages of Kubernetes and summarised what Anthos has to offer, it is time to take a closer look at Anthos. Where Kubernetes is open source, Anthos is not. As mentioned earlier, Anthos is a set of managed services around Google’s Kubernetes Platform. These managed services are partly applicable to Kubernetes engines of other providers, as long as they adhere to the open-source standard.

Google Kubernetes Engine (GKE)

Although Anthos adheres to the Kubernetes open-source standards and is therefore able to work with virtually all Kubernetes clusters from different providers, there are a number of advantages that Anthos offers with its own Google Kubernetes Engine (GKE).

When new versions of Kubernetes are released, with new features, optimizations or security patches, they need to be rolled out to all active clusters. The pace at which this happens varies per provider. A Kubernetes update must also be able to deal with the provider’s own implementations, and it must be tested properly, because no cluster may fail due to an incorrect software update.

If you combine Kubernetes clusters from different providers, it is possible that performance will vary, that certain functionalities will not work properly, or that clusters will remain vulnerable due to missing patches.

By using a managed Kubernetes engine, you don’t have to perform all those updates and tests yourself; this is done for you. The entire maintenance of the cluster is done by the provider, in this case Google.

Anthos everywhere (on-premise, AWS, Azure and more)

As part of Anthos, GKE on-premise has been introduced. GKE on-premise allows you to roll out GKE in your own data center. There are also specific versions for AWS and Azure, making it possible to roll out Anthos there as well. With the right configuration this is done automatically: Anthos can talk directly to the IaaS APIs of AWS and Azure to build a GKE cluster in their cloud environments. When you choose to work with GKE in all data centers and clouds, Google Anthos can guarantee that the Kubernetes version, and therefore the experience, is the same everywhere. Google takes care of testing the updates for GKE, which can then be rolled out across all GKE clusters at the touch of a button.

Centrally manage Kubernetes clusters

Determining where you are going to roll out your Kubernetes clusters and setting up these clusters is the first step. The next step is to automate the management of your clusters and the underlying infrastructure as much as possible. This way you can focus on building modern applications instead of managing infrastructure.

Centralized and easier management of your Kubernetes clusters is one of the possibilities of Google Anthos. These can be GKE clusters, but also clusters from other suppliers. If companies want to simplify their Kubernetes management and configuration, Anthos is definitely a good option.

If Kubernetes clusters from other providers are used, the management is limited to configurations, policies and compliance. For upgrading and applying security updates to external Kubernetes clusters, you depend on the specific vendor, as mentioned earlier. Google can only offer this for its own GKE.

Anthos Config Management

For the configuration of the Kubernetes clusters, Google has developed Anthos Config Management. With Anthos Config Management, you control the configuration of the clusters. You can centrally manage the configurations of all Anthos clusters from a Git repository (for example on GitHub or GitLab). Once the configurations are modified, they can be rolled out directly to all connected clusters. You can also configure it to disable local control of the Kubernetes clusters themselves. This way, the centrally managed configuration is always leading, and you can be sure that all your clusters are configured in the same way.
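The idea that one central configuration is always leading can be sketched in a few lines of Python. This is only an illustration of the principle, not the Anthos Config Management implementation; the `sync_clusters` function, the cluster names and the configuration keys are assumptions made for the example.

```python
from __future__ import annotations

import copy


def sync_clusters(repo_config: dict, clusters: dict[str, dict]) -> list[str]:
    """Reset every cluster whose configuration differs from the repository.

    Returns the names of the clusters that had drifted and were reset.
    """
    drifted = []
    for name in sorted(clusters):
        if clusters[name] != repo_config:
            # A local change is overwritten: the repository is the source of truth.
            clusters[name] = copy.deepcopy(repo_config)
            drifted.append(name)
    return drifted


# Hypothetical central configuration and three connected clusters.
repo_config = {"namespace_cpu_quota": 16, "network_policy": "deny-all"}
clusters = {
    "on-prem": {"namespace_cpu_quota": 16, "network_policy": "deny-all"},
    "aws": {"namespace_cpu_quota": 64, "network_policy": "deny-all"},  # changed locally
    "azure": {"namespace_cpu_quota": 16, "network_policy": "deny-all"},
}

print(sync_clusters(repo_config, clusters))
```

Running the same synchronisation a second time finds nothing to reset, which is the property you want from a configuration that is applied repeatedly.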

Configuring the clusters includes standard things like quotas for the amount of compute and memory. Policies and compliance can also be configured, for example who has access to a cluster. Tailor-made compliance rules can be applied as well. If a configuration violates the compliance rules, the configuration will simply be rejected or automatically reset.
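As a rough illustration of rejecting a configuration that violates compliance rules, consider the following sketch. The rule names, the configuration fields and both functions are hypothetical and chosen only for this example; they do not reflect the actual policy format used by Anthos.

```python
from __future__ import annotations

# Hypothetical compliance rules; the field names are illustrative only.
RULES = {
    "max_cpu_cores": 8,
    "max_memory_gib": 32,
    "allowed_admin_groups": {"platform-team", "security-team"},
}


def find_violations(config: dict, rules: dict = RULES) -> list[str]:
    """Return human-readable violations; an empty list means compliant."""
    violations = []
    if config["cpu_cores"] > rules["max_cpu_cores"]:
        violations.append(
            f"cpu_cores {config['cpu_cores']} exceeds the limit of {rules['max_cpu_cores']}"
        )
    if config["memory_gib"] > rules["max_memory_gib"]:
        violations.append(
            f"memory_gib {config['memory_gib']} exceeds the limit of {rules['max_memory_gib']}"
        )
    for group in sorted(set(config["admin_groups"]) - rules["allowed_admin_groups"]):
        violations.append(f"admin group '{group}' is not allowed")
    return violations


def apply_config(config: dict, active: dict) -> dict:
    """Accept a compliant configuration; otherwise reject it and keep the active one."""
    return config if not find_violations(config) else active


config_ok = {"cpu_cores": 4, "memory_gib": 16, "admin_groups": ["platform-team"]}
config_bad = {"cpu_cores": 16, "memory_gib": 16, "admin_groups": ["platform-team", "interns"]}

for problem in find_violations(config_bad):
    print(problem)
```

Here `apply_config(config_bad, active=config_ok)` returns the previously active configuration, which corresponds to the "rejected" case in the text.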

Configurations for Kubernetes are extensive and can therefore quickly become complex. Anthos helps by testing the configurations for possible problems. This allows you to minimize errors.

Anthos Service Mesh

With Anthos Service Mesh, Google takes over some more complicated tasks. With this tool, you can arrange things like security and monitoring between the different containers. Anthos Service Mesh uses the open-source project Istio. Istio is an open-source service layer that is attached to a container as a so-called sidecar in order to monitor it. The Service Mesh operates independently of the container but runs close to it. All traffic passes through the Service Mesh, which produces telemetry data for logging and monitoring the container. This data can be analysed from the Google Cloud console.

Especially in the case of malfunctions or parts that do not work smoothly, all this telemetry and log data helps to locate the problem quickly and to apply a solution sooner.

With all this log information, the Service Mesh can also map out exactly which containers communicate with each other and over which ports. These ports can be opened or closed. It is even possible to implement a Zero Trust Security Model, in which known-good behaviour is recorded once and everything else is blocked.
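The "record good behaviour once, block everything else" principle can be sketched as an allowlist built from observed traffic. This is a conceptual illustration in Python only; the `Flow` type, the function names and the service names are hypothetical, and a real service mesh enforces such rules in its proxies rather than in application code.

```python
from __future__ import annotations

from typing import NamedTuple


class Flow(NamedTuple):
    """One observed connection between two services (hypothetical model)."""
    source: str
    destination: str
    port: int


def learn_allowlist(observed: list[Flow]) -> frozenset[Flow]:
    """Record the known-good flows once; duplicates collapse automatically."""
    return frozenset(observed)


def is_allowed(flow: Flow, allowlist: frozenset[Flow]) -> bool:
    """Default deny: only flows that were recorded are permitted."""
    return flow in allowlist


# Traffic seen during a period of known-good behaviour.
observed = [
    Flow("web", "api", 8080),
    Flow("api", "db", 5432),
    Flow("web", "api", 8080),
]
allowlist = learn_allowlist(observed)

print(is_allowed(Flow("web", "api", 8080), allowlist))  # recorded flow
print(is_allowed(Flow("web", "db", 5432), allowlist))   # never observed, so blocked
```

The important design choice is the default: a flow that was never recorded is denied, instead of being allowed until someone explicitly blocks it.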

In many images and texts, Google still refers to Stackdriver as the main tool for analyzing this data. Since the end of February 2020, Google has rebranded this as the Google Cloud Operations Suite, which combines all cloud logging and monitoring.

Google has also added the ability to issue security certificates through a so-called Mesh CA. It allows Anthos Service Mesh to issue certificates for the encryption of connections. The Service Mesh can then ensure that all connections between containers and clusters are encrypted. Especially for companies that want to run multiple clusters in different data centers, this is an essential feature.

Which workloads can you run with Anthos and which are suitable for Anthos Migrate?

Currently, web and application servers are the easiest to migrate with Anthos. Business middleware such as Tomcat, LAMP stacks and smaller databases can also be migrated to Kubernetes containers with Anthos. Migrating Windows applications to Windows containers that can run in GKE Windows Node Pools is currently in preview.

Applications that can be migrated but depend on a large underlying database will have to be split from that database. The application itself can simply be migrated to one or more containers, but the database will need to be moved to its own VM or, even better, to a PaaS database service. This is the most efficient solution. Running large databases in Kubernetes is technically possible, but it is not recommended.

Containers are also very suitable for test environments and workloads that require few resources.

While writing this article, it became clear to us that Anthos is still being actively developed. We expect Google Cloud to add further features in the near future to improve Anthos and the multicloud world.