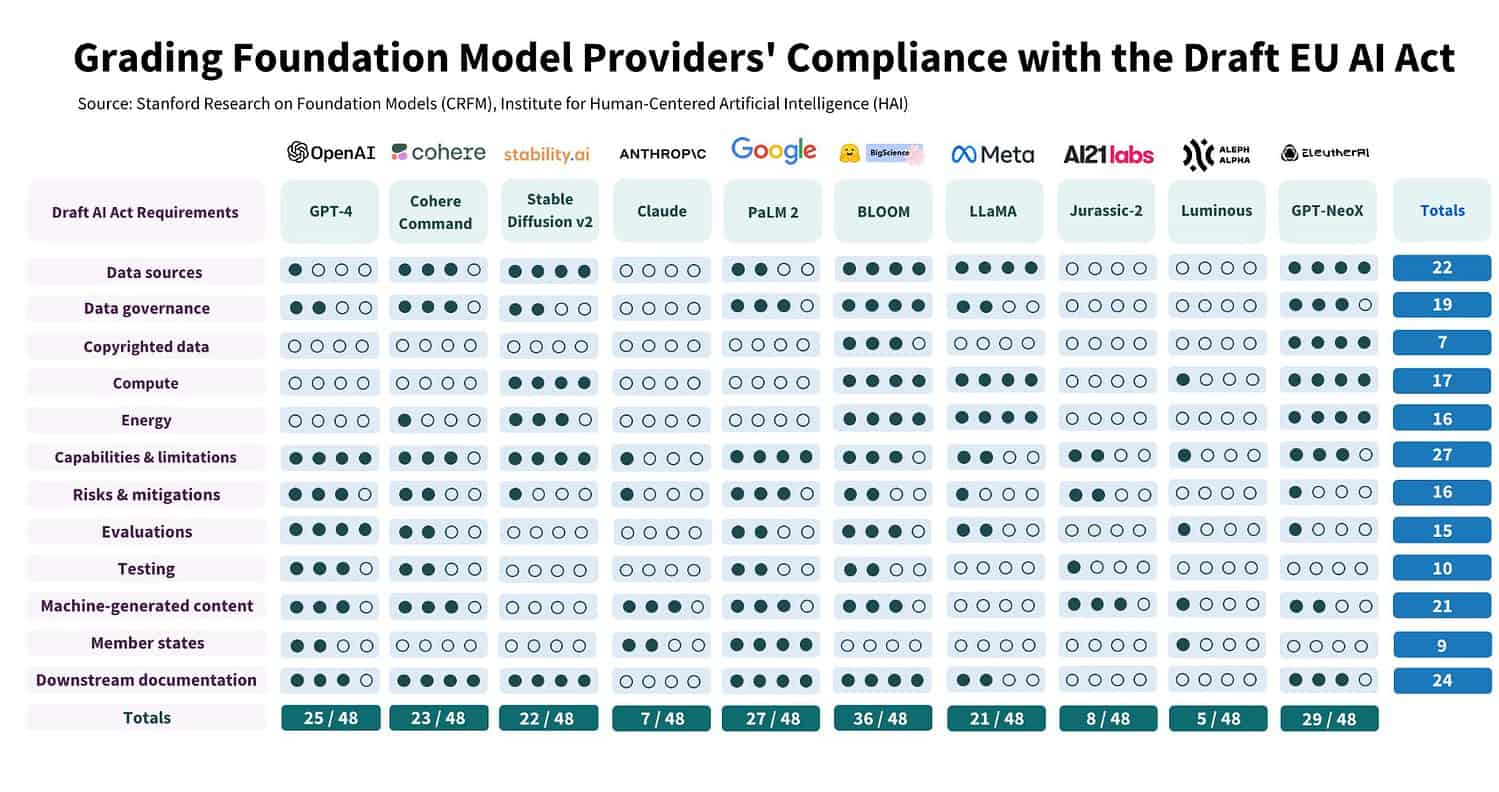

Now that a preliminary version of the AI Act has been approved, AI developers can start bringing their models into line with it. Researchers at Stanford University have already done some of that groundwork. They conclude that BigScience’s BLOOM model currently ranks best. With a score of 36 out of 48, it is not yet perfect, but it stands in sharp contrast to Claude, Jurassic-2 and Luminous, which each score fewer than 10 points.

Scientists at Stanford University examined whether foundation models comply with the AI Act. The conclusion is clear: “They largely do not.” The biggest stumbling blocks are transparency about the sources used to train the models and disclosure of the models’ key properties.

It is not a lost cause, however: the researchers argue that complying with the law is feasible for the foundation models they analyzed, although some providers have more work to do than others.

Weakest model cooperates with HPE

AI21 Labs, Aleph Alpha and Anthropic, for example, do not even meet a quarter of the requirements with their models. At first glance, these do not appear to be the most high-profile language models. Digging a little deeper, however, it is worth noting that Aleph Alpha’s Luminous has already been signed up for HPE GreenLake for Large Language Models. Yet this foundation model has the worst score in the study: 5 out of 48.

The study bases the overall score on 12 components, all of which are addressed by the AI Act. On each component, a foundation model can score from 0 (worst) to 4 (best), for a maximum of 48 points. Luminous scores 1 out of 4 on the components “compute,” “capabilities & limitations,” “evaluations,” “machine-generated content” and “member states,” giving it 5 points in total. The scores reflect whether the company is transparent about these issues. “Compute,” for example, measures whether the provider discloses the model size, computing power and training time of the foundation model behind Luminous.

The model scores zero points in the remaining seven categories: ‘data sources,’ ‘data governance,’ ‘copyrighted work,’ ‘energy consumption,’ ‘risk mitigation,’ ‘testing’ and ‘downstream documentation.’
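The scoring rubric can be illustrated with a short Python sketch. It assumes only what the article reports: 12 components, each scored from 0 to 4, and Luminous’s per-component scores. The dictionary layout and the `total_score` helper are hypothetical illustrations, not part of the Stanford study.

```python
# Hypothetical tally of the Luminous scores reported in the article.
# Category labels follow the article's wording; the data layout is illustrative.
MAX_PER_COMPONENT = 4  # each of the 12 components is scored from 0 to 4

luminous_scores = {
    "data sources": 0,
    "data governance": 0,
    "copyrighted work": 0,
    "compute": 1,
    "energy consumption": 0,
    "capabilities & limitations": 1,
    "risk mitigation": 0,
    "evaluations": 1,
    "testing": 0,
    "machine-generated content": 1,
    "member states": 1,
    "downstream documentation": 0,
}


def total_score(scores: dict[str, int]) -> tuple[int, int]:
    """Return (points obtained, maximum possible points) for one model."""
    for name, value in scores.items():
        # Reject any component score outside the 0..4 range.
        if not 0 <= value <= MAX_PER_COMPONENT:
            raise ValueError(f"{name}: score {value} outside 0..{MAX_PER_COMPONENT}")
    return sum(scores.values()), MAX_PER_COMPONENT * len(scores)


points, maximum = total_score(luminous_scores)
print(f"Luminous: {points}/{maximum}")
```

The range check makes the 0-to-4 rule explicit, and the maximum is derived from the number of components rather than hard-coded, so 12 components yield the 48-point ceiling.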

One model is already three-quarters in order

The future looks brighter for BigScience’s BLOOM model. It scores 36 out of 48, which means it already meets three-quarters of the upcoming requirements. BLOOM is an open-source model that researchers can use for free, and it is multilingual.

The much-discussed models from OpenAI, Google and Meta all score around 50 percent. Meta, assessed on LLaMA, stays just below the halfway mark (21/48) and mainly has work to do on transparency about copyrighted sources, its testing method, machine-generated content and the EU member states in which the model is available. OpenAI was assessed on GPT-4 (25/48) and still needs to be more open about copyrighted training data and about the computing power and energy required for training. Google has exactly the same weak points, but with PaLM 2 it scores better in some other categories, for a total of 27 out of 48.
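The percentages quoted above follow from dividing each score by the 48-point maximum. A minimal Python sketch, using only the scores reported in this article (the model labels and the `share` helper are illustrative assumptions), makes the comparison explicit:

```python
# Share of the 48-point maximum for the scores quoted in the article.
MAX_POINTS = 12 * 4  # 12 components, each scored 0 to 4

reported = {
    "BLOOM (BigScience)": 36,
    "PaLM 2 (Google)": 27,
    "GPT-4 (OpenAI)": 25,
    "LLaMA (Meta)": 21,
    "Luminous (Aleph Alpha)": 5,
}


def share(points: int) -> float:
    """Fraction of the maximum score a model obtained."""
    return points / MAX_POINTS


for model, points in reported.items():
    # Compare each score against the halfway mark of 24 points.
    position = "at or above" if points >= MAX_POINTS / 2 else "below"
    print(f"{model:24} {points:2}/{MAX_POINTS} = {share(points):6.1%} ({position} half)")
```

Dividing by 48 shows that BLOOM lands at exactly 75 percent, while Meta’s 21 points fall short of the halfway mark of 24.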

‘AI Act feasible for foundation models’

With this research, Stanford University shows that the approved bill is workable. “Our research shows that it is currently feasible for foundation model providers to comply with the AI Act,” the Stanford researchers said. They go even further, stating that the rules would promote greater transparency across the entire ecosystem.

That sheds an interesting light on the largely negative criticism the bill has received so far. Some of that criticism was even addressed directly to the European Commission in an open letter, in which 150 scientists, executives of EU companies and researchers argued that the AI Act will mainly put a brake on technological development and EU competitiveness. Their main fear is that AI developers will leave the EU.

Interestingly, a scientific study now supports the other side of the story. The European Commission had previously concluded that the allegations were not based on much concrete evidence. According to the Commission, many companies simply adopted the arguments of a few small companies. A study from a reputable university now lends more weight to the legitimacy of the upcoming AI Act.