HPE, too, has not escaped the recent wave of AI developments. It is making LLMs accessible to more organizations through HPE GreenLake for Large Language Models. For HPE, this is just the beginning of its AI journey.

LLMs are definitely of interest for a wide range of applications. Public LLMs in particular are fun and interesting for search engines and other primarily consumer-oriented applications. However, we firmly believe that the future of AI, and of LLMs in particular, for organizations does not lie there. Quite a few organizations are not allowed to, or do not want to, send their data to a public service. Even ChatGPT Business, a version of ChatGPT that doesn’t share anything with the public model, is still a public model. This is not to say that public LLMs have no use cases within organizations. But the most critical processes will not be exposed to them.

HPE GreenLake for Large Language Models

The big question, then, is how organizations should get started with LLMs. They can invest in a supercomputer themselves, but only a few organizations can afford that. Besides the cost, this also requires a lot of specialized knowledge. On top of that, it takes a long time to get everything up and running. Training LLMs in the public cloud removes those last two hurdles, but then you have little control, and the costs can also be substantial due to egress fees. In addition, the HPC capabilities of the public cloud are debatable compared to those of specialized systems.

HPE sees a gap in this market and wants to offer the best of both worlds: training LLMs as a service, but on infrastructure designed for the purpose. HPE can do that because it has Cray in its portfolio. Cray, officially called HPE Cray since its acquisition by HPE, has a long history in supercomputing. HPE is now pairing this powerful hardware with the HPE GreenLake platform, which should make LLMs accessible to many more customers. They can work with both open-source and proprietary models on it.

An example of the latter is Luminous from Aleph Alpha, a launch partner for this new GreenLake service. The model is available in English, French, German, Italian and Spanish. It allows organizations to use their own data to train and fine-tune an AI model specific to their needs.

HPE has big plans for AI

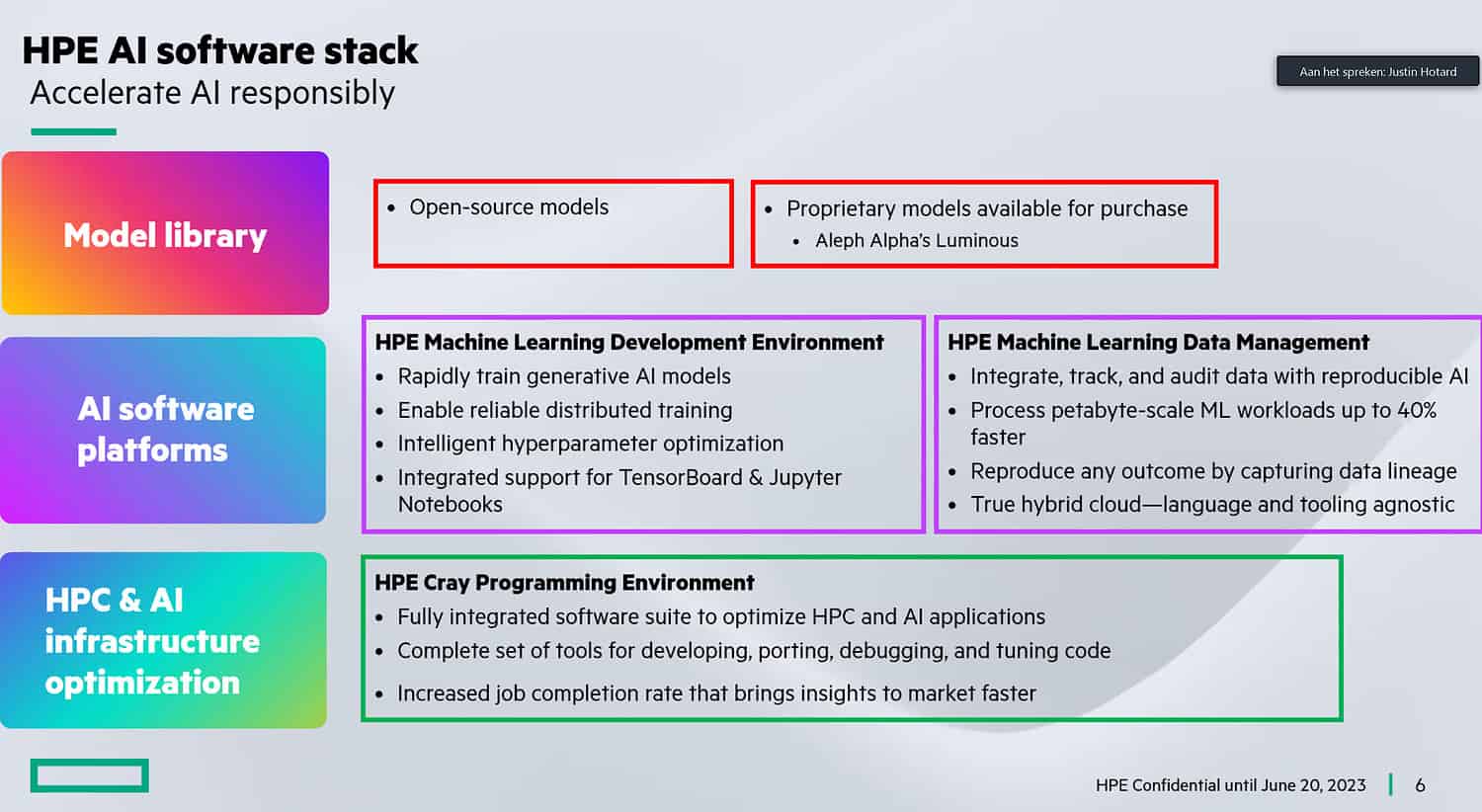

It won’t stop at HPE GreenLake for Large Language Models, HPE promises. This is a logical starting point, of course, as generative AI and LLMs are very popular right now. Looking further ahead, HPE has much more in store for this combination of Cray and GreenLake. It will work on applications for climate modeling, drug research, financial services and more. The new platform also supports HPE’s own AI/ML software. This includes HPE Machine Learning Development Environment for training models and HPE Machine Learning Data Management Software for ensuring the models are reliable and accurate.

So we haven’t heard the last from HPE when it comes to AI. HPE GreenLake for Large Language Models is the first of many services to come. It will become available in the US at the end of this year, with Europe following in early 2024. HPE is already accepting orders for the new service.