Microsoft has introduced two ‘small’ Orca 2 LLMs that perform on par with, or better than, competitors’ larger LLMs.

With the introduction, Microsoft aims to demonstrate that LLMs do not necessarily need a large number of parameters to deliver good performance. Both Orca 2 LLMs build on the original 13B Orca model presented several months ago. That model showed strong reasoning capabilities by imitating the step-by-step reasoning traces of larger and more powerful models.

Reasoning capabilities

The Orca 2 LLMs come in sizes of 7 billion and 13 billion parameters. Microsoft improved the training signals and methods so that these smaller models gain the enhanced reasoning capabilities normally found in larger models.

Rather than relying on imitation, as the original Orca model did, the training taught the models several different reasoning techniques. In addition, Microsoft developers taught the models to determine the most effective reasoning technique for each task.

Benchmarked against larger LLMs

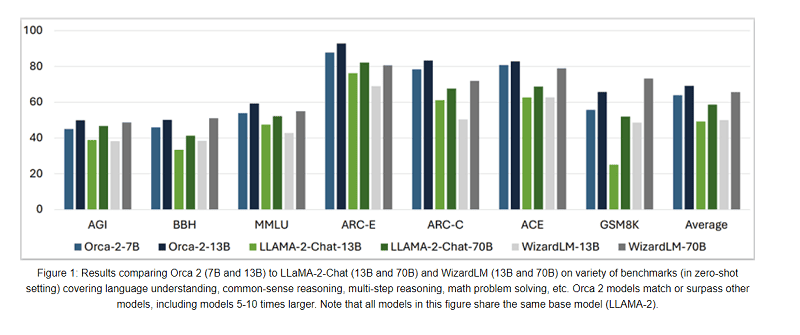

The Orca 2 models were tested on 15 benchmarks against a number of larger LLMs, some of which were five to ten times larger. The tests showed that both the Orca 2 7B and 13B models outperformed, for example, Meta’s Llama-2-Chat-13B model.

They also outperformed the WizardLM-13B and WizardLM-70B models. In one particular configuration, however, WizardLM-70B still outperformed both Meta’s Llama models and Microsoft’s Orca 2 models.

Other vendors

Microsoft is not the only tech giant exploring the potential of smaller LLMs. China’s 01.AI also recently presented a “small” LLM of 34 billion parameters that outperforms larger models. France’s Mistral AI also offers a 7B LLM that rivals larger models.