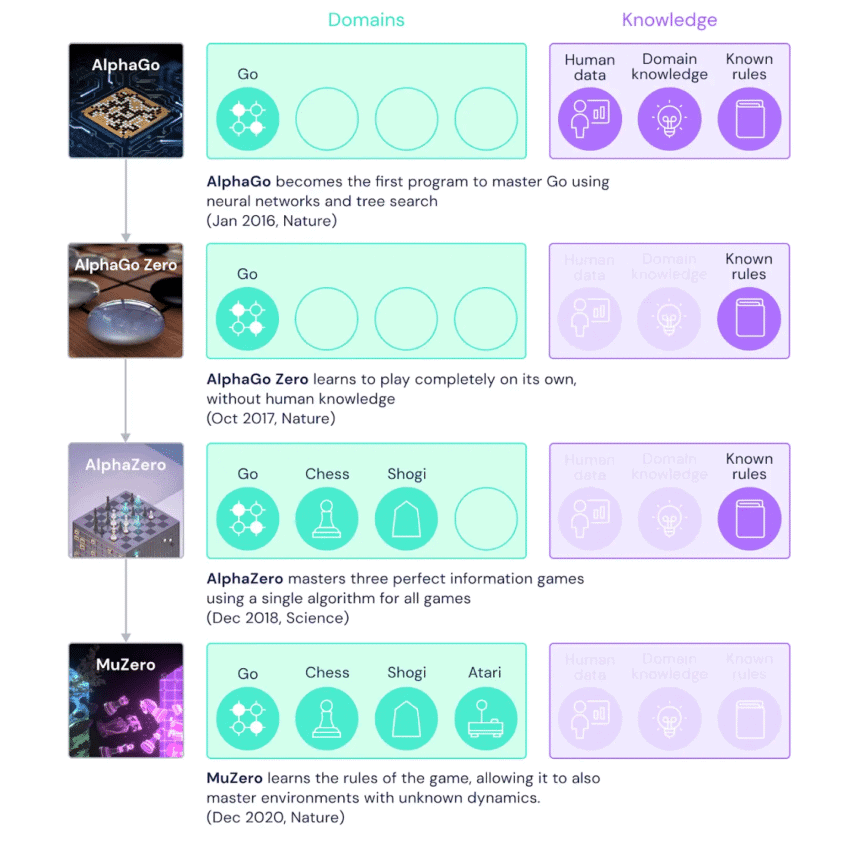

Alphabet subsidiary DeepMind has built a new version of its AI software that is better at coming up with new strategies in unfamiliar situations. The AI, called MuZero, has mastered Go, chess, shogi and various Atari games without having the rules explained to it.

DeepMind describes the improvements in MuZero in a blog post. According to the company, artificial intelligence has until now struggled with environments where the rules and dynamics are unknown and complex. MuZero solves this problem by focusing only on the most important aspects of the environment.

Challenges such as playing Pac-Man were difficult for earlier versions of the AI, but MuZero has achieved a ‘state of the art’ result on this Atari benchmark. The AI also performed on par with its predecessor AlphaZero in games like Go, chess and shogi. Unlike with its predecessors, the researchers did not have to explain the game rules to the AI.

Focus on the most important aspects

DeepMind compares this focus on the most important aspects of the environment to human intuition. When people see dark clouds in the sky, they predict that it will rain and take an umbrella before stepping outside. This is a skill that humans learn quickly, but one that AI still has trouble with.

MuZero works in a way that is closer to the human example. Instead of creating a complete model of the environment, the AI models only the elements that are relevant to its own decision-making. DeepMind gives the example that it is more useful to know that an umbrella keeps you dry than to model the pattern of all the raindrops in the air.

Because of this different approach, the AI is better at tasks that don’t have clear boundaries the way simple board games do. The researchers tested MuZero on 57 different Atari games, and the AI quickly reached a high level.