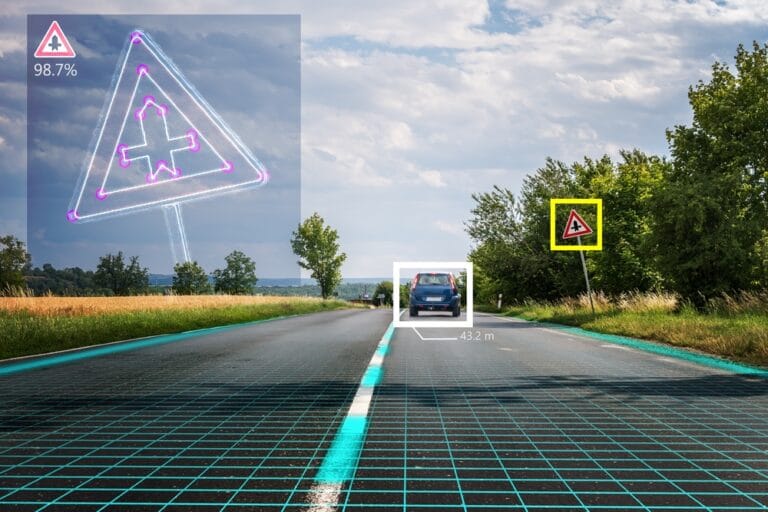

Researchers have found an interesting way to confuse computer vision algorithms. When a completely unrelated object is placed next to the object they are meant to recognise, the algorithms no longer seem to understand what they are seeing. The technique could be used to fool self-driving cars, for example.

Paul Ziegler, the CEO of Redflare, and Yin Minn Pa Pa, a researcher at Deloitte Tohmatsu, presented the findings at the Black Hat Asia conference in a talk called “Hiding Objects From Computer Vision By Exploiting Correlation Biases”, as reported by The Register.

Algorithms rely on context

The two researchers looked at several computer vision systems, including those of Microsoft and Google. They used images from the Common Objects In Context (COCO) database. Ziegler and Pa Pa say that many algorithms use context to understand what is in an image. However, when an object is depicted alongside something that has nothing to do with it, the algorithm gets confused. For example, a plant is seldom recognised if it is depicted on a T-shirt: when an algorithm sees a person, it does not expect to see a plant in the immediate vicinity. Conversely, a random round object near a dog is quickly recognised as a frisbee, because the algorithm correlates dogs with the toy.
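To make the idea of a correlation bias concrete, here is a toy sketch in Python. It is not the researchers' method, nor how any Microsoft or Google system works internally. The scores, the co-occurrence table and the function names are all invented for illustration, on the assumption that a detector multiplies its visual confidence by how often two labels appear together.

```python
# Toy illustration only: every number and name here is hypothetical and is
# not taken from the talk or from any real computer vision system.

# Assumed prior: how plausible a label is when a given context label is present.
CO_OCCURRENCE = {
    ("dog", "frisbee"): 0.9,
    ("dog", "clock"): 0.2,
    ("person", "potted plant"): 0.1,
}
DEFAULT_PRIOR = 0.5  # used when no context is known for the pair


def rescore(candidates, context_label):
    """Scale each candidate's visual score by its prior given the context."""
    return {
        label: score * CO_OCCURRENCE.get((context_label, label), DEFAULT_PRIOR)
        for label, score in candidates.items()
    }


def best_label(candidates, context_label, threshold=0.2):
    """Return the top rescored label, or None if nothing clears the threshold."""
    rescored = rescore(candidates, context_label)
    label, score = max(rescored.items(), key=lambda item: item[1])
    return label if score >= threshold else None


# A round object that looks slightly more like a clock than a frisbee.
round_object = {"frisbee": 0.4, "clock": 0.5}
# A plant printed on a T-shirt, seen clearly on its own.
printed_plant = {"potted plant": 0.7}

print(best_label(round_object, None))       # no context
print(best_label(round_object, "dog"))      # a dog is nearby
print(best_label(printed_plant, None))      # no context
print(best_label(printed_plant, "person"))  # a person is wearing it
```

In this sketch the nearby dog tips the round object towards "frisbee", while the person pushes the plant below the detection threshold so that nothing is reported. That mirrors the two effects described above, under the stated assumptions.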

Creating confusion

This observation led the researchers to test whether the effect could also be used to fool the algorithms deliberately. They found that when dogs and cats were put together in an image, the algorithms thought they saw a horse. A more worrying result came when the researchers showed an algorithm a picture of a stop sign with a piece of fruit next to it: the piece of fruit was recognised, but the stop sign was not. The stop sign also went unrecognised in other unusual combinations with unrelated objects.

Malicious applications

This knowledge could be turned into an attack in which an attacker deliberately places confusing objects next to or behind a stop sign to force self-driving cars into mistakes. The researchers did not say how this is less cumbersome than simply damaging or removing the sign. The same approach could also be used to circumvent an upload filter for certain images or to confuse camera-only shops, such as Amazon Go. The researchers have not tried such attacks.