Nvidia is the main beneficiary of this year’s AI hype. At SIGGRAPH in Los Angeles, the GPU manufacturer revealed that it is beefing up its offerings even further. A new server product, upgrades to its GH200 Superchip and new software were all on show.

Nvidia CEO Jensen Huang is one of the industry’s most enthusiastic AI evangelists, especially now that his company’s bet on the technology has paid off many times over. For its 2018 GPU line, the company developed a number of innovations such as DLSS (Deep Learning Super Sampling), which devoted a hefty share of the chips’ silicon to AI hardware. DLSS upscales rendered graphics, often delivering impressive results from hardware that is less powerful on paper.

The company has focused on accelerated computing since its founding, but for decades its foothold was mainly in the gaming market. That gradually changed, and over the past 10 years it has built up a market share of around 90 percent in datacenter GPUs. Now that the world has become enamoured with AI, it is capitalizing on that position once again.

Ada Lovelace architecture

Nvidia’s shift in focus from gaming to enterprise has been going on for some time, but AI has accelerated it. This has never been more evident than now: the new L40S GPUs are built on the Ada Lovelace architecture, which until now was best known from the RTX 40-series gaming GPUs. Evidently, the company is now keen to deploy these chips in the enterprise market as well. Customers can fit up to eight L40S cards into the new OVX servers, each with 48GB of video memory and 1.45 petaflops of tensor-processing power. Tensor processing, the matrix math at the heart of neural networks, is critical for AI workloads.

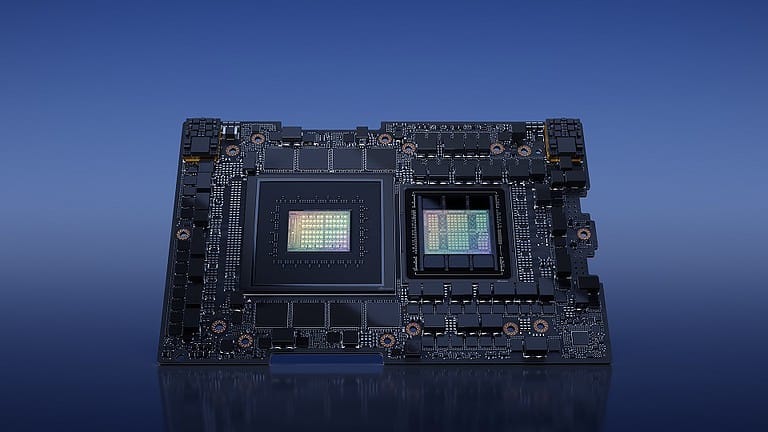

The GH200 Superchip also received an improved spec sheet. This CPU-GPU combination, designed for AI supercomputing, had already been announced, but Nvidia has now confirmed that it will use HBM3e memory. Since AI benefits tremendously from large amounts of fast memory, this is a logical step for the platform.

Software: AI Workbench

Nvidia also has news on the software front: alongside an upgrade to its AI Enterprise line, it is introducing AI Workbench. This is a do-it-yourself kit for developing and customizing AI, complete with pre-trained models. It is reminiscent of the recently announced collaboration between IBM and Meta, under which Meta’s LLaMA 2 model can be selected within IBM watsonx.ai.


With these announcements, Nvidia is showing how it intends to capitalize on its AI lead. The question remains whether it can meet the huge demand. A week ago, industry insiders were trading gossip about who would receive Nvidia’s coveted H100 GPUs, and in what quantities. The answer may well determine how long it will be before we see, say, GPT-5 from OpenAI or other generative AI innovations.