Intel has unveiled details about the architecture of next year’s Xeon processors for servers and workstations. While the fifth generation of these chips is not even on the market yet, we’re already getting a decent insight into what its successors will bring to the table. According to Intel, customers have widely varying requirements. That’s why the company is opting for two variants of its end products: one processor line aimed at brute force and another built for maximum efficiency and density.

While the hype surrounding AI has created enormous demand for GPUs, Intel notes that server CPUs are still sought after. The heaviest HPC workloads call for strong individual CPU cores, while other customers, according to Intel, mainly need servers that can handle many different, less intensive tasks.

Instead of an all-purpose monolithic chip, Intel is opting for two specific solutions based on a single platform and socket: one built with P (Performance) cores, the other with E (Efficiency) cores. This terminology is not new to Intel: most of its current consumer chips already combine P and E cores. The processors will be made up of multiple chiplets, making them modular, with the I/O silicon produced separately from the compute cores.

Baked on Intel 3

Both types of cores are baked on Intel 3, the chip company’s newest manufacturing process. The E-core variant, codenamed Sierra Forest, will come out in the first half of 2024, while the P-core-based Granite Rapids chips are to arrive “shortly thereafter.” This puts them slightly behind previously stated roadmaps: at one point, Intel 3 was scheduled for Q3 2023.

Regardless, both promise a big step up in performance-per-watt, especially Sierra Forest. Notably, both chips will run the same firmware and support the same operating systems, so the choice between them comes down mainly to the workload end users have in mind. Those who want the best performance can opt for Granite Rapids products, while Sierra Forest is aimed at customers who primarily need efficiency and as many cores as possible. Intel talks about fitting 2.5 times as many cores into a server rack as with fourth-generation Xeons. Earlier rumours spoke of up to 144 cores, although Intel has not yet given an exact number.

And AI workloads?

Every tech company gets asked how it is turning the AI hype into products. Intel points to the AI performance of its previously announced fourth-generation Xeons: compared with EPYC processors from its main competitor AMD, these chips are said to run the BERT AI model 5.6 times faster and to be 4.7 times more efficient.
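The reported figures do not define “more efficient”, and the test conditions behind Intel’s comparison are not given here. If efficiency is read as performance per watt (an assumption), the two ratios together imply how the power draw compares. A minimal sketch of that arithmetic, with illustrative variable names:

```python
# Hypothetical reading: "efficiency" means performance per watt.
speedup = 5.6          # claimed BERT performance ratio, Xeon vs. EPYC
efficiency_gain = 4.7  # claimed efficiency ratio, assumed here to be perf/W

# perf_per_watt_ratio = perf_ratio / power_ratio, therefore:
implied_power_ratio = speedup / efficiency_gain

print(f"Implied power draw: about {implied_power_ratio:.2f}x that of the EPYC system")
```

Under that assumption, the Xeon system would draw roughly 1.19 times the power while finishing the work 5.6 times faster; the speedup on its own does not produce the efficiency figure.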

Improvements for the 2024 Xeons focus particularly on memory bandwidth, on which AI workloads rely heavily. There are options for DDR5 or MCR (Multiplexer Combined Ranks) DIMMs, with maximum speeds of 6400 MT/s and 8000 MT/s, respectively. Up to 12 memory channels are supported, for a theoretical bandwidth of 614.4 to 768 GB/s. That is two to two and a half times the bandwidth of fourth-generation Xeons. Up to 136 PCIe 5.0 lanes are available for connecting PCIe devices ranging from GPUs to NVMe storage.
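The bandwidth figures follow from simple arithmetic: channels times transfer rate times bytes per transfer. The sketch below assumes a 64-bit (8-byte) data path per channel and uses eight channels of DDR5-4800 as the fourth-generation Xeon baseline; the function name `peak_bandwidth_gbs` is purely illustrative.

```python
# Minimal sketch: theoretical peak memory bandwidth = channels x MT/s x bytes per transfer.
# Assumption: each channel has a 64-bit (8-byte) data path; ECC bits are ignored.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Return the theoretical peak bandwidth in decimal GB/s (10^9 bytes per second)."""
    bytes_per_s = channels * mega_transfers_per_s * 1_000_000 * BYTES_PER_TRANSFER
    return bytes_per_s / 1_000_000_000


# Assumed baseline: fourth-generation Xeon with 8 channels of DDR5-4800.
gen4 = peak_bandwidth_gbs(8, 4800)

configs = {
    "DDR5-6400": peak_bandwidth_gbs(12, 6400),
    "MCR-8000": peak_bandwidth_gbs(12, 8000),
}

for label, bw in configs.items():
    print(f"{label}: {bw:.1f} GB/s ({bw / gen4:.2f}x the fourth-generation baseline)")
```

Running it prints 614.4 GB/s and 768.0 GB/s, or 2.00x and 2.50x the assumed baseline of 307.2 GB/s. Real-world throughput will be lower than these theoretical peaks.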

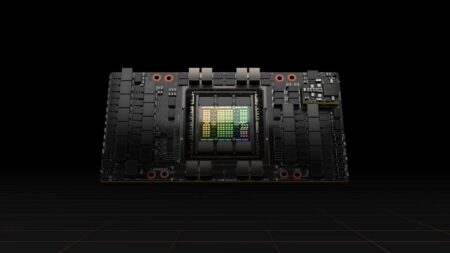

Ultimately, a server system needs both a CPU and a GPU to run AI workloads competently. For giant generative AI applications, most parties will turn to Nvidia, for example to its GH200 Superchip. However, some AI tasks don’t parallelize well and are therefore a poor match for a GPU: many predictive workloads depend on sequential computations, for which a CPU is better suited. As a result, the new generation of Xeons will certainly attract interest from the AI angle, as well as from customers looking for CPUs that can perform existing tasks faster. Those tasks could be anything from powering large amounts of storage to running virtual machines.
