Cisco has introduced a new open-source project designed to help make AI-generated code more secure. The initiative, called Project CodeGuard, provides a framework that allows development teams to integrate security rules directly into the workflow of AI coding tools such as GitHub Copilot, Cursor, and Windsurf.

AI coding agents are increasingly used worldwide to accelerate software development and increase productivity. At the same time, the security of the generated code is often inadequate. According to Cisco, basic protections such as input validation and secure secret management are often missing. Many AI systems also use outdated cryptography or rely on components that are no longer supported.

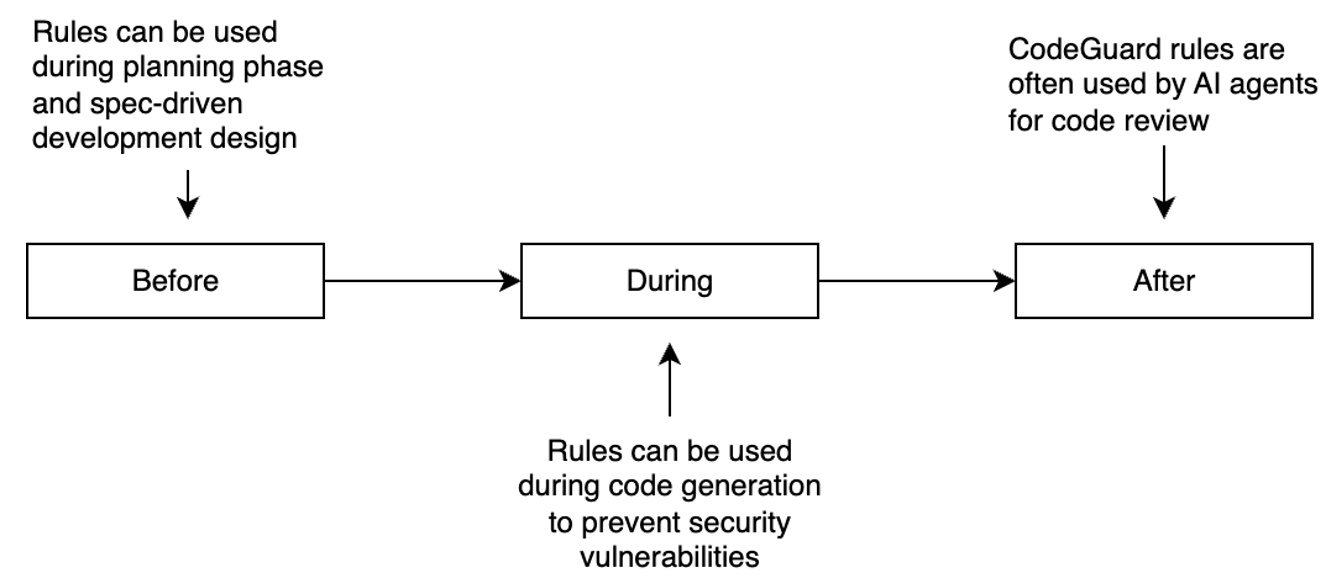

Project CodeGuard aims to change that. Cisco describes the framework as unified and model-independent, meaning it can work with different AI systems and development environments. The framework is designed to embed security across multiple phases of the software lifecycle, so that protection rules are active before, during, and after code generation.

The framework includes a set of security rules that automatically guide AI models toward safer programming patterns. These rules can be applied during the design phase of software, as well as during and after code generation.

Security throughout the entire development process

This allows development teams to build security into the entire development process, rather than adding it only after the fact during code review. Cisco emphasizes that this is essential because AI assistants today not only write code but also create designs, generate services, and suggest improvements.

One example is a rule that enforces input validation. This can prompt a model to handle input securely while writing code, flag potentially unsafe user input, and check whether validation and sanitization have been correctly implemented in the final code. Other rules help prevent AI from generating hard-coded passwords or API keys.
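
To make those two rule categories concrete, the sketch below shows the kind of pattern such rules are meant to steer a model toward. It is an illustration only, not taken from the CodeGuard rule set: the function names, the allow-list pattern, the `users` table, and the `EXAMPLE_API_KEY` variable are all hypothetical.

```python
import os
import re
import sqlite3

# Hypothetical allow-list: 3 to 32 letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,32}")


def validate_username(raw: str) -> str:
    """Return the username unchanged if it matches the allow-list, else raise."""
    if not isinstance(raw, str) or USERNAME_PATTERN.fullmatch(raw) is None:
        raise ValueError("invalid username")
    return raw


def find_user(conn: sqlite3.Connection, raw_username: str):
    """Look up a user with a parameterized query instead of string formatting."""
    username = validate_username(raw_username)
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cur.fetchone()


def load_api_key(env_var: str = "EXAMPLE_API_KEY") -> str:
    """Read a secret from the environment rather than hard-coding it in source."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set")
    return key
```

The input is checked against an allow-list before it reaches the query, the query itself is parameterized as a second line of defense, and the secret never appears in the source file.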

The first version of CodeGuard includes a core set of security rules based on industry standards such as OWASP (Open Worldwide Application Security Project) and CWE (Common Weakness Enumeration). With this, Cisco is specifically targeting common vulnerabilities, including hard-coded secrets, missing input validation, outdated cryptographic methods, and dependencies on end-of-life software.
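
As an illustration of the "outdated cryptographic methods" category, a common case is storing passwords as unsalted MD5 or SHA-1 digests. The sketch below shows one standard-library alternative, a salted PBKDF2 hash with constant-time comparison. It is an assumption about what a safer pattern looks like, not CodeGuard's own guidance; the function names and the iteration count are illustrative choices.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

# Assumed work factor for PBKDF2-HMAC-SHA256; tune for your hardware and policy.
ITERATIONS = 600_000


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Derive a salted hash; a fresh random salt is generated when none is given."""
    if salt is None:
        salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

A caller would store both the salt and the digest returned by `hash_password` and pass them back to `verify_password` at login.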

Cisco emphasizes that Project CodeGuard is intended as an additional layer of defense, not as a guarantee of completely secure code. Developers must still apply regular security practices, such as peer reviews and manual checks. The goal is to significantly reduce the chance that obvious vulnerabilities reach production unnoticed, while maintaining the speed and efficiency of AI coding.

The first release, CodeGuard v1.0.0, offers basic rules along with tools that automatically translate those rules for different AI platforms. This makes the rules easy to integrate into existing development environments. Cisco states that this is only the beginning: future versions will expand coverage to more programming languages, support additional AI coding tools, and add automatic rule validation.

The company is calling on the community to contribute to the project. Security engineers, software developers, and AI researchers can submit new rules, build translators, or provide feedback via the public GitHub repository. The ultimate goal is to make secure AI coding the standard, without compromising speed and innovation.