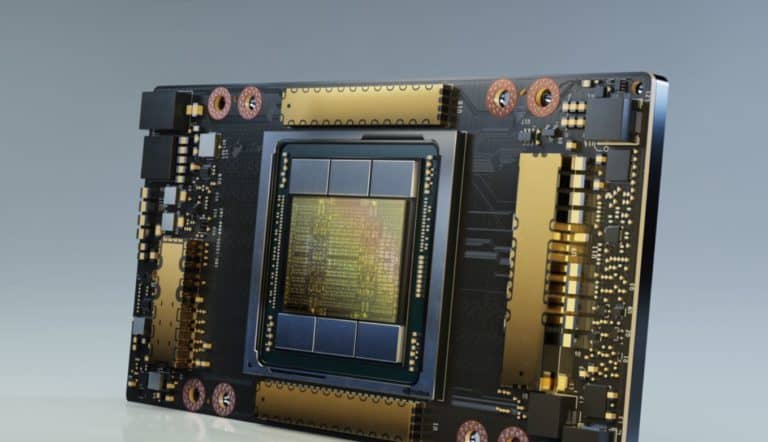

Google has announced a new family of Compute Engine virtual machines, the A2 series. The VMs are fitted with Nvidia A100 GPUs, and Google offers variants with up to 16 of these GPUs in a single node.

According to Google, this makes the A2 the largest single-node GPU instance currently offered by any public cloud provider. The company says this makes the VMs suitable for even the most demanding customer workloads, while also delivering higher efficiency and lower costs, SiliconAngle reports.

Ideal for machine learning

“A single A2 VM supports up to 16 NVIDIA A100 GPUs, making it easy for researchers, data scientists, and developers to achieve dramatically better performance for their scalable CUDA compute workloads such as machine learning training, inference and HPC,” said product managers Bharath Parthasarathy and Chris Kleban. “The A2 VM family on Google Cloud Platform is designed to meet today’s most demanding HPC applications, such as CFD simulations with Altair ultraFluidX.”

Scalable up and down

The VMs can be scaled down as well as up. In addition to VMs with 16 GPUs, Google also offers variants with 1, 2, 4 or 8 GPUs. For users who need even more GPU power, Google offers "ultra-large GPU clusters" consisting of thousands of GPUs, intended for the most demanding machine learning tasks. Within each node, the GPUs are interconnected with NVLink, a high-bandwidth interconnect that speeds up communication between the GPUs.

Up to ten times as fast as predecessors

Google claims that the Compute Engine A2 VMs are up to ten times faster than their predecessors based on Nvidia V100 GPUs. Customers who have already had early access to the VMs report substantial speedups. "We recognized that Compute Engine A2 VMs, powered by the NVIDIA A100 Tensor Core GPUs, could dramatically reduce processing times and allow us to experiment much faster," said Kyle De Freitas of AI company Dessa. "Running NVIDIA A100 GPUs on Google Cloud's AI Platform gives us the foundation we need to continue innovating and turning ideas into impactful realities for our customers."

Availability

The new A2 instances with Nvidia A100 GPUs are available immediately in three regions: us-central1, asia-southeast1 and europe-west4. Google plans to add more regions later this year.