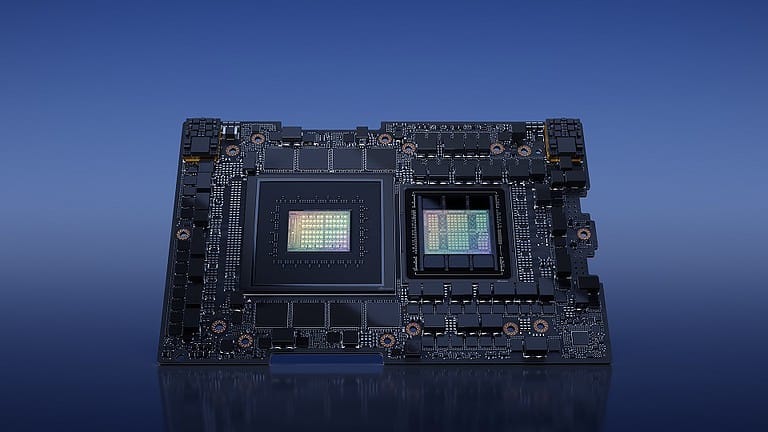

Nvidia unveiled the GH200 Grace Hopper “Superchip” at Computex 2023. It combines the company’s Grace CPU and Hopper GPU in a single module intended for data centers. The Arm-based chip is designed to run AI workloads efficiently and at massive scale. Google, Meta and Microsoft are said to already have access to the technology.

AI is taking center stage at many a tech conference, and Computex is no exception. Nvidia itself promises a big step toward efficiency and scalability. CEO Jensen Huang claimed that the company’s Arm-based chips are up to twice as efficient as their x86-64 counterparts from AMD and Intel. Arm is a striking differentiator here: all three companies now design both CPUs and GPUs (albeit for different purposes), but only Nvidia builds its CPUs on Arm. Thanks to Arm’s leaner instruction set, large companies can run their in-house software very close to bare metal. Think of the algorithms behind Facebook, Google’s search functions or Microsoft’s ads.

In that area, Nvidia promises an impressive improvement: AI models could be trained up to 30 times faster with the new chip.

NVLink as an asset

Nvidia is already the dominant supplier of data center GPUs. It has a large backlog of orders for its latest H100 GPUs, and it remains to be seen whether it can meet demand. What is clear, however, is that Nvidia has invested heavily in scalability. GPUs have been able to communicate with each other for some time, but doing so at the required speed takes an interconnect such as NVLink.

Each Superchip carries up to 480 GB of LPDDR5X memory, placed close to the silicon, and the Grace CPU adds a whopping 117 MB of L3 cache. The link between the CPU and GPU has a theoretical bandwidth of up to 900 GB/s. The GPU itself uses HBM3 memory with up to 4 TB/s of bandwidth. Each Superchip has 96 GB of this VRAM, which may sound like a lot but is far too little for the large data sets that feed LLMs. Nvidia’s latest NVLink technology allows up to 256 Superchips to be connected together, giving access to nearly 150 TB of combined CPU and GPU memory.
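As a rough check on that aggregate figure, the per-chip capacities quoted above can simply be multiplied out. This is a back-of-the-envelope sketch, not an Nvidia specification: it assumes every one of the 256 Superchips carries the maximum 480 GB of LPDDR5X plus 96 GB of HBM3, and it treats 1 TB as 1,000 GB. The function name `pooled_memory_gb` is purely illustrative.

```python
# Per-Superchip memory capacities quoted in the article (maximum configuration assumed)
LPDDR5X_GB = 480   # CPU-attached LPDDR5X
HBM3_GB = 96       # GPU-attached HBM3 ("VRAM")
SUPERCHIPS = 256   # largest NVLink domain mentioned in the article


def pooled_memory_gb(chips: int, cpu_gb: int, gpu_gb: int) -> int:
    """Total CPU plus GPU memory across all Superchips in one NVLink domain, in GB."""
    return chips * (cpu_gb + gpu_gb)


total_gb = pooled_memory_gb(SUPERCHIPS, LPDDR5X_GB, HBM3_GB)
hbm_only_gb = SUPERCHIPS * HBM3_GB  # GPU memory alone, for comparison

# Decimal units assumed: 1 TB = 1,000 GB
print(f"Combined memory: {total_gb:,} GB (~{total_gb / 1000:.1f} TB)")
print(f"HBM3 alone:      {hbm_only_gb:,} GB (~{hbm_only_gb / 1000:.1f} TB)")
```

Under these assumptions the pool comes to 147,456 GB (about 147.5 TB), which is where the "nearly 150 TB" comes from. The GPUs’ own HBM3 accounts for only about 24.6 TB of that; the rest is CPU-side LPDDR5X.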

Despite the efficiency gains, all this comes with a hefty energy bill: a GH200 draws up to 1,000 W, although it can be configured to draw “only” 450 W.

“Generative AI is rapidly transforming businesses, unlocking new opportunities and accelerating discovery in healthcare, finance, business services and many more industries,” Nvidia VP of Accelerated Computing Ian Buck told SiliconANGLE. “With Grace Hopper Superchips in full production, manufacturers worldwide will soon provide the accelerated infrastructure enterprises need to build and deploy generative AI applications that leverage their unique proprietary data.”
