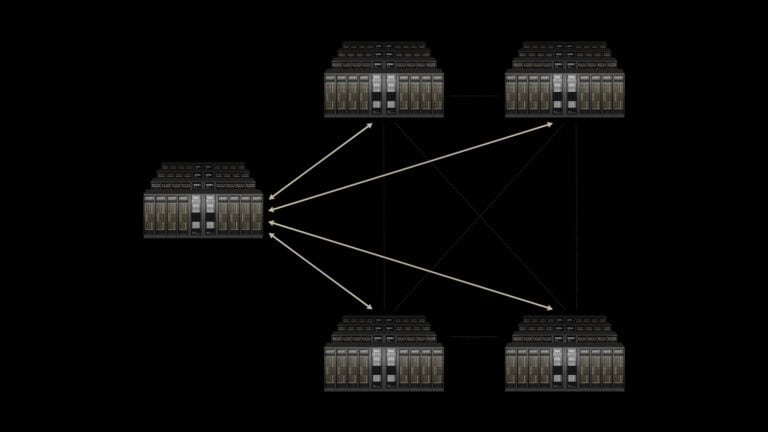

Nvidia believes that AI-driven data center growth needs another avenue. According to the company, only connecting different physical locations can deliver the desired AI capacity. In addition to scale-up and scale-out, this requires ‘scale-across’, a term the chipmaker has coined.

The means to achieve this feat is Nvidia Spectrum-XGS Ethernet. This technology, a continuation and expansion of the existing Spectrum-X Ethernet platform, allows AI chips from different locations to behave as one giant ‘superchip’.

Third pillar

The limits of growth within data centers become apparent fairly quickly. These locations have defined space constraints and, in most cases, cannot draw more than a fixed amount of power without being rearchitected. Scale-up, or expanding a single system or rack, is further limited by the capabilities of infrastructure such as water cooling. There is simply a maximum wattage that the existing installation can handle, however large it is. At the same time, the number of places to put equipment inside a data center is limited, so scale-out, or adding more racks and servers, also has a hard upper boundary.

This brings us to scale-across, where the limiting factor so far has been the network between sites. Although data centers communicate with each other at lightning speed, the requirements of an AI workload are very high: synchronizing all processors across locations takes an eternity in hardware terms. Spectrum-XGS Ethernet aims to change this. Nvidia refers to the resulting combined sites as AI “superfactories.”
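To make the synchronization problem concrete, the following is a rough sketch using the textbook latency-bandwidth cost model for a ring all-reduce, a common collective operation in distributed training. Every number in it (node count, message size, link speed, latencies, the 100 km distance) is an illustrative assumption, not a figure from Nvidia, and the model says nothing about how Spectrum-XGS works internally. It also assumes each step of the ring is gated by the slowest link.

```python
# Illustrative only: all figures below are assumptions, not Nvidia measurements.

SPEED_IN_FIBER_KM_PER_S = 200_000  # roughly two-thirds of the speed of light in vacuum


def one_way_latency_s(distance_km: float) -> float:
    """Propagation delay over optical fiber, ignoring switches and queuing."""
    return distance_km / SPEED_IN_FIBER_KM_PER_S


def ring_allreduce_time_s(num_nodes: int, message_bytes: float,
                          bandwidth_bytes_per_s: float, latency_s: float) -> float:
    """Simple cost model for a ring all-reduce.

    The ring runs 2 * (N - 1) steps. Each step pays one link latency and
    transfers a chunk of message_bytes / N. We assume every step waits for
    the slowest link, so latency_s should be the worst link latency.
    """
    steps = 2 * (num_nodes - 1)
    chunk_bytes = message_bytes / num_nodes
    return steps * (latency_s + chunk_bytes / bandwidth_bytes_per_s)


if __name__ == "__main__":
    nodes = 8                 # hypothetical number of participants
    message = 1e6             # 1 MB of data to synchronize (assumed)
    bandwidth = 50e9          # 400 Gb/s link, expressed in bytes per second (assumed)

    local = ring_allreduce_time_s(nodes, message, bandwidth, latency_s=5e-6)
    remote = ring_allreduce_time_s(nodes, message, bandwidth,
                                   latency_s=one_way_latency_s(100))

    print(f"within one site:        {local * 1e6:.0f} microseconds")
    print(f"across 100 km of fiber: {remote * 1e6:.0f} microseconds")
    print(f"slowdown factor:        {remote / local:.0f}x")
```

Under these assumptions the bandwidth is identical in both cases, yet the cross-site run is far slower because every step waits on fiber propagation delay. That is the kind of gap that distance-aware congestion control and latency management are meant to narrow.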

Automatic congestion control of network traffic, latency management, and end-to-end telemetry work together to deliver 1.9x the performance of typical networking between data centers. This figure is based on a benchmark using Nvidia’s Collective Communications Library (NCCL, pronounced “Nickel”). In other words, it assumes the (quite realistic) scenario of a fully Nvidia-equipped location trying to tap into a remote equivalent.

Unsurprisingly, AI capacity specialist and close Nvidia partner CoreWeave is an early adopter. Its co-founder and CTO, Peter Salanki, believes this step will enable “giga-scale” AI. It may well be necessary to make larger projects a reality, such as Stargate, the North American initiative of Oracle, SoftBank and OpenAI.

Spectrum-X Ethernet foundation

Nvidia’s major step forward could not have happened without the work on the existing Spectrum-X Ethernet. Although Nvidia likes to promote InfiniBand interconnects within data centers, the reality is that Ethernet is ubiquitous. Spectrum-X delivers comparable speed, scale, and latency over Ethernet, allowing Nvidia’s hardware to perform at its best while retaining Ethernet’s broader compatibility compared with InfiniBand.
