According to experts, inferencing will account for the lion’s share of AI calculations. Qualcomm is seizing the opportunity to offer an alternative to the established players in the data center. A ‘rich software stack’ should entice customers to switch.

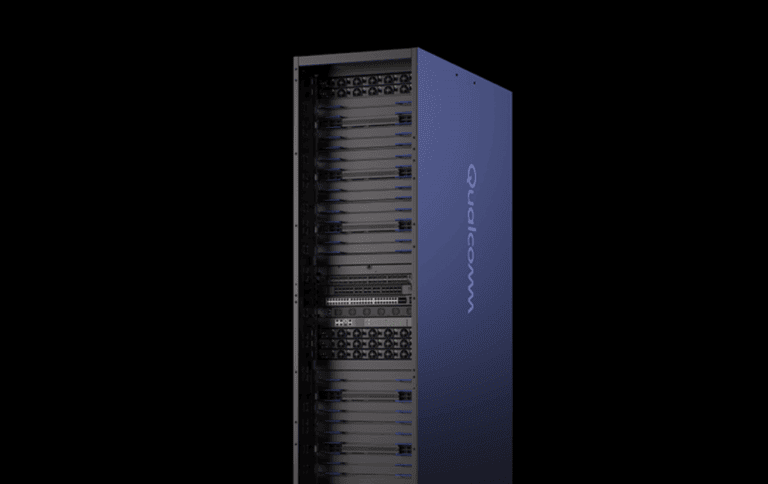

Qualcomm's new rack-scale systems promise high performance and efficiency, with memory advantages to handle the largest AI models. Two variants will be released: the AI200 in 2026 and the AI250 in 2027.

With both solutions, Qualcomm is entering the data center market for AI inferencing, or the daily running of AI models. The AI200 focuses on low total cost of ownership (TCO) and is optimized for large language models (LLMs) and large multimodal models (LMMs). Each card has 768 GB of LPDDR memory, which Qualcomm says provides more memory capacity at lower cost.
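To put the 768 GB per card in perspective, the following rough sketch estimates how much memory a model's weights alone would occupy. The model sizes and precisions are illustrative assumptions, not figures from Qualcomm, and the estimate ignores the KV cache, activations, and runtime overhead, all of which take additional memory in practice.

```python
CARD_MEMORY_GB = 768  # per-card LPDDR capacity quoted in the article


def weights_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes).

    params_billions * 1e9 parameters * bytes_per_param / 1e9 bytes per GB
    simplifies to params_billions * bytes_per_param.
    """
    return params_billions * bytes_per_param


def fits_on_card(params_billions: float, bytes_per_param: float,
                 card_gb: float = CARD_MEMORY_GB) -> bool:
    """True if the weights alone fit in one card's memory (no KV cache, no overhead)."""
    return weights_gb(params_billions, bytes_per_param) <= card_gb


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    for params, nbytes, label in [(70, 2, "70B at 16-bit"),
                                  (405, 2, "405B at 16-bit"),
                                  (405, 1, "405B at 8-bit")]:
        print(label, weights_gb(params, nbytes), "GB, fits:",
              fits_on_card(params, nbytes))
```

Under these assumptions, a 70-billion-parameter model at 16-bit precision needs about 140 GB for its weights, while a 405-billion-parameter model only fits on a single card once it is quantized to 8 bits.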

The AI250 goes one step further with a memory architecture based on near-memory computing, which places compute close to the physical memory chips. According to Qualcomm, this delivers more than 10x higher effective memory bandwidth at much lower power consumption. It also enables so-called disaggregated AI inferencing, in which the processing of the initial prompt and the generation of output tokens are split into separate stages, so that the hardware can be used more efficiently.
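Why memory bandwidth matters so much for the token-generation stage can be shown with a back-of-the-envelope estimate. Generating each token requires reading roughly all model weights from memory once, so at small batch sizes the throughput of a single stream is bounded by bandwidth divided by weight size. The sketch below assumes a hypothetical baseline bandwidth and model size; neither number comes from Qualcomm, and real systems batch requests and cache data in ways this simple bound ignores.

```python
def decode_tokens_per_second(bandwidth_gb_s: float, weights_gb: float) -> float:
    """Upper bound on single-stream decode speed when memory-bandwidth bound.

    Each generated token needs approximately one full pass over the weights,
    so tokens/s <= bandwidth / weight size.
    """
    if weights_gb <= 0:
        raise ValueError("weights_gb must be positive")
    return bandwidth_gb_s / weights_gb


# Hypothetical numbers, for illustration only.
BASELINE_BANDWIDTH_GB_S = 500.0   # assumed baseline effective bandwidth
WEIGHTS_GB = 140.0                # e.g. a 70B-parameter model at 16-bit

baseline = decode_tokens_per_second(BASELINE_BANDWIDTH_GB_S, WEIGHTS_GB)
boosted = decode_tokens_per_second(10 * BASELINE_BANDWIDTH_GB_S, WEIGHTS_GB)

if __name__ == "__main__":
    print(f"baseline bound: {baseline:.1f} tokens/s")
    print(f"10x bandwidth bound: {boosted:.1f} tokens/s")
```

In this simplified model, a 10x gain in effective bandwidth raises the decode ceiling by the same factor. Prompt processing, by contrast, handles many tokens per pass over the weights and tends to be limited by compute rather than bandwidth, which is the usual argument for running the two stages on separately provisioned hardware.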

Both Qualcomm racks follow what has become a standard approach to AI hardware: they use direct liquid cooling, with PCIe for scale-up and Ethernet for scale-out. As with many competitors, confidential computing protects sensitive AI workloads. Power consumption per rack is 160 kW, which is significantly less than some racks from players such as Nvidia:

Read more: How data centers are making the giant leap to 1 megawatt per rack

Software stack and ecosystem

Qualcomm is building an extensive software layer around the hardware. This supports common machine learning frameworks, inference engines, and generative AI frameworks. Developers can deploy models from Hugging Face with a single click via the Efficient Transformers Library and the Qualcomm AI Inference Suite.

“With Qualcomm AI200 and AI250, we are redefining what is possible for rackscale AI inferencing,” said Durga Malladi, SVP & GM of Technology Planning, Edge Solutions & Data Center at Qualcomm Technologies. The solutions are designed for frictionless adoption and rapid innovation.

Does ARM stand a chance?

Because we are not talking about ‘classic’ data center use here, ARM suddenly has a chance. Traditionally, server workloads are built for x86, which limits the CPU choice to Intel and AMD. For AI workloads, the underlying ISA (Instruction Set Architecture) is less important; the focus is on the GPUs and the efficiency of the network. Both components differ little or not at all between x86 and ARM.

However, that does not answer the question of whether Qualcomm specifically has a good chance of gaining market share. After all, Nvidia’s “AI cabinets” are also filled with combined CPUs and GPUs based on ARM. For the time being, these are the most sought-after machines for running AI workloads, whether for training or inferencing. Inferencing depends more on efficiency to be attractive to end users, but the demand for AI computing is so great that all hardware will find a use. That reality, more than Qualcomm’s competitive position, gives these new devices a chance in the market.