The Nvidia A100 Tensor Core GPU is now available in alpha as part of the new Accelerator-Optimized VM (A2) instance series on Google Cloud.

Nvidia claims that the new chip, built on its Ampere architecture, is the most powerful GPU it has ever produced. The A100 is designed primarily for AI training and inference workloads. According to Nvidia, it delivers up to twenty times the performance of its predecessor, the Volta-based V100. With 54 billion transistors, the A100 is also the largest chip Nvidia has built.

The chip uses Nvidia’s third-generation Tensor Cores and supports Multi-Instance GPU (MIG) technology. MIG allows a single A100 to be partitioned into as many as seven smaller, independent GPU instances. Each instance has its own dedicated resources, so several workloads can run on one chip at the same time. In addition, Nvidia’s NVLink interconnect technology allows multiple A100 chips to be linked together to train large AI models.
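To make the partitioning idea more concrete, here is a minimal sketch of a capacity check for MIG on a 40 GB A100. It assumes a subset of Nvidia's MIG profiles for that card (for example `1g.5gb` = one compute slice with 5 GB of memory, out of 7 compute slices and 40 GB in total). The function name `fits_on_one_gpu` is hypothetical. The sketch deliberately ignores MIG's real placement rules, so a request that passes here could still be rejected by the driver.

```python
from __future__ import annotations

# Assumed subset of MIG profiles for a 40 GB A100:
# profile name -> (compute slices, memory in GB).
A100_40GB_PROFILES = {
    "1g.5gb": (1, 5),
    "2g.10gb": (2, 10),
    "3g.20gb": (3, 20),
    "4g.20gb": (4, 20),
    "7g.40gb": (7, 40),
}

TOTAL_COMPUTE_SLICES = 7  # one A100 splits into at most seven instances
TOTAL_MEMORY_GB = 40      # assumed: 40 GB A100 variant


def fits_on_one_gpu(requested: list[str]) -> bool:
    """Hypothetical helper: return True if the requested MIG instances fit
    within one GPU's compute slices and memory. This is a simple capacity
    check only; real MIG placement constraints are not modelled."""
    compute = sum(A100_40GB_PROFILES[p][0] for p in requested)
    memory = sum(A100_40GB_PROFILES[p][1] for p in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_GB


# Seven of the smallest instances use all 7 compute slices (35 GB of memory).
print(fits_on_one_gpu(["1g.5gb"] * 7))  # True
# An eighth instance exceeds the seven-instance limit.
print(fits_on_one_gpu(["1g.5gb"] * 8))  # False
```

The check reflects the article's "up to seven instances" limit through the compute-slice budget. Memory is tracked separately because the larger profiles consume it unevenly.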

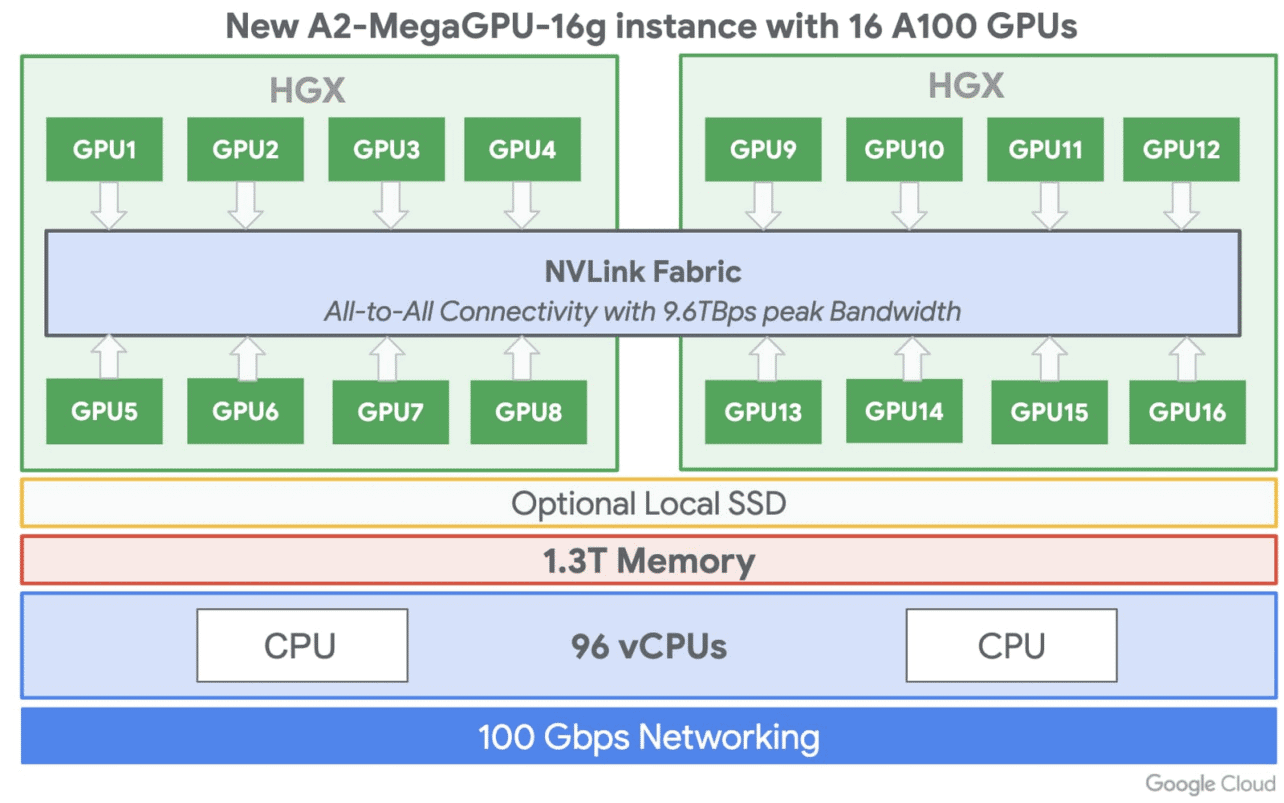

Google Cloud

Google uses NVLink to connect multiple chips. The new instance series includes an a2-megagpu-16g machine type that gives customers 16 A100 GPUs in a single VM. That configuration has a combined 640 gigabytes of GPU memory, 1.3 terabytes of system memory, and an aggregate bandwidth of 9.6 terabytes per second.
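The headline figures for the 16-GPU machine type can be reproduced with simple per-GPU arithmetic. This sketch assumes the 40 GB A100 variant and 600 GB/s of third-generation NVLink bandwidth per GPU. Neither number appears in the announcement above, so both are labelled as assumptions in the code.

```python
GPUS_PER_VM = 16           # a2-megagpu-16g, from the article
GPU_MEMORY_GB = 40         # assumed: 40 GB A100 variant
NVLINK_GBPS_PER_GPU = 600  # assumed: third-generation NVLink, GB/s per GPU

# Total GPU memory is the per-GPU memory times the number of GPUs.
total_gpu_memory_gb = GPUS_PER_VM * GPU_MEMORY_GB

# Aggregate bandwidth sums per-GPU NVLink bandwidth, converted from GB/s to TB/s.
aggregate_bandwidth_tbps = GPUS_PER_VM * NVLINK_GBPS_PER_GPU / 1000

print(total_gpu_memory_gb)       # 640
print(aggregate_bandwidth_tbps)  # 9.6
```

Under these assumptions, 16 × 40 GB gives the quoted 640 GB of GPU memory, and 16 × 600 GB/s gives the quoted 9.6 TB/s.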

The A100 is also available in smaller configurations for customers with lighter workloads. According to Nvidia, the A100 will soon also be available on Kubernetes and Google Cloud’s AI Platform.