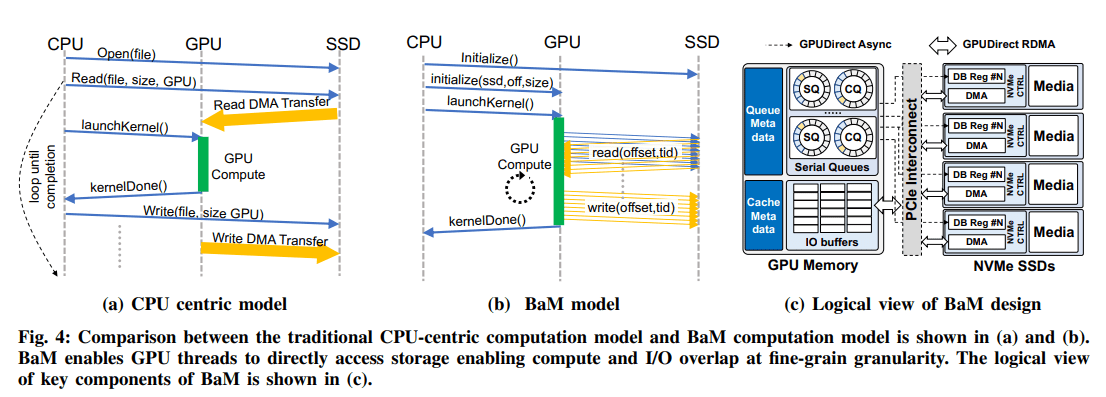

Nvidia and IBM are jointly developing technology that gives GPU-intensive applications fast access to large amounts of stored data. The approach is called Big Accelerator Memory (BaM).

The companies are targeting applications such as machine learning training, analytics and high-performance computing. With BaM, the GPU rather than the CPU orchestrates the entire data access process.

The researchers argue that the current way of accessing storage is out of date. While it is adequate for ‘classical’ GPU applications such as training dense neural networks, it causes too much overhead and a sharp increase in I/O traffic. According to the researchers from Nvidia and IBM, these drawbacks make the existing approach unsuitable for newer kinds of applications.

Introducing BaM

To solve the problem, the researchers propose BaM. The technology is meant to let precisely these newer applications run more efficiently in data centers. Working together with an NVMe SSD, it counteracts the sharp increase in I/O traffic, so that data can be loaded from storage into GPU memory faster.

BaM combines the GPU’s onboard memory, which serves as a software-managed cache, with a software library for GPU threads. The GPU threads receive data from storage and move it with the help of a custom Linux kernel driver.
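The idea of a software-managed cache in front of slower storage can be illustrated with a small toy model. The sketch below is not BaM's code or API: the class names, the block size and the least-recently-used eviction policy are all assumptions chosen for illustration, and everything runs on the CPU in plain Python. It only shows the general pattern of serving repeated requests from fast memory and going to storage on a miss.

```python
from collections import OrderedDict

BLOCK_SIZE = 4  # elements per storage block (hypothetical, tiny for illustration)


class BackingStore:
    """Stands in for the NVMe SSD: a flat array that is read one block at a time."""

    def __init__(self, data):
        self.data = list(data)
        self.reads = 0  # number of block reads issued to "storage"

    def read_block(self, block_id):
        self.reads += 1
        start = block_id * BLOCK_SIZE
        return self.data[start:start + BLOCK_SIZE]


class SoftwareCache:
    """Stands in for the cache held in GPU memory (LRU eviction is an assumption)."""

    def __init__(self, store, capacity_blocks):
        self.store = store
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()  # block_id -> list of elements
        self.hits = 0
        self.misses = 0

    def get(self, index):
        block_id, offset = divmod(index, BLOCK_SIZE)
        if block_id in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block_id)  # mark as most recently used
        else:
            self.misses += 1
            if len(self.blocks) >= self.capacity:
                self.blocks.popitem(last=False)  # evict the least recently used block
            self.blocks[block_id] = self.store.read_block(block_id)
        return self.blocks[block_id][offset]


store = BackingStore(range(100, 132))  # 32 elements, i.e. 8 blocks
cache = SoftwareCache(store, capacity_blocks=2)
values = [cache.get(i) for i in (0, 1, 2, 5, 0, 9, 4)]
print(values, cache.hits, cache.misses, store.reads)
```

In this toy run, three of the seven requests are served from the cache and only four block reads reach the simulated storage. A real implementation would run on GPU threads and handle concurrency, which this sketch leaves out entirely.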

Testing

The researchers also tested the new technology. Their system used an Nvidia A100 40 GB PCIe GPU, two AMD EPYC 7702 processors with 64 cores each and 1 TB of DDR4-3200 memory, and ran Ubuntu 20.04 LTS.

Nvidia and IBM were very pleased with the results. They found that, with BaM, even consumer-grade SSDs raised application performance to a level that can compete with a more expensive DRAM-only solution.

Tip: Nvidia wants to speed up data transfers by connecting GPUs to SSDs