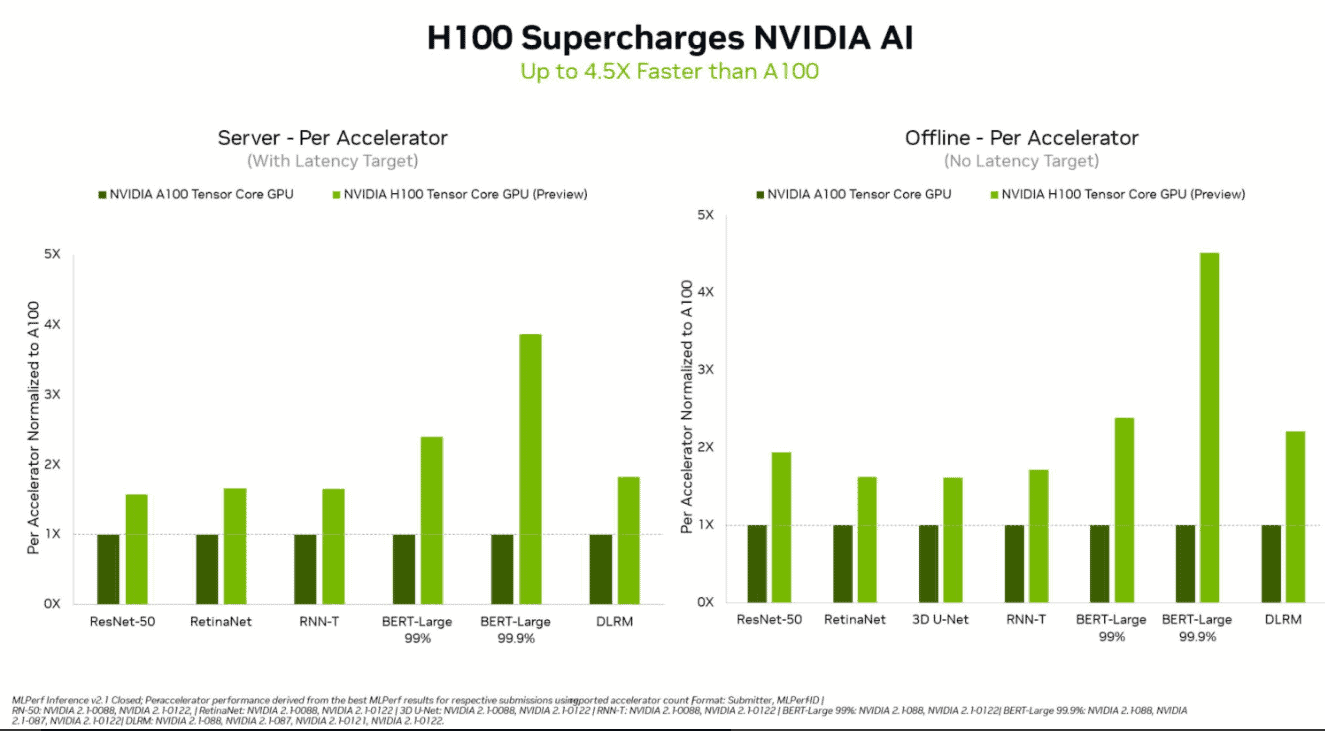

Nvidia claims it set a performance record with the upcoming Hopper H100 Tensor Core GPU. According to Nvidia, the new GPU is up to 4.5 times faster than the current A100 processor for AI and ML applications.

The new performance record was reportedly set in MLPerf™ Inference 2.1, an industry-standard benchmark. It measures so-called ‘inference’ workloads, which show how effectively a processor can apply a pre-trained machine learning model to new data. The results indicate how well a processor handles ML workloads.

Test results

During the test, the Nvidia H100 GPU performed well across all six of the neural networks reviewed. The GPU scored especially high on throughput and speed in the separate server and offline scenarios. According to Nvidia, performance was up to 4.5 times better than that of its Ampere A100 processors, which are currently considered the best processors for AI and ML applications.
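To make the difference between the two scenarios concrete, here is a minimal Python sketch. It is an illustration only: it does not use MLPerf's own test harness, `fake_model` is a hypothetical stand-in for a pre-trained model, and the real server scenario issues queries at random intervals under a latency limit, which this simplification leaves out. The offline function processes everything in large batches and reports samples per second, while the server function handles one query at a time and records how long each takes.

```python
import statistics
import time


def fake_model(batch):
    # Hypothetical stand-in for a pre-trained model: doubles each input.
    return [2 * x for x in batch]


def offline_throughput(model, samples, batch_size):
    """Process all samples in large batches; return (samples per second, outputs)."""
    start = time.perf_counter()
    outputs = []
    for i in range(0, len(samples), batch_size):
        outputs.extend(model(samples[i:i + batch_size]))
    elapsed = time.perf_counter() - start
    return len(outputs) / elapsed, outputs


def server_latencies(model, samples):
    """Process queries one at a time; return the latency of each query in seconds."""
    latencies = []
    for x in samples:
        start = time.perf_counter()
        model([x])
        latencies.append(time.perf_counter() - start)
    return latencies


samples = list(range(10_000))

# Offline-style run: throughput is what matters.
qps, outputs = offline_throughput(fake_model, samples, batch_size=256)

# Server-style run: tail latency per query is what matters.
lat = server_latencies(fake_model, samples[:1000])
p99 = statistics.quantiles(lat, n=100)[98]  # 99th percentile cut point

print(f"offline throughput: {qps:,.0f} samples/s")
print(f"server p99 latency: {p99 * 1e6:.1f} microseconds")
```

The numbers this prints depend entirely on the machine running it and say nothing about any GPU. The point is only that the offline figure rewards batching, whereas the server figure is dominated by the slowest individual responses.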

Thanks to the GPU’s Transformer Engine, the Hopper H100 scored well on Google’s BERT model. This is one of the largest and most data-intensive NLP models in the MLPerf AI benchmark. The H100’s performance indicates that the processor is suitable for other large language models like OpenAI’s GPT-3.

Ecosystem

Hopper H100 GPUs should soon replace the A100 processors that serve as Nvidia’s primary datacenter processors at this time. Meanwhile, H100 GPUs are being tested by partners in Nvidia’s ecosystem, including Microsoft Azure, Dell Technologies, HPE, Lenovo, Fujitsu, GIGABYTE and ASUS.
