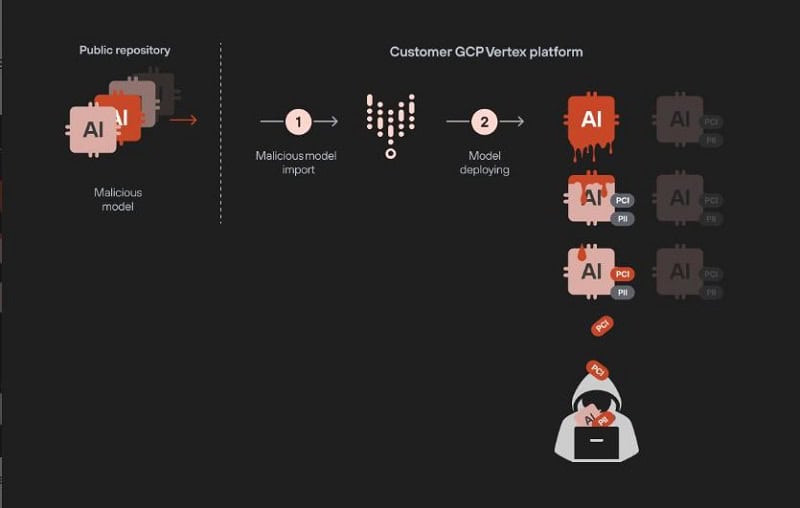

Google’s Vertex AI platform contained two serious vulnerabilities. They allowed attackers to access customers’ LLMs and replace them with malicious variants.

The vulnerabilities were discovered by Palo Alto Networks’ Unit 42 research team. The first involved privilege escalation: by abusing so-called “custom job permissions,” attackers could escalate their privileges with little effort. This gave them access to all data services within a customer’s development project.

The second vulnerability identified by Unit 42 allowed attackers to upload and deploy their own malicious LLM within Google Vertex AI. From there, they could steal data from all other fine-tuned LLMs in the environment, including customers’ own models. According to the researchers, this second vulnerability therefore posed a major risk of proprietary and sensitive data theft.

Stricter control rules needed

Google has since fixed the vulnerabilities. In its conclusion, Unit 42 states that these flaws show how much damage can follow when even a single malicious LLM is deployed in this type of environment.

The researchers therefore argue for stricter controls on LLM deployments. Fundamental to this is separating development and test environments from live production environments. Doing so reduces the risk that attackers can roll out insecure LLMs before those models have been thoroughly reviewed.

In addition, LLMs from internal teams or third-party vendors should always be properly validated before they are deployed.
