Endor Labs has released AI Model Discovery, a tool that lets companies find the open-source AI models used in their applications and apply their security policies to them. The goal is to help organizations better manage the risks these models pose.

Open-source AI models are increasingly used in business applications. They make AI easier to deploy, but they also introduce risks.

According to open-source security specialist Endor Labs, current Software Composition Analysis (SCA) tools fall short in identifying and mitigating these risks. They were designed primarily to track open-source packages, not to identify the risks that AI models introduce into applications.

AI Model Discovery Tool

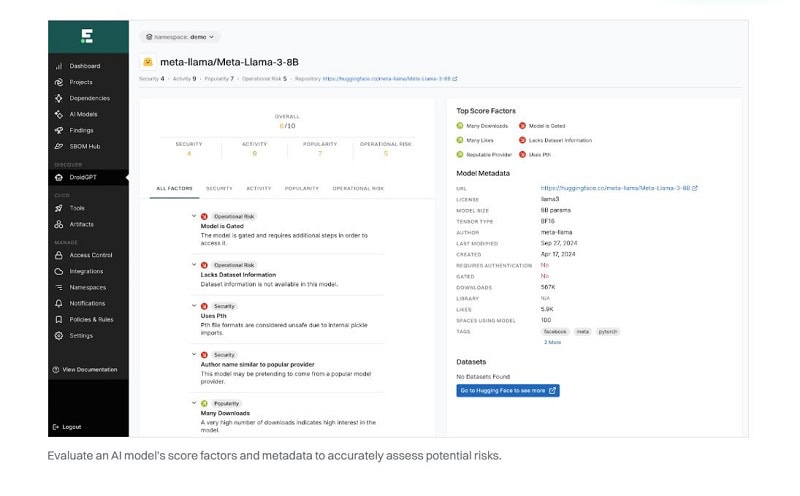

Endor Labs aims to close this gap with the AI Model Discovery tool. It lets companies detect the open-source AI models in their applications, in particular models distributed via Hugging Face, and apply security policies to them.

The tool focuses on three core functions:

- Detection: Scanning for and inventorying locally used AI models, and mapping which teams and applications use them.

- Analysis: Assessing AI models for known risk factors such as security, quality, activity and popularity. The tool also identifies models with unclear data sources, licenses or practices.

- Enforcement: Enforcing security policies across the enterprise environment, alerting developers to policy violations and blocking high-risk models.
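
The enforcement step can be pictured as a policy evaluated against the facts gathered during analysis. The Python sketch below is purely illustrative: the `ModelFacts` fields, the license allow list and the thresholds are hypothetical values, not Endor Labs' actual policy model. In this sketch, models with an unclear or disallowed license are blocked, while weak popularity or activity signals only trigger a warning to the developer.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Decision(Enum):
    ALLOW = "allow"
    WARN = "warn"    # notify the developer, but let the build continue
    BLOCK = "block"  # stop the high-risk model from being used


@dataclass
class ModelFacts:
    """Hypothetical facts about one model, as gathered in the analysis step."""
    model_id: str
    license: Optional[str]  # None means the license could not be determined
    downloads: int          # rough popularity signal
    days_since_update: int  # rough activity signal


# Hypothetical policy settings, chosen only for illustration.
ALLOWED_LICENSES = {"apache-2.0", "mit", "bsd-3-clause"}
MIN_DOWNLOADS = 1_000
MAX_DAYS_WITHOUT_UPDATE = 365


def evaluate(facts: ModelFacts) -> Tuple[Decision, List[str]]:
    """Return a policy decision plus the reasons behind it."""
    block_reasons: List[str] = []
    warn_reasons: List[str] = []

    # License problems are treated as hard violations in this sketch.
    if facts.license is None:
        block_reasons.append("license unclear")
    elif facts.license.lower() not in ALLOWED_LICENSES:
        block_reasons.append(f"license {facts.license!r} not allowed")

    # Popularity and activity only produce warnings.
    if facts.downloads < MIN_DOWNLOADS:
        warn_reasons.append("low popularity")
    if facts.days_since_update > MAX_DAYS_WITHOUT_UPDATE:
        warn_reasons.append("no recent activity")

    if block_reasons:
        return Decision.BLOCK, block_reasons + warn_reasons
    if warn_reasons:
        return Decision.WARN, warn_reasons
    return Decision.ALLOW, []


if __name__ == "__main__":
    # Made-up sample models; IDs and numbers are not real data.
    samples = [
        ModelFacts("example-org/popular-model", "Apache-2.0", 50_000, 30),
        ModelFacts("example-org/tiny-experiment", None, 12, 900),
    ]
    for facts in samples:
        decision, reasons = evaluate(facts)
        print(facts.model_id, decision.value, reasons)
```

Run as a script, the first sample is allowed, while the second is blocked for its unclear license, with the popularity and activity warnings listed alongside. Separating hard violations from warnings mirrors the distinction between blocking high-risk models and merely alerting developers.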

Under the Hood

Under the hood, the AI Model Discovery tool identifies which open-source Hugging Face models are in use by scanning the underlying source code for specific patterns. This set of patterns is continually being extended. For now, however, scanning is limited to Python code, because most open-source AI models are built and used with Python.

Once matching patterns are found, the models they reference are examined further.

Where a result is doubtful or ambiguous, the tool re-examines the source code using its built-in AI capabilities. In testing, this final check reached the correct conclusion in 80 percent of cases.
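
To make the pattern-matching step more concrete, here is a minimal Python sketch of how such a scan could work. It is not Endor Labs' implementation: the patterns (calls to `from_pretrained` and keyword arguments such as `model=` or `repo_id=`) are assumptions, chosen because they are common ways Python code loads models from Hugging Face. Model IDs written as string literals are collected directly; calls whose model ID comes from a variable are flagged as ambiguous, which is roughly where a further check like the AI-based one described above would come in.

```python
import ast
from typing import List, Optional, Set, Tuple

# Hypothetical detection patterns for this sketch (not Endor Labs' actual rules):
# a method name and keyword arguments that commonly carry a Hugging Face model ID.
LOADER_METHODS = {"from_pretrained"}
MODEL_KEYWORDS = {"model", "repo_id", "pretrained_model_name_or_path"}


def _string_literal(node: ast.AST) -> Optional[str]:
    """Return the value if the node is a string literal, otherwise None."""
    if isinstance(node, ast.Constant) and isinstance(node.value, str):
        return node.value
    return None


def scan_python_source(source: str) -> Tuple[List[str], List[int]]:
    """Return (model IDs found as string literals, line numbers of ambiguous calls).

    The code is only parsed, never executed, so the scanned project's
    dependencies do not need to be installed.
    """
    models: Set[str] = set()
    ambiguous: List[int] = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Pattern 1: SomeClass.from_pretrained("org/model", ...)
        if isinstance(node.func, ast.Attribute) and node.func.attr in LOADER_METHODS and node.args:
            model_id = _string_literal(node.args[0])
            if model_id is not None:
                models.add(model_id)
            else:
                # The ID comes from a variable or expression: needs a closer look.
                ambiguous.append(node.lineno)
        # Pattern 2: keyword arguments such as pipeline(..., model="org/model")
        for kw in node.keywords:
            if kw.arg in MODEL_KEYWORDS:
                model_id = _string_literal(kw.value)
                if model_id is not None:
                    models.add(model_id)
    return sorted(models), sorted(ambiguous)


SAMPLE = '''
from transformers import AutoModel, pipeline

name = "my-org/private-model"
model = AutoModel.from_pretrained("bert-base-uncased")
other = AutoModel.from_pretrained(name)
clf = pipeline("sentiment-analysis", model="distilbert-base-uncased-finetuned-sst-2-english")
'''

if __name__ == "__main__":
    found, unclear = scan_python_source(SAMPLE)
    print(found)    # ['bert-base-uncased', 'distilbert-base-uncased-finetuned-sst-2-english']
    print(unclear)  # [6]
```

Parsing with Python's standard `ast` module rather than plain text search avoids matches inside comments or strings, but a broad keyword such as `model=` can still produce false positives in unrelated code, which is one reason a second, closer examination of uncertain hits is useful.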


Future developments

Existing customers of Endor Labs’ SCA tools can use the tool right away to test open-source AI models from Hugging Face. In the near future, it will also support other model repositories and sources, as well as programming languages other than Python.

In a recent episode of our podcast series Techzine Talks on Tour, we spoke with Rick Smith of SentinelOne about how 2025 will be not only about AI, but above all about securing AI properly.