Everything there is to find on tag: mixture of experts.

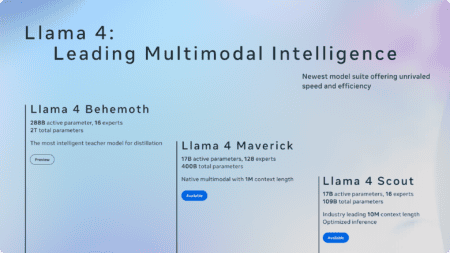

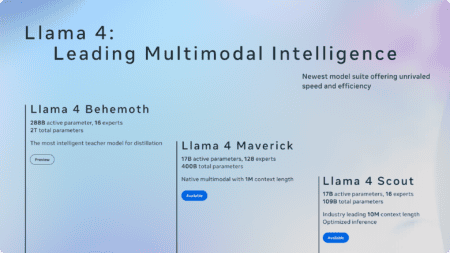

Meta launches Llama 4: new multimodal AI models

Meta has announced the first models in the Llama 4 series. The new multimodal AI models, Llama 4 Scout and Ll...

Chinese LLM developer DeepSeek has unveiled its R1 series of large language models (LLMs), optimized specific...

Microsoft announces a new family of LMs. The Phi-3.5 line includes three models, including, for the first tim...

Elon Musk's AI developer xAI has finally made the base model, underlying parameters and architecture of the ...

Microsoft announces Tutel. The open-source library is available immediately for developing AI models and appl...