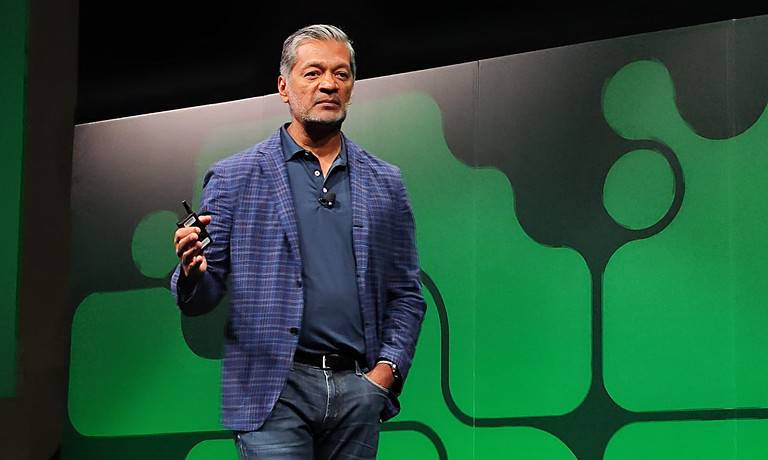

MongoDB.local NYC kicked off this week in New York as part of the company’s programme of more localised developer conferences. The event centred on one main keynote session, opened by MongoDB CEO and president Dev Ittycheria, delivered to an audience of programmers plus some Ops (operations) staff.

Noting that the company was built around the document model of database development, Ittycheria explained how his firm has worked over the last few years to ‘chip away’ at the concerns customers may once have had about trusting their data crown jewels to its technology proposition. He pointed to how MongoDB Atlas has evolved into a globally distributed data platform, one that some 40,000 developers are said to sign up for every week.

“Atlas is the most widely available data service on the planet at the moment across all three major hyperscalers and across 110 world datacentres,” said Ittycheria. “Thinking about how we have grown with the cloud, the SaaS model has been incredibly good for us, but it has of course resulted in a number of tradeoffs with the proliferation of point tools emerging to handle the specific functions that data-centric developers needed every day. We saw this as unsustainable, so we worked to radically simplify the use of data developer tools with natively developed functions that work in a seamlessly unified way.”

It offers, he claims, all the benefits of cloud without the cost overhead of having to bolt on extraneous functions (for streaming, graph queries, vector-based search and so on), which are costly, complex and challenging, and which also involve working across rigid, inflexible database schemas.

An elegant data platform

As part of this year’s event, the audience were led through major MongoDB Atlas service updates which included key data streaming capabilities in the beta release of Atlas Stream Processing.

The company also added Atlas Vector Search, which brings native vector search support into the same platform, so that Atlas unifies an even broader number and type of functions. Looking at the total platform today, MongoDB is what Ittycheria repeatedly calls an ‘elegant data platform’, which may be a slightly colourful term, or may simply describe it quite succinctly.

One superbly illustrative example of vector search is a use case in a car repair shop, a scenario explained by Sahir Azam, chief product officer at MongoDB, as part of his keynote presentation.

Most of us walk into these establishments and report that something is wrong with the car by saying there’s a ‘kind of sound’ coming from it, which is a pretty indistinct way of recording data about car faults. By recording car sounds and transforming problematic knocks and dings into vectors, the shop can use these vectorised information streams to find other, similar car issues, massively speeding up problem detection and, therefore, maintenance and repair.
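The matching step described above is, at heart, a nearest-neighbour search over vectors. The following is a minimal sketch, assuming each sound has already been turned into a fixed-length embedding by some audio model (not shown, and not something MongoDB described in detail). It ranks a hypothetical library of previously diagnosed faults by cosine similarity to a new recording; the fault names and vector values are invented purely for illustration.

```python
import math

# Hypothetical library of previously diagnosed faults, each stored with an
# embedding of its recorded sound. Real audio embeddings would have hundreds
# of dimensions; three are used here to keep the example readable.
KNOWN_FAULTS = {
    "worn wheel bearing": [0.9, 0.1, 0.2],
    "loose heat shield": [0.1, 0.8, 0.3],
    "engine knock": [0.2, 0.3, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_faults(query: list[float], k: int = 2) -> list[tuple[str, float]]:
    """Rank known faults by similarity to the query vector and return the top k."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in KNOWN_FAULTS.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# A new customer's "kind of sound", already embedded (hypothetical values).
new_recording = [0.25, 0.2, 0.85]
for name, score in closest_faults(new_recording):
    print(f"{name}: {score:.3f}")
```

In a production system, a vector index inside the database would do this ranking (typically with an approximate nearest-neighbour algorithm) rather than a Python loop over every stored fault.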

Google Cloud partnership

MongoDB also used this event to announce a new initiative in partnership with Google Cloud to help developers accelerate the use of generative AI and build new classes of applications. The company says that MongoDB Atlas is the multi-cloud developer data platform used by tens of thousands of customers and millions of developers globally.

With an integrated operational data store at its core, MongoDB Atlas is said to be well positioned for building applications powered by generative AI with less complexity. Developers can now take advantage of MongoDB Atlas and partner integrations with Google Cloud’s Vertex AI Large Language Models (LLMs), plus new quick-start architecture reviews with MongoDB and Google Cloud professional services, to jump-start software development.

“With the shift in technology powered by generative AI taking place today, the future of software and data is now, and we’re making it more evenly distributed for developers with MongoDB Atlas,” said Ittycheria. “This shift begins with developers… and we want to democratise access to game-changing technology so all developers can build the next big thing. With MongoDB Atlas and our strategic partnership with Google Cloud, it’s now easier for organizations of all shapes and sizes to incorporate AI into their applications and embrace the future.”

A new class of applications

Recent advancements in generative AI technology like LLMs are widely agreed to present new opportunities to reimagine how end users interact with applications. Ittycheria and team suggest that developers want to take advantage of generative AI to build new classes of applications, but many current solutions require piecing together several different technologies and components or bolting on solutions to existing technology stacks, making software development cumbersome, complex, and expensive.

The proposition here is that MongoDB and Google Cloud are helping address these challenges by providing a growing set of solutions and partner integrations to meet developers where they are and enable them to quickly get started building applications that take advantage of new AI technologies.

“Generative AI represents a significant opportunity for developers to create new applications and experiences and to add real business value for customers,” said Kevin Ichhpurani, corporate vice president, global ecosystem and channels at Google Cloud. “This new initiative from Google Cloud and MongoDB will bring more capabilities, support, and resources to developers building the next generation of generative AI applications.”

MongoDB and Google Cloud explain that they have partnered since 2018, and the firms insist they have helped thousands of joint customers adopt cloud-native data strategies. Earlier this year, the partnership was expanded to include deeper product integrations.

Google Cloud Vertex AI

Now, developers can use MongoDB Atlas Vector Search with Google’s Vertex AI within Google Cloud to build applications with AI-powered capabilities for personalised end-user experiences. Google reminds us that Vertex AI provides the text embedding API needed to generate embeddings from customer data stored in MongoDB Atlas; combined with the PaLM text models, these embeddings can be used to create advanced functionality such as semantic search, classification, outlier detection, AI-powered chatbots and text summarisation.
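As a rough sketch of how these pieces might fit together: an embedding for the user’s query text is generated (for instance through Vertex AI’s text embedding API, which is not called here), and that vector is handed to an Atlas aggregation pipeline. The example assumes the `$vectorSearch` stage syntax from MongoDB’s current Atlas documentation, which may differ from the operators available at the time of this announcement. The index name `vector_index`, the fields `embedding` and `text`, and the sample vector are all hypothetical. The code only builds the pipeline as plain Python data, so it runs without a database connection.

```python
def build_semantic_search_pipeline(
    query_vector: list[float],
    index_name: str = "vector_index",  # hypothetical Atlas Vector Search index name
    path: str = "embedding",  # hypothetical field holding each document's embedding
    limit: int = 5,
    num_candidates: int = 100,
) -> list[dict]:
    """Return an aggregation pipeline that finds the documents nearest to query_vector."""
    # Approximate search considers num_candidates neighbours, so it should not be
    # smaller than the number of results requested.
    if num_candidates < limit:
        raise ValueError("num_candidates should be at least as large as limit")
    return [
        {
            "$vectorSearch": {
                "index": index_name,
                "path": path,
                "queryVector": query_vector,
                "numCandidates": num_candidates,
                "limit": limit,
            }
        },
        # Keep only the (hypothetical) text field and the similarity score
        # Atlas attaches to each matching document.
        {"$project": {"_id": 0, "text": 1, "score": {"$meta": "vectorSearchScore"}}},
    ]


# In practice the vector would come from an embedding model, such as one exposed
# through Vertex AI; these three numbers are placeholders.
pipeline = build_semantic_search_pipeline([0.12, -0.03, 0.88], limit=3)
print(pipeline[0]["$vectorSearch"]["limit"])
```

With PyMongo, the resulting list could then be passed to `collection.aggregate(pipeline)` against a collection that has a matching vector search index defined.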

According to a joint technical statement from both firms, the MongoDB and Google Cloud professional services teams can help rapidly prototype applications by providing expertise on data schema and indexing design, query structuring and fine-tuning AI models to build a strong foundation for applications.

“The Vertex AI platform caters to the full range of AI use cases from advanced AI and data science practitioners with end-to-end AI/ML pipelines to business users who can create out-of-the-box experiences leveraging the foundational models to generate content for language, image, speech and code. Developers can also tune models to further improve the performance of the model for specific tasks,” note the companies.

Another day, another hyperscaler

It’s always interesting to hear from a company (like MongoDB, or any other enterprise software vendor) when it reaches the point of a major hyperscaler announcement. If you’ve never seen all three major Cloud Services Providers (CSPs) named side by side as new news (i.e. when one of the three enters a more formalised partnership), that may just be because they don’t all want to sit at the same table when that kind of publicity vehicle is engaged.

All of which is, in a way, kind of strange, as they all do partner and co-innovate with each other, but there you go: sometimes there’s no love lost in hyperscaler abstraction virtualisation instance provision, as the old saying goes.

MongoDB continues on its mission to be humongous and be the best (if not the most widely used) database on the planet – and you can document that now (pun intended) until we join the conference programme again next year.