During re:Invent 2025, AWS unleashed a huge wave of AI updates on the audience and the market. The new proprietary Nova 2 frontier models offered via Amazon Bedrock are particularly eye-catching. Trainium3 UltraServers should once again make training and inferencing significantly faster. Several valuable updates to AgentCore also stand out. And with AI Factories, AWS will provide hardware, services, and management in customers' own data centers.

AWS CEO Matt Garman's opening keynote this year contained no big surprises on the scale of last year's launch of the first generation of Nova models. That certainly does not mean AWS has run out of AI innovation. Quite the contrary. This year AWS is significantly expanding its offering and going deeper to make it more mature. In other words, AWS's AI ambitions are gaining substance. From a practical point of view, and especially from the end user's perspective, this is extremely valuable.

Nova 2 Lite and Nova 2 Pro

Whereas last year's announcement of the Nova models came towards the end of the keynote, this year Garman more or less kicked off the keynote with it. Clearly, AWS is serious. Four new Nova 2 models are now available in Amazon Bedrock.

According to AWS, Amazon Nova 2 Lite is a fast and cost-effective reasoning model for everyday workloads. The model offers an accessible entry point for developers who want to leverage advanced AI capabilities without requiring significant computing power.

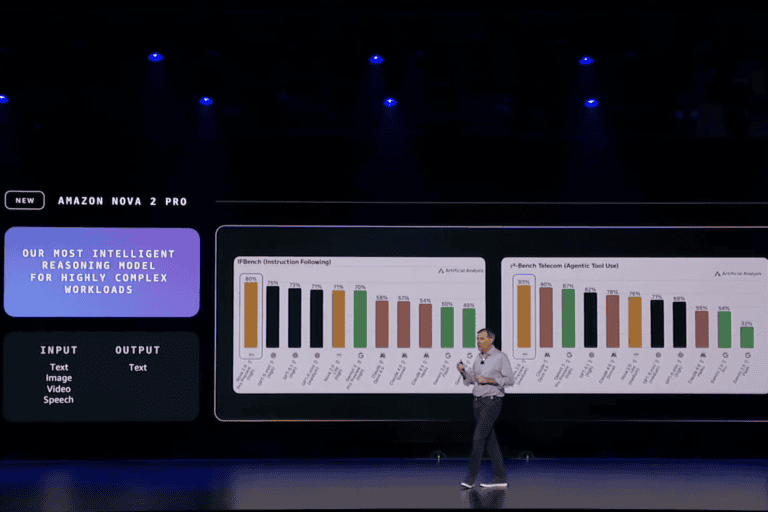

Amazon Nova 2 Pro is the most intelligent model in the lineup and was specifically developed for highly complex, multi-step tasks. The model can handle multi-document analysis, video reasoning, and software-related tasks.

Both models feature extended thinking with step-by-step reasoning and task decomposition. Developers can choose between three levels of so-called thinking intensity (low, medium, and high). This lets them control the balance between speed, intelligence, and cost.

What struck us was how much emphasis AWS places on comparing the new models' performance with the competition: models that most people know far better than Nova, such as those behind ChatGPT, Gemini, and Claude. We already noticed this last year when Nova was launched. It shows that AWS is the underdog in this area and still has to prove itself in the market, whereas in many other areas of public cloud it is exactly the other way around. In our opinion, that dynamic alone makes this worth following. AWS is also very competitive on price, as the pricing overview on the AWS website shows.

Nova 2 Sonic and Nova 2 Omni

In addition to the 'standard models' Nova 2 Lite and Nova 2 Pro, AWS has two other interesting models. Nova 2 Sonic is an AI model specifically focused on speech-to-speech. It is aimed at developers who want to let users of their applications converse (in their own language) with an AI that also responds in that language. According to AWS, conversations do not have to stay strictly on a single topic. The model handles things asynchronously, which means, among other things, that users can change the subject without confusing the model.

The last model attempts to bring together all the modalities you encounter in AI. Nova 2 Omni should be able to handle text, images, video, and speech as input, and it delivers text and images as output. According to AWS, this makes it the first provider to offer such a model. One of the goals of Nova 2 Omni is to keep the cost of deploying AI models within limits. Because a single model covers all these modalities, there is no need to chain different models together. This reduces not only costs but also the complexity of an organization's overall environment.

Nova Forge: build your own frontier model

In our opinion, the most interesting Nova announcement is Nova Forge. With it, AWS wants to open up the market for building frontier models. Nova Forge is a service that allows organizations to build their own frontier models. As the name suggests, these models are based on AWS's own Nova models. It is therefore also a way for AWS to bring its own models (and thus Amazon Bedrock) to the attention of a wider audience.

AWS has even come up with a name for the frontier models built with Nova Forge: Novellas. Each Novella is essentially a combination of customers’ own data and the capabilities offered by Nova. Another interesting feature is that Nova Forge offers customers multiple model checkpoints. They can use pre-trained, mid-trained, and post-trained Nova model checkpoints. This allows them to combine their data with Nova at multiple points during the training of their own Novella. The underlying frontier model is currently Nova Lite, but Nova Forge customers will also be among the first to gain access to Nova 2 Pro and Nova 2 Omni.

Trainium3 accelerates AI training (and inferencing)

Many of the AI updates AWS is making today relate to products and services it offers on top of its own infrastructure. However, that infrastructure is also constantly evolving. During re:Invent, the company announced that the latest Amazon EC2 Trn3 UltraServers are now generally available.

The Trainium3 UltraServers are quite special and important for AWS. Trainium3 is AWS's first AI chip built on a 3nm process. A single Trainium3 UltraServer contains a total of 144 Trainium3 chips. As expected, this latest generation delivers significantly better performance than its predecessor. Compared with the Trn2 UltraServers, it offers 4.4 times higher performance, 3.9 times more memory bandwidth, and four times higher performance per watt. A single Trainium3 chip offers 2.52 petaflops of FP8 computing power. Across the 144 chips in an UltraServer, that amounts to roughly 362 petaflops in total.

Trainium3 is now the fastest AI accelerator that AWS offers. We also noticed repeated emphasis on the fact that Trainium is suitable not only for training AI models but also for inferencing. AWS also has the Inferentia line for that purpose, of course, but apparently Trainium handles inferencing very well too. It might be time to take a closer look at the naming, because this makes things a bit confusing. Still, it is understandable that AWS stresses this point, because inferencing is where much of the future growth is expected.

Bedrock AgentCore gets updates

AWS is also unveiling new Bedrock AgentCore functionality. The new features should make developing agentic AI a lot easier. AgentCore helps organizations set up autonomous, (hopefully) effective, and smart self-service workflows. It is important that these keep working as originally intended. To ensure this, organizations can set specific guidelines, reduce hallucinations, and make sure that bots or workflows comply with predefined policies.

AWS is adding three specific new features: Policy, AgentCore Evaluations, and AgentCore Memory. Policy should enable organizations to set clear boundaries for what applications can and cannot do. Notably, Policy runs in AgentCore Gateway, which matters for two reasons. First, it is not part of the agents' own code. Second, it can intercept agent actions in near real time. This should make it possible to keep agents in line at all times.

AgentCore Evaluations is designed to assist developers. It gives them continuous insight into the quality of their agents by evaluating agent behavior. Evaluations is less about setting boundaries and more about how agents perform within those boundaries. AWS ships thirteen built-in evaluators. These cover quality criteria such as correctness, accuracy in selecting tools, safety, whether agents achieve their goals, and whether their responses are actually helpful. Developers can also create their own evaluators and add them to AgentCore Evaluations.

The last AgentCore addition we will discuss here is AgentCore Memory. It basically does what the name suggests. Many AI agents effectively lose their memory as soon as a new interaction starts. AgentCore Memory, by contrast, lets an agent get to know users better over time. AI agents can learn from previous interactions and experiences and apply those insights to new and future interactions.

AWS Frontier Agents for software development

AWS also presented what it calls a new class of AI agents: Frontier Agents. They function as an extension of development teams. Among other things, these agents can work completely autonomously to draft project proposals, generate code, and update dependencies.

According to AWS, pilots have shown that Frontier Agents can do months of work in just a few days. The Professional Services Delivery Agent starts projects based on diagrams or meeting notes. It generates project proposals and work descriptions in a matter of hours. According to AWS, this used to take weeks.

The agent works with specialized sub-agents that write, test, and deploy code. For COBOL mainframe, VMware, and .NET workloads, the system automatically brings in the AWS Transform service. Migrating software often also means updating dependencies, and AWS claims that the agent takes care of most of this using Transform.

AWS is not only announcing the concept of the Frontier Agent, it is also launching three of them at once. These are the Kiro Autonomous Agent, Security Agent, and DevOps Agent. The first can be seen as an extension of the Kiro IDE offered by AWS itself. Its main purpose is to help developers build applications. The Security Agent is designed to ensure that applications are and remain safe and secure. Finally, the DevOps Agent should make it easier to quickly resolve application issues.

New AI Factories in customer data centers

AWS AI Factories enable organizations to convert their existing infrastructure to AI environments. The service focuses on accelerating AI adoption without companies having to replace their entire infrastructure. AI Factories therefore allow organizations to build on the investments they have already made in their IT environment.

In other words, the solution integrates with existing systems and extends them with AI capabilities. This makes it easier for organizations to take the step towards production-ready AI. Note that this requires AWS hardware to be installed in the organization's own data center. So it is not just a service but also physical hardware, which is quite something. Besides AI accelerators, it includes networking, storage, databases, and security. You are essentially turning your own data center into a mini AWS data center, with the necessary AWS services running on top and AWS managing the environment.

The reason why AWS offers this is obvious. In theory, at least, it makes it easier for organizations to get started with AI. This can be a godsend, especially for governments and regulated industries. They don’t have to move to the cloud, but can use their own environment.

AWS wants to expand the market

Taken together, the AI announcements discussed in this article make it clear that AWS wants to expand the market for AI. Many of them are meant to enable more organizations to get started with (agentic) AI. Of course, AWS is also doing this to become and remain relevant in this market.

The new Nova models should put AWS more firmly on the map as a provider of its own frontier models, offering good performance at a competitive price. With Nova Forge, it now has an extra string to its bow. However, AWS must be careful not to undermine the principle it has always emphasized with Amazon Bedrock: offering customers a broad choice of models.

AWS also continues to demonstrate that it is working hard to make the underlying infrastructure more powerful and widely applicable. The new Trainium3 UltraServers are a good example of this, but AWS AI Factories also contribute to this. Finally, it is good to see that AWS is also taking responsibility for ensuring that AI agents can make optimal use of everything that AI models and AI stacks have to offer.

All in all, we may not have seen anything earth-shattering from AWS this week, but we did see a strong broadening and deepening of its offering. In many ways, we believe this is more valuable than yet another shiny announcement that is still in its infancy.