Amazon Web Services is hosting its annual re:Invent conference this week, where it showcases the latest innovations in its cloud portfolio. The opening keynote by AWS CEO Adam Selipsky was mostly a show of power in which the company demonstrated that it is the market leader. However, there was also a big announcement at the very end.

Officially, there was already an opening keynote on Monday night (local time in Las Vegas), in which some chip innovations were revealed. Still, we see Selipsky’s keynote as the actual opener. This is where AWS presents its overall story and its most important innovations.

AWS re:Invent is back, by the way; last year, COVID impacted the event a lot, and attendance was much lower. This year, 50,000 people are attending re:Invent in Las Vegas, and another 300,000 customers and partners have registered to follow the event online. Selipsky also reported that AWS is the most popular cloud among unicorns, which are startups worth more than $1 billion. According to Selipsky, there are now more than 1,000 unicorns worldwide, and 84% of them choose AWS.

Customer success on AWS

Selipsky kicked off by mentioning some customers who are innovating using AWS. For example, the BMW Group is developing its electric car platform using AWS. In addition, Nasdaq has moved its matching engine to AWS to process orders on the exchange faster. AWS also helped PrivatBank, the largest Ukrainian bank, with its transition to the cloud. PrivatBank managed to move its entire infrastructure to AWS, including 4 petabytes of customer data, in just 45 days.

Sustainability is high on the agenda

You can’t ignore sustainability as an organization anymore, and AWS has therefore sharpened its goals. By 2025, the company wants to run on renewable energy only. To make this possible, it has become the largest buyer of renewable energy. So, rather than building its own wind or solar farms, AWS is buying up the output of such farms to reach that goal. The target for becoming carbon neutral is still 2040, while competitors like Google Cloud want to achieve that in 2030.

AWS uses billions of gallons of (drinking) water

AWS is also investing in water management. AWS currently consumes 0.25 liters of water per kWh, while the industry average is 1.8 liters per kWh, according to AWS. By 2030, the company wants to give more water back to the community than it consumes. That’s going to be a huge undertaking because AWS has hundreds of data centers worldwide, only 20 of which use recycled water to date. However, those data centers can provide the community with 2.4 billion gallons of water annually. Farmers, for example, can use it to irrigate their land. It also means that AWS can save billions more gallons of water in the coming years. Not all that water is drinking water, by the way; it can also come from rivers, the sea or industry. However, quite a few of those gallons are drinking water. There’s no getting around that.
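
To put those figures in perspective, here is a minimal Python sketch of the arithmetic. The two liters-per-kWh values and the 2.4 billion gallons come from AWS's statements above; the 1 GWh workload is a hypothetical number chosen for illustration, and we assume the gallons are US gallons.

```python
# Figures quoted by AWS in the keynote.
AWS_LITERS_PER_KWH = 0.25
INDUSTRY_LITERS_PER_KWH = 1.8

# Assumption: "gallons" means US liquid gallons (3.785411784 liters each).
LITERS_PER_US_GALLON = 3.785411784


def water_use_liters(energy_kwh: float, liters_per_kwh: float) -> float:
    """Water consumed for a given amount of data center energy."""
    return energy_kwh * liters_per_kwh


# Hypothetical workload of 1 GWh; this is not an AWS figure.
energy_kwh = 1_000_000

aws_liters = water_use_liters(energy_kwh, AWS_LITERS_PER_KWH)
industry_liters = water_use_liters(energy_kwh, INDUSTRY_LITERS_PER_KWH)
ratio = INDUSTRY_LITERS_PER_KWH / AWS_LITERS_PER_KWH

# The 2.4 billion gallons returned to communities, converted to liters.
returned_liters = 2.4e9 * LITERS_PER_US_GALLON

print(f"AWS:      {aws_liters:,.0f} liters per GWh")
print(f"Industry: {industry_liters:,.0f} liters per GWh ({ratio:.1f}x AWS)")
print(f"Returned: {returned_liters / 1e9:.2f} billion liters per year")
```

By AWS's own numbers, the industry average is roughly seven times its consumption per kWh, and the recycled water adds up to about nine billion liters per year.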

The water consumption of data centers has already been raised in politics in the Netherlands. Worldwide, however, the consumption of (drinking) water by data centers is a significant but largely unknown problem. It is good that AWS has already started to save, recycle and return water, but some regulation to force all major data center players to do so would not hurt.

Saving money by migrating to the cloud?

Going completely cloud-only as an organization is quite difficult. For SMBs, it’s still relatively easy and affordable. For enterprise organizations, it usually means clearing a big hurdle and spending a lot of money. The general conclusion is that it is more expensive than opting for a hybrid cloud strategy, where you as an organization have your own data centers or colocation in addition to your cloud infrastructure. Selipsky cited some examples of companies that actually saved money by moving to the cloud. According to him, Carrier saved 40% in costs by migrating to the AWS cloud, Gilead saved $60 million, and AGCO has 78% lower costs in the cloud. We’re especially curious about the details behind these figures because we didn’t get them.

Another example that is easily explainable is Airbnb. That company managed to save $63.5 million in cloud spending during COVID. Airbnb runs entirely in the cloud and had much less activity on the website because vacations were not an option for a while. So they could keep the website up and running with much less infrastructure. In the cloud, you can scale down more quickly than in your own data center.

Display of power around data

After this, Selipsky started the power play of an industry leader. For example, he talked about the data explosion we are currently in. Organizations have an increasing focus on data, data analytics and making data available.

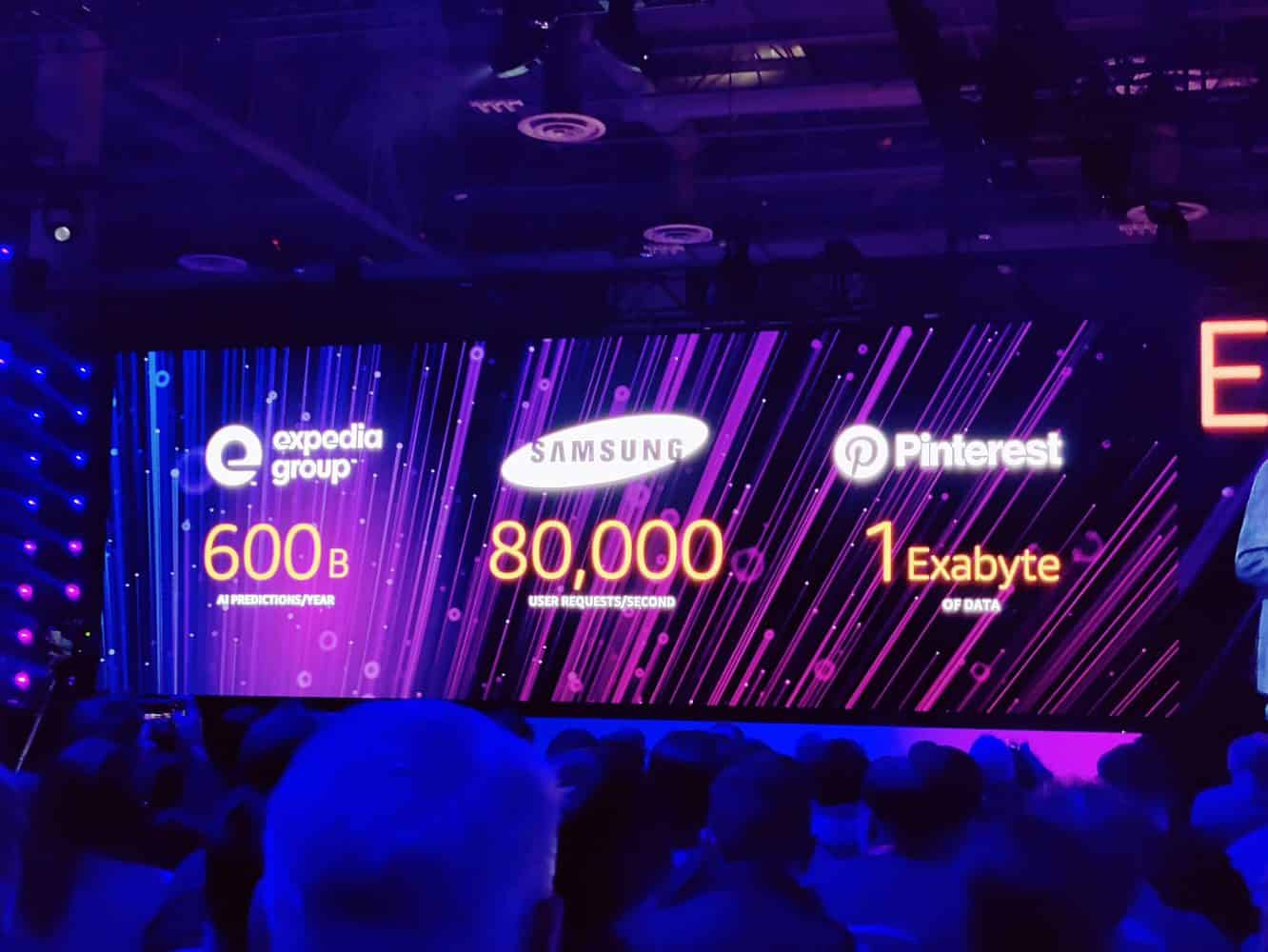

Examples he cites include Pinterest, with 1 exabyte of data, the Expedia Group, which makes 600 billion AI predictions per year, and Samsung, which, with its 1.1 billion customers, accounts for 80,000 user requests per second on the AWS cloud.

He then talks about having the right tools, integrations, governance and insights, and shows new solutions or features for each of these categories.

Databases

Amazon Aurora is presented as the most successful cloud database. It is said to be five times faster than MySQL and three times faster than PostgreSQL, but more importantly, according to Selipsky, it comes at 1/10 of the cost of a commercial database. That is probably a comparison with the Oracle Autonomous Database.

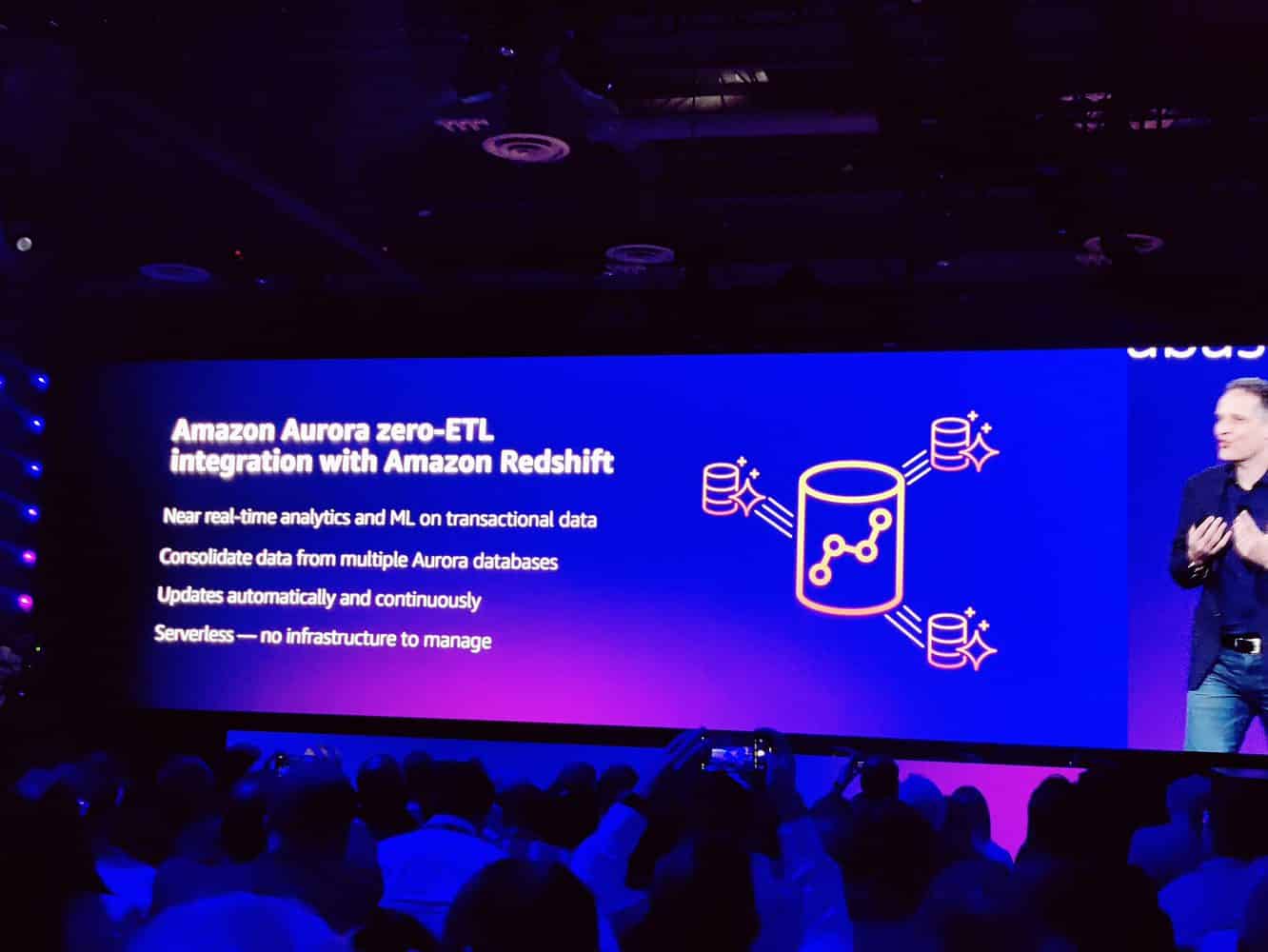

He then talks about integrations with Amazon Redshift, AWS’ cloud data warehouse. You can now quickly move Amazon Aurora tables and columns into Amazon Redshift for instant analysis. The same goes for Apache Spark. Amazon states that it has made sure Apache Spark runs best on AWS, and it now also offers the ability to transfer Apache Spark data to Amazon Redshift in a few clicks, again to make data available for analytics within seconds. This fits within AWS’ zero-ETL goal: making data available for analysis in near real-time without customers having to build their own pipelines to extract, transform and load it.
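
To illustrate what zero ETL is meant to remove, here is a minimal sketch of a hand-written ETL job (extract, transform, load) in Python. It uses two in-memory SQLite databases as stand-ins for an operational database and a data warehouse. The table names, columns and the `run_etl` function are hypothetical, and nothing here uses an AWS API.

```python
import sqlite3

# Stand-in for an operational database (the role Aurora plays in the article).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, amount_cents INTEGER, country TEXT)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 1250, "nl"), (2, 9900, "us"), (3, 400, "nl")],
)

# Stand-in for an analytics warehouse (the role Redshift plays in the article).
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE sales (id INTEGER, amount REAL, country TEXT)")


def run_etl(src: sqlite3.Connection, dst: sqlite3.Connection) -> int:
    """Copy orders into the warehouse; returns the number of rows loaded."""
    # Extract: read the raw rows from the operational database.
    rows = src.execute("SELECT id, amount_cents, country FROM orders").fetchall()
    # Transform: convert cents to currency units and normalize country codes.
    cleaned = [(i, cents / 100, country.upper()) for i, cents, country in rows]
    # Load: write the cleaned rows into the warehouse table.
    dst.executemany("INSERT INTO sales VALUES (?, ?, ?)", cleaned)
    dst.commit()
    return len(cleaned)


loaded = run_etl(source, warehouse)
totals = dict(
    warehouse.execute("SELECT country, SUM(amount) FROM sales GROUP BY country").fetchall()
)
print(loaded, totals)
```

In a traditional setup, a job like this has to be scheduled, monitored and adapted every time the source schema changes. The promise of the integrations described above is that the platform handles this replication for you.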

Serverless OpenSearch

Next, a new database service is briefly announced: the serverless OpenSearch Service. OpenSearch is Amazon’s fork of Elasticsearch. It is now also offered serverless, so you don’t have to worry about the underlying infrastructure.

Amazon SageMaker

In the category of tools and integrations, SageMaker cannot be left out. With Amazon SageMaker, Amazon owns the largest AI development platform. Meanwhile, over 10,000 customers use SageMaker to develop and train AI models with billions of parameters. Selipsky highlights support for TensorFlow, PyTorch and MXNet. He also highlights SageMaker Studio, the first-ever IDE for machine learning. According to Selipsky, we will hear a number of announcements surrounding SageMaker tomorrow. To be continued, in other words.

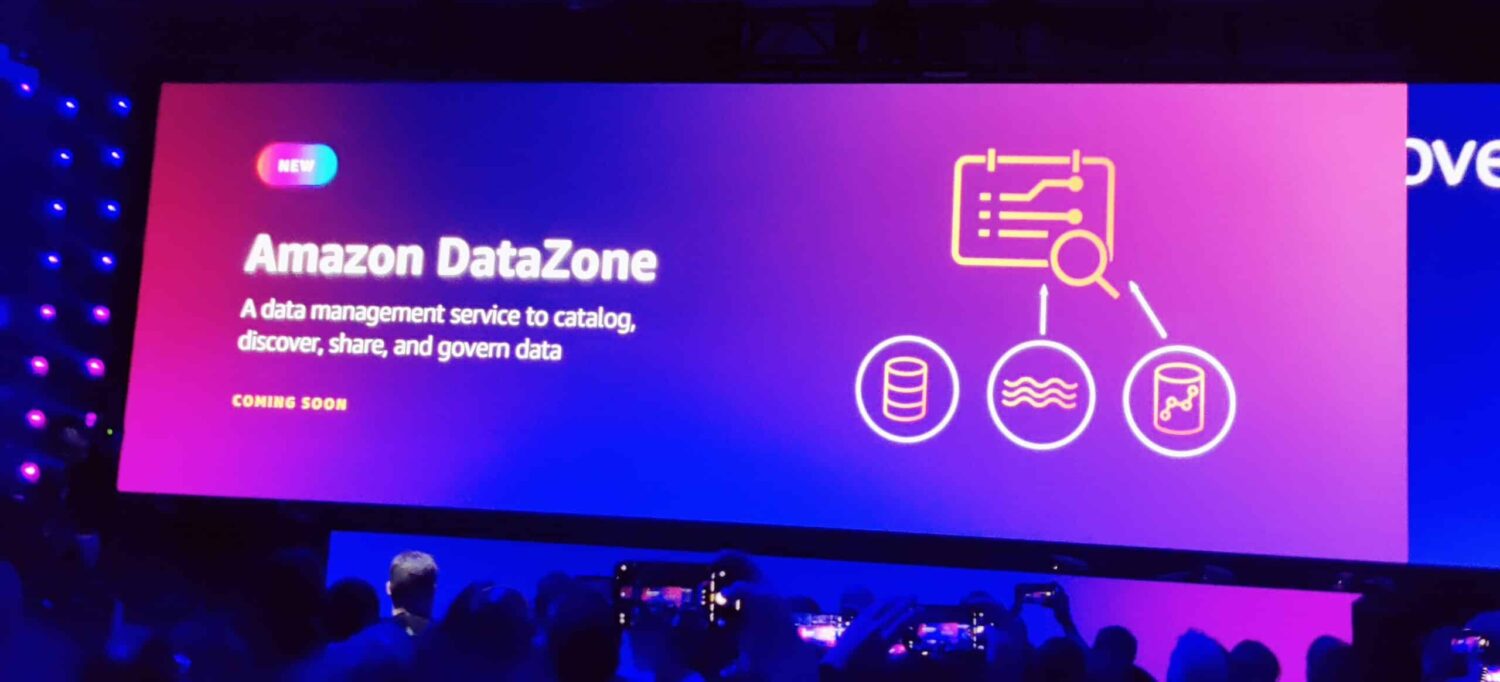

Amazon offers a governance tool with Amazon DataZone

With Amazon DataZone, AWS now offers a tool in which you can catalogue your organization’s data. On the one hand, capturing all data in such a tool helps with governance and compliance. On the other hand, it makes data visible to colleagues. If there are other projects where such data can be helpful, then other departments or individuals can request access to that data. The data can also be shared with specific applications. That way, as an organization, you keep track of what data you have and who has access to it. All data is easily accessible through a web portal. In addition, Amazon DataZone has APIs, making it easier for other applications to integrate with specific data sets.

Amazon Security Lake

Recently, the Open Cybersecurity Schema Framework (OCSF) was established, a framework for how security data should be stored. Many major security players have joined this, including AWS, Broadcom, CrowdStrike, IBM, Palo Alto Networks, Okta, Rapid7, Salesforce, Splunk, Sumo Logic, Tanium, Trend Micro and Zscaler.

AWS immediately took advantage of this with the introduction of Amazon Security Lake, a solution in which you can bring all your organization’s security data together in one data lake. Security solutions can connect directly to this data lake to add data to it, but also to analyze data they have not collected themselves. AWS also provides its own tools to analyze all that security data. That way, patterns can be discovered between firewall logs from Cisco or Palo Alto Networks, authentication logs from Okta and security threat data from SentinelOne, for example.
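
The value of a shared schema is easiest to see in a small example. The Python sketch below normalizes two vendor-specific log records into one common shape and then correlates them. All field names, records and functions are hypothetical and heavily simplified. They are not the actual OCSF attributes, nor the log formats of any real product.

```python
from collections import defaultdict

# Hypothetical vendor records; real products emit different fields.
firewall_log = {"src": "203.0.113.7", "act": "deny", "ts": 1669800000}
auth_log = {"client_ip": "203.0.113.7", "outcome": "FAILURE",
            "time": 1669800030, "user": "alice"}


def from_firewall(rec: dict) -> dict:
    """Map a firewall record onto the simplified common schema."""
    return {"source": "firewall", "ip": rec["src"], "time": rec["ts"],
            "outcome": "blocked" if rec["act"] == "deny" else "allowed"}


def from_auth(rec: dict) -> dict:
    """Map an authentication record onto the simplified common schema."""
    return {"source": "auth", "ip": rec["client_ip"], "time": rec["time"],
            "outcome": "blocked" if rec["outcome"] == "FAILURE" else "allowed"}


def suspicious_ips(events: list) -> list:
    """Return IPs that were blocked by more than one kind of security tool."""
    sources_by_ip = defaultdict(set)
    for event in events:
        if event["outcome"] == "blocked":
            sources_by_ip[event["ip"]].add(event["source"])
    return sorted(ip for ip, sources in sources_by_ip.items() if len(sources) > 1)


events = [from_firewall(firewall_log), from_auth(auth_log)]
print(suspicious_ips(events))
```

Once every tool writes to the same schema, the per-vendor mapping functions disappear and only the correlation logic remains, which is the point of a standard like OCSF.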

Display of power around compute

After the story about data, Selipsky moves on to compute because it should be clear that Amazon is becoming a big player in data center chips. AWS now has powerful chips for generic workloads and specialized chips for machine learning.

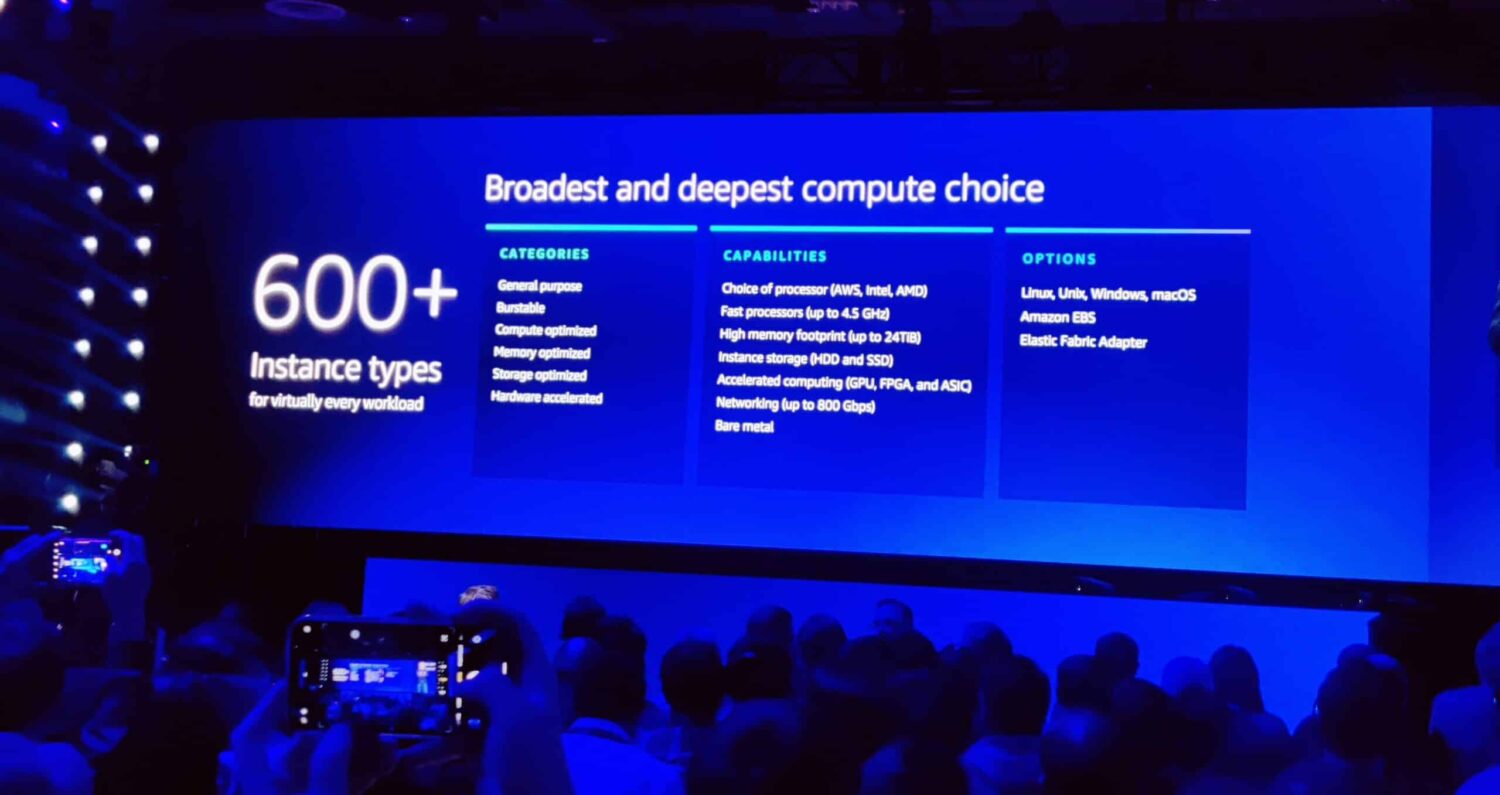

Selipsky states that AWS now offers more than 600 compute options with EC2. We get a brief history lesson on the development of AWS Nitro. This is AWS’ infrastructure chip used with every EC2 instance. Nitro handles the hypervisor, the network and storage controllers, some security functions and a bit of monitoring. Because a separate chip does all of this, it has no negative impact on the CPU on which the customer’s workloads are running. In addition, the hypervisor runs completely separate from the customer environment, which is much more secure.

Selipsky stated that with Graviton3, AWS has achieved great success. This chip is 25 percent faster than its predecessor, and the Graviton chips have proven themselves by now. Organizations were initially reluctant to switch from the familiar Intel chips to AWS’ own chips, but that is over, especially now that customers can very quickly save 20 percent in costs by switching EC2 instances to Graviton3-powered instances.

You can’t ignore Amazon’s own chips for machine learning, either. The company has two different chip series for machine learning. On one side are the Trainium chips for training machine learning models, and on the other side are the Inferentia chips, which are used to make predictions based on AI models. According to Selipsky, the Trn1 instance lowers the cost of training ML models by 50 percent. In turn, the Inf1 instance has a 70 percent lower cost per prediction.

Qualtrics is cited as an example. That company analyzes data based on surveys, social media and user feedback. They have saved about 40 percent in costs by switching to AWS’ ML chips.

To build on this, AWS is now introducing the Inf2 instance, which offers four times the throughput at one-tenth of the latency of its predecessor.

AWS bets on chips for high-performance computing

AWS is also looking to make a bigger mark in high-performance computing. It already offered the ability to run HPC workloads on AWS with the Hpc6a instance, which is powered by a third-generation AMD EPYC chip. For many organizations, this instance is particularly interesting because it is x86-based.

For organizations that have HPC workloads and are not tied to x86, there will be an instance based on Graviton3: the Hpc7g instance contains a Graviton3E chip. In addition, AWS is introducing the Hpc6id, an instance that offers the best price/performance for data- and memory-intensive HPC workloads. The Hpc6id is available immediately.

AWS Supply Chain is Amazon’s newest business application in addition to Contact Center

AWS already had its first proprietary business application with Amazon Connect, its cloud contact center service, which has significantly changed the world of contact centers. With Amazon Connect, you can easily build a contact center and deploy it anywhere in the world. Especially at the beginning of the COVID pandemic, many companies switched to it because they needed thousands of call center agents working from home within 24 hours. That solution is now being extended with a number of additional features as well.

One of the new features is a forecasting module so organizations can make better schedules for peak times of the day. There will also be a module to better monitor agent performance. The most important announcement for the contact center, as far as we are concerned, is the new Agent Workspace. This is a new user interface where agents are presented with step-by-step actions to assist customers better. We expect this to integrate with an internal knowledge base, but Selipsky did not go into great depth on this yet.

AWS Supply Chain is perhaps the most important announcement

According to Selipsky, customers often ask AWS if they could combine the solutions and knowledge Amazon has of supply chain processes with AWS. Amazon is one of the largest online retailers and thus has tremendous supply chain knowledge. That question is now being answered with AWS Supply Chain, a new cloud application that can optimize the supply chain of many organizations.

AWS Supply Chain has built-in integrations with SAP ECC, SAP S/4HANA and Amazon S3, and can also receive data via an EDI connector. You can integrate AWS Supply Chain with these well-known ERP solutions or other ERP systems in just a few clicks. Selipsky states that AWS uses machine learning to create those integrations, so you, as a company, don’t have to select and link all the database fields yourself.

AWS Supply Chain offers a very clear web interface where you can see all your locations and their associated inventory. You can also communicate directly via chat with the inventory managers for each location, should that be needed. However, most of it is automated via machine learning, so you can immediately see where there is a shortage or far too much stock. AWS Supply Chain can also directly suggest moving specific stock so that it is better distributed across the different locations. In doing so, it also considers the distances between different locations.
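
AWS did not explain how these suggestions are calculated, and the product uses machine learning for it. Purely as an illustration of the idea, the Python sketch below uses a simple greedy rule instead: every location with a shortage is supplied from the nearest location with a surplus. The `Site` class, the locations, the stock levels and the abstract map coordinates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    stock: int    # units currently on hand
    target: int   # units the site should hold
    x: float      # hypothetical map coordinates
    y: float


def distance(a: Site, b: Site) -> float:
    """Straight-line distance between two sites."""
    return ((a.x - b.x) ** 2 + (a.y - b.y) ** 2) ** 0.5


def suggest_moves(sites: list) -> list:
    """Greedy sketch: fill each shortage from the nearest site with surplus."""
    by_name = {s.name: s for s in sites}
    surplus = {s.name: s.stock - s.target for s in sites if s.stock > s.target}
    moves = []
    for short in sorted((s for s in sites if s.stock < s.target), key=lambda s: s.name):
        need = short.target - short.stock
        # Donors that still have surplus, nearest first.
        donors = sorted((n for n in surplus if surplus[n] > 0),
                        key=lambda n: distance(by_name[n], short))
        for donor in donors:
            if need == 0:
                break
            qty = min(need, surplus[donor])
            surplus[donor] -= qty
            need -= qty
            moves.append((donor, short.name, qty))
    return moves


sites = [
    Site("Amsterdam", stock=120, target=80, x=0, y=0),
    Site("Berlin", stock=30, target=60, x=6, y=1),
    Site("Paris", stock=90, target=70, x=-3, y=-4),
    Site("Vienna", stock=10, target=40, x=9, y=-3),
]

for donor, receiver, qty in suggest_moves(sites):
    print(f"Move {qty} units from {donor} to {receiver}")
```

A real system would also weigh transport costs, lead times and demand forecasts, but the sketch shows why distance matters: the closest surplus is used first and more distant stock only when it runs out.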

The main goal of AWS Supply Chain is to reduce risk and lower costs. It provides a very clear view of your inventory. Selipsky also immediately states that this is only the beginning of AWS Supply Chain and that the product will gain many new features in the future. Organizations can quickly get in on AWS Supply Chain; the company does not work with large upfront licensing fees.

Conclusion

All in all, AWS introduced a hefty number of new solutions in just two hours. Many existing solutions have been expanded, but what the company leaves behind most of all is an impression of dominance and power over the IT industry. It is the global leader in the cloud in terms of market share, and probably technologically as well. On the one hand, that makes AWS very interesting; on the other hand, perhaps a bit too powerful. Microsoft and Google have more work to do to keep up.