Amazon Web Services has kicked off its annual AWS re:Invent conference with the opening keynote, in which a number of announcements have already been made. One of the most important this year is undoubtedly the introduction of Graviton 3, a new ARM-based processor that AWS says delivers more performance at lower cost and with lower power consumption. In addition, AWS is introducing new instances for both general compute and machine learning training.

The new Graviton 3 chip is likely to prompt many AWS customers to once again swap their Intel-based instances for Graviton-based ones. With Graviton 2, AWS already had a chip that could outperform Intel's Xeon data centre processors on many workloads, and with Graviton 3 it goes one step further.

According to AWS, Graviton 3 delivers about 25 percent more performance than Graviton 2 on typical workloads. It performs floating-point and cryptographic operations twice as fast, and it runs machine learning workloads three times as fast as its predecessor. On top of this performance gain, Graviton 3 is also considerably more energy efficient: AWS says it uses up to 60 percent less energy for the same performance than comparable EC2 instances. Because AWS once again claims the best price/performance for this chip, it is likely to power many cloud workloads in the near future.

Graviton 3 will be offered through the new C7g instance.

Intel will be less pleased with the arrival of Graviton 3. The company still needs a few years to become competitive again and will have to watch as even more workloads move to chips that are not Intel-based.


AWS Trainium chips

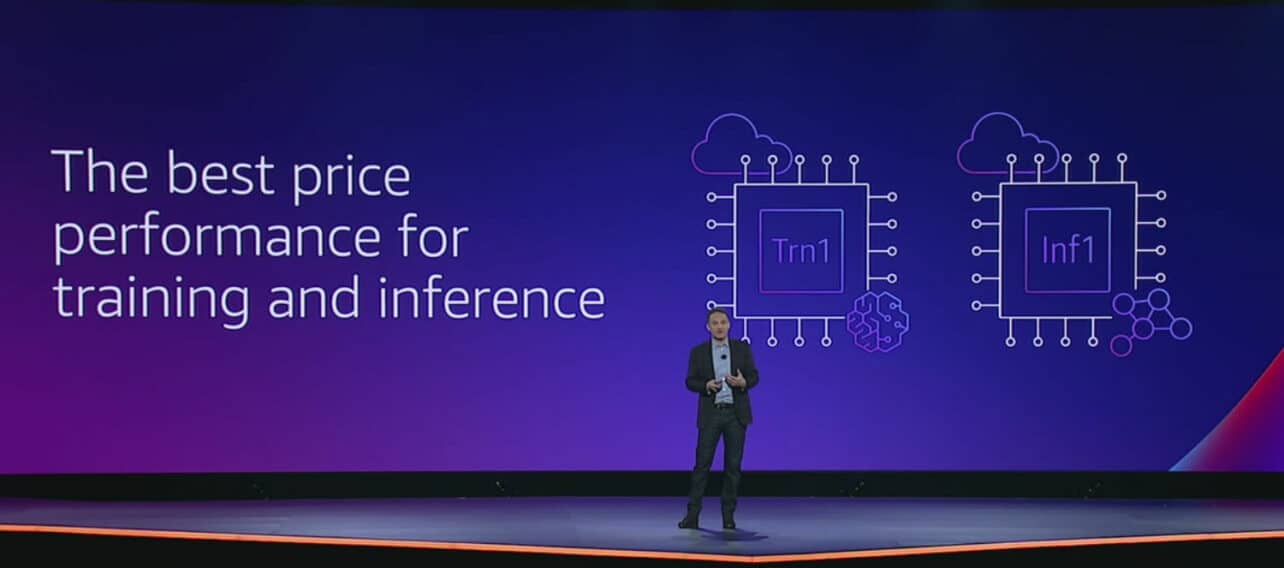

Last year AWS introduced Trainium, a chip designed for training machine learning models. It is now available through the Trn1 instance in EC2, so any customer can use it to train their own models. What sets Trn1 apart is that it can be scaled out into clusters of tens of thousands of Trainium chips, enough to train even the largest and most complex machine learning models.