Hugging Face unveils SmolLM, a family of compact language models suitable for local use on personal devices. Microsoft and Meta already offer such models, but SmolLM outperforms them in benchmark tests.

SmolLM is a new family of language models (LMs) from Hugging Face. It comprises three models with 135 million, 360 million and 1.7 billion parameters. In a high-end LLM, that number runs into the trillions. The developers of those models do not publish exact figures, but GPT-4 Turbo is estimated to contain 1.76 trillion parameters, Claude 3 Opus 2 trillion and Gemini 1.5 Pro at least 1.6 trillion.

The number of parameters in AI models is growing at lightning speed, which makes it almost impossible to set a firm threshold for how many parameters a model needs to count as “large”. In any case, the new Hugging Face family is considered compact: its largest model, with 1.7 billion parameters, is close in size to OpenAI’s GPT-2 from 2019 (1.5 billion parameters).
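To put these sizes side by side, the short Python sketch below divides the parameter counts quoted above. It is only arithmetic on the article's own figures; keep in mind that the frontier-model numbers are outside estimates rather than figures published by the developers, and that the dictionary and function names are illustrative.

```python
# Parameter counts quoted in the article. The frontier-model figures are
# third-party estimates, not numbers published by the developers.
SMOLLM = {
    "SmolLM-135M": 135e6,
    "SmolLM-360M": 360e6,
    "SmolLM-1.7B": 1.7e9,
}
GPT2 = 1.5e9  # GPT-2 (2019)
FRONTIER_ESTIMATES = {
    "GPT-4 Turbo": 1.76e12,
    "Claude 3 Opus": 2.0e12,
    "Gemini 1.5 Pro": 1.6e12,  # quoted as "at least" this many
}


def size_ratios(reference: float) -> dict[str, float]:
    """Return how many times larger each frontier estimate is than `reference`."""
    return {name: params / reference for name, params in FRONTIER_ESTIMATES.items()}


if __name__ == "__main__":
    largest = SMOLLM["SmolLM-1.7B"]
    print(f"SmolLM-1.7B vs GPT-2: {largest / GPT2:.2f}x")  # 1.13x
    for name, ratio in size_ratios(largest).items():
        print(f"{name} is ~{ratio:,.0f}x the size of SmolLM-1.7B")
```

On these estimates, each frontier model is roughly a thousand times larger than the biggest SmolLM model, while SmolLM-1.7B is only about 13 percent larger than GPT-2.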

High-quality training data

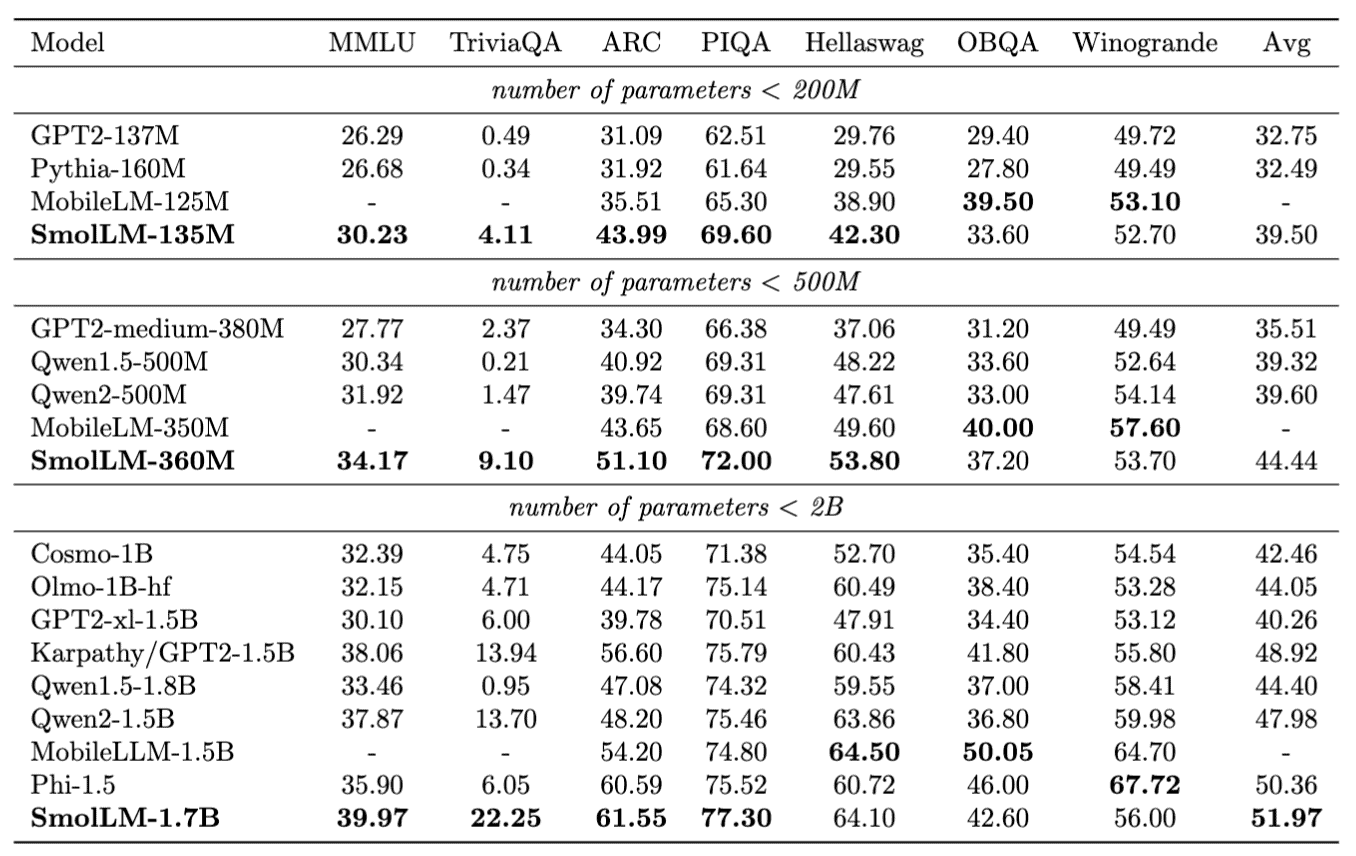

SmolLM puts these parameters to much more targeted use than the 2019 OpenAI model. Its performance also exceeds that of recently released models such as Meta’s MobileLLM, which came out earlier this month. In benchmark tests, MobileLLM improved on previous models of the same size by 2.7 to 4.3 percent. Note, however, that each SmolLM model contains about 10 million more parameters than the Meta model it is compared with.


Source: Hugging Face

According to the researchers, the strong benchmark results stem from the high quality of the training data. For training, Cosmopedia v2, Python-Edu and FineWeb-Edu were combined. These datasets all have an educational focus and were thoroughly filtered before being merged into a single training corpus for the SmolLM family.
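The general idea of "filter each dataset for educational content, then combine them" can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Hugging Face's actual pipeline: the `Sample` type, the per-sample `edu_score` field and the cut-off of 3.0 are all hypothetical, and the toy data only stands in for the three real datasets.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator

# Hypothetical cut-off on a hypothetical "educational value" score.
# Hugging Face's actual filtering criteria are not reproduced here.
EDU_THRESHOLD = 3.0


@dataclass
class Sample:
    source: str       # placeholder label for the dataset the sample came from
    text: str
    edu_score: float  # hypothetical per-sample educational score


def filter_educational(samples: Iterable[Sample], threshold: float = EDU_THRESHOLD) -> Iterator[Sample]:
    """Yield only the samples whose educational score meets the threshold."""
    for sample in samples:
        if sample.edu_score >= threshold:
            yield sample


def build_corpus(*sources: Iterable[Sample]) -> list[Sample]:
    """Filter each source dataset, then concatenate the survivors into one corpus."""
    corpus: list[Sample] = []
    for source in sources:
        corpus.extend(filter_educational(source))
    return corpus


if __name__ == "__main__":
    # Toy stand-ins for the three source datasets.
    cosmo = [Sample("cosmo", "Textbook chapter on photosynthesis", 4.2)]
    python_edu = [
        Sample("python-edu", "def add(a, b):\n    return a + b", 3.5),
        Sample("python-edu", "x=1", 1.0),
    ]
    fineweb_edu = [
        Sample("fineweb-edu", "Lecture notes on gravity", 3.0),
        Sample("fineweb-edu", "Buy now! Limited offer!", 0.5),
    ]
    corpus = build_corpus(cosmo, python_edu, fineweb_edu)
    print([s.source for s in corpus])  # ['cosmo', 'python-edu', 'fineweb-edu']
```

Filtering per source before concatenating keeps the quality bar identical across datasets; a real training mix would additionally weight or deduplicate the sources, which this sketch leaves out.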

New step toward cloud independence

Hugging Face’s new family of models is another step forward for AI that runs locally. Such models can reportedly operate entirely without the cloud, which addresses privacy concerns. They could boost AI adoption significantly, especially in business environments where it is crucial that internal data does not leak to the outside world.
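A rough calculation shows why models of this size are practical on personal hardware. The sketch below estimates how much memory the weights alone occupy at a few common numeric precisions. The bytes-per-parameter values are standard for those formats, but the choice of precisions is an assumption for illustration; the article does not say which formats SmolLM is distributed or run in, and real inference also needs memory for activations and caches.

```python
# Approximate storage per parameter at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}

# Parameter counts of the three SmolLM models, as given in the article.
SMOLLM = {"SmolLM-135M": 135e6, "SmolLM-360M": 360e6, "SmolLM-1.7B": 1.7e9}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes).

    Activations, the KV cache and runtime overhead are ignored, so actual
    memory use during inference will be higher.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, params in SMOLLM.items():
        bf16 = weight_memory_gb(params, "bfloat16")
        int4 = weight_memory_gb(params, "int4")
        print(f"{name}: ~{bf16:.2f} GB in bfloat16, ~{int4:.2f} GB in int4")
```

Even the largest model needs only about 3.4 GB for its weights in 16-bit precision. By the same arithmetic, a model with an estimated 1.76 trillion parameters would need roughly 3.5 TB, far beyond a laptop or phone.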

Hugging Face hopes to contribute to this field with the launch of SmolLM. The launch matters for developers because the company is taking an open-source approach and releasing the datasets and training code. This makes further development easier than with previously available models, for which “most of the details about the data curation and training of these models are not publicly available.”