The new version is more affordable and has lower latency, making the ChatGPT maker's powerful model accessible to more people.

A smaller model can be attractive to researchers and developers, who may prefer it when building applications because of its lower computing cost and higher speed. A smaller version uses fewer parameters than the larger one, and in many situations that is enough to perform simpler tasks.

For example, GPT-4o mini, as the model is called, can be used for applications that chain or parallelize multiple model calls. It is also suitable for passing a large volume of context to the model or for communicating with users through quick responses.
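To make "chaining" and "parallelizing" model calls concrete, here is a minimal Python sketch. It does not use OpenAI's API: `call_model` is a hypothetical stand-in that returns a canned string, and the function names and the worker count are assumptions chosen for illustration only.

```python
from concurrent.futures import ThreadPoolExecutor


def call_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned string."""
    return f"summary of: {prompt}"


def summarize_in_parallel(chunks: list[str]) -> list[str]:
    """Parallelize: send one independent call per chunk, keeping input order."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(call_model, chunks))


def summarize_chained(chunks: list[str]) -> str:
    """Chain: feed the combined partial results into one follow-up call."""
    partials = summarize_in_parallel(chunks)
    return call_model(" | ".join(partials))


if __name__ == "__main__":
    print(summarize_chained(["chapter one", "chapter two"]))
```

In a setup like this, every extra call adds cost and waiting time, which is why a cheaper and faster model matters for such applications.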

Performance

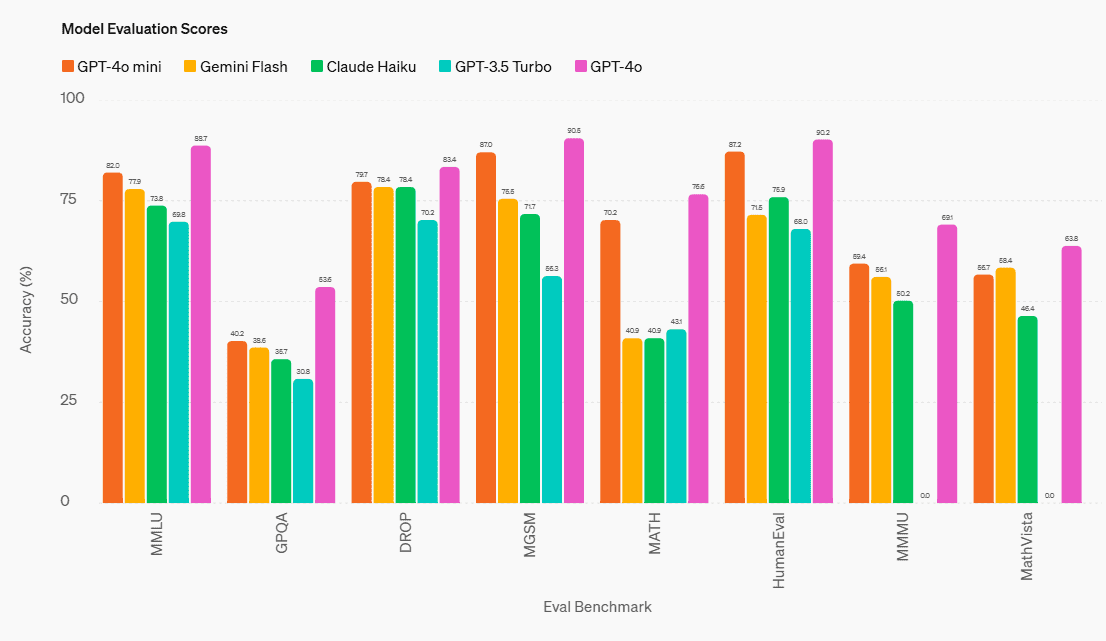

OpenAI also published benchmarks to show the model's capabilities. According to those figures, GPT-4o mini outperforms other small models in textual intelligence and multimodal reasoning. It also performs strongly at function calling, which benefits developers building apps that retrieve data or interact with external systems. Below is a summary of the benchmarks.

For reasoning, the MMLU benchmark is the key measure. There, GPT-4o mini scores 82 percent, significantly better than competitors Gemini Flash (77.9 percent) and Claude Haiku (73.8 percent). For math and coding, MGSM and HumanEval are the leading benchmarks, and OpenAI's new model scores higher than both competitors there as well. Finally, the MMMU is worth highlighting, as it serves as a standard for multimodal reasoning. There, GPT-4o mini sits near 60 percent, while Flash and Haiku achieve 56.1 percent and 50.2 percent, respectively.

GPT-4o mini is available immediately for ChatGPT Free, Plus, and Team users. Enterprise users will follow next week. Developers pay 15 cents per 1 million input tokens and 60 cents per 1 million output tokens.
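To get a feel for what those prices mean in practice, the arithmetic can be sketched in a few lines of Python. The two rates come from the figures above; the function name and the example token counts are assumptions for illustration, not part of any OpenAI tooling.

```python
# Prices quoted in the article, in US dollars per 1 million tokens.
INPUT_PRICE_PER_MILLION = 0.15
OUTPUT_PRICE_PER_MILLION = 0.60


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in US dollars for one request or workload."""
    total = (
        input_tokens * INPUT_PRICE_PER_MILLION
        + output_tokens * OUTPUT_PRICE_PER_MILLION
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical request: 2,000 input tokens and 500 output tokens.
    print(f"${estimate_cost(2_000, 500):.4f}")
```

At these rates, the hypothetical request of 2,000 input tokens and 500 output tokens costs about 0.06 cents, and a workload of 1 million input and 1 million output tokens comes to 75 cents.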
