Microsoft has developed Windows Agent Arena, a benchmark that measures how well AI assistants can help Windows users with their tasks.

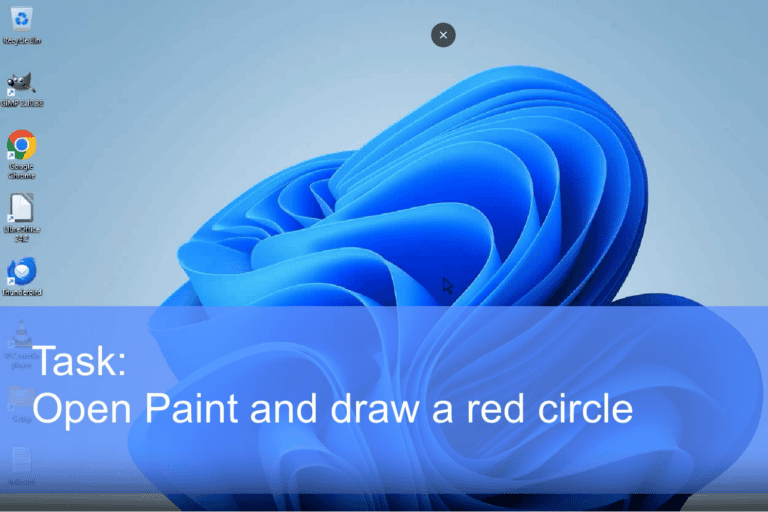

The benchmark specifically tests how AI assistants perform on Windows PCs. It measures both the accuracy of the tasks performed and the speed at which the AI agent interacts with commonly used Windows apps. Tested items include the web browsers Microsoft Edge and Google Chrome, system functions such as Explorer, and apps such as Visual Studio Code, Notepad, Paint, and the clock. The test comprises 150 different operations.

AI agents are not convincing at the moment

The technology apparently needs to mature further before it can convince Windows users that AI agents are a real help on the PC. Microsoft Research, which developed the benchmark, also built an agent called Navi. That agent achieved an overall score of only 19.5 percent, compared with a human success rate of 74.5 percent. Even so, Windows Agent Arena gives AI agent developers a good measure of how their latest work performs.
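To put the two reported scores side by side, here is a minimal Python sketch of the arithmetic. The function name `compare_success_rates` and its return keys are hypothetical and chosen only for illustration; they are not part of Windows Agent Arena. The only inputs are the two percentages quoted above.

```python
def compare_success_rates(agent_pct: float, human_pct: float) -> dict:
    """Compare two success rates given in percent (hypothetical helper)."""
    # Absolute gap in percentage points between human and agent.
    gap_points = human_pct - agent_pct
    # Agent score expressed as a fraction of the human score.
    agent_share = agent_pct / human_pct
    return {
        "gap_points": round(gap_points, 1),
        "agent_share_of_human": round(agent_share, 3),
    }


# Scores as reported in the article: Navi 19.5 percent, humans 74.5 percent.
result = compare_success_rates(19.5, 74.5)
print(result)
```

With the reported figures, the gap comes to 55 percentage points, and the agent reaches roughly a quarter of the human success rate.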

Rogerio Bonatti, the study’s lead author, said, “Windows Agent Arena provides a realistic and comprehensive environment to push the limits of AI agents. By making our benchmark open-source, we hope to accelerate research in this crucial area within the AI community.”

Developing high-performing AI agents also matters to Microsoft as a way to boost the disappointing sales of Copilot+ PCs. The latest models from PC makers have the capabilities to run AI apps. For that to be useful to users, however, the apps themselves must also deliver.
